Welcome back to our 10th ToyzRgone blogpost! Below, you will find the contributions made by each of our team members:

Hamsa Hashi 💻

This week, I made significant progress in improving the accuracy of pixel coordinates and object detection using camera calibration and object recognition on the Raspberry Pi. My main focus areas were integrating the YOLOv8n model and performing precise camera calibration to enable robust navigation and tracking capabilities.

YOLOv8n Integration on Raspberry Pi

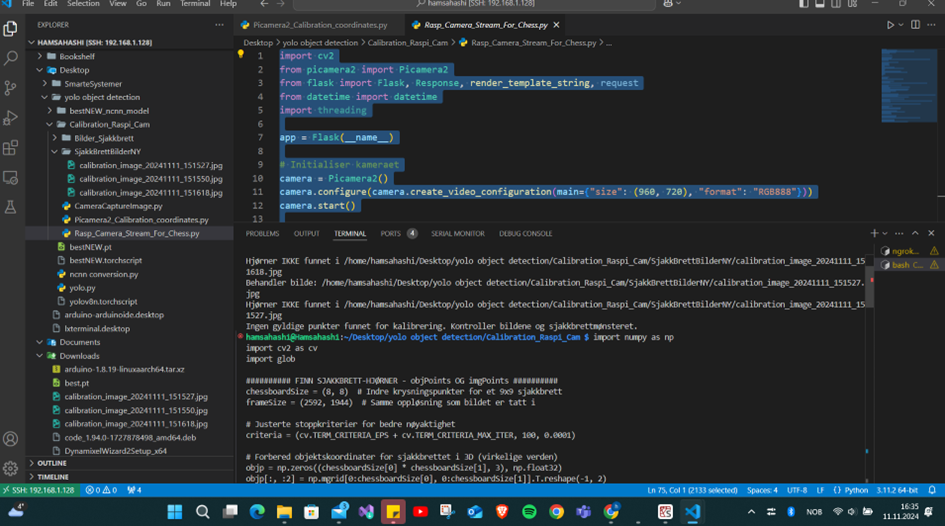

To optimize real-time object detection, I started by integrating the YOLOv8n model on the Raspberry Pi. First, I converted our custom-trained model to the NCNN format, which reduces resource usage and makes inference more efficient. This lets the Raspberry Pi, with its limited computational power, handle detection tasks smoothly.

Additionally, I improved video streaming by redirecting the output of cv2.imshow from the Raspberry Pi to my Windows machine. This change significantly reduced the workload on the Raspberry Pi’s graphics hardware, resulting in better performance and higher FPS. By offloading this task, the system now runs more efficiently, ensuring smoother real-time object detection.

Camera Calibration

A major part of this week was spent calibrating the camera to improve object detection accuracy and allow precise localization of objects in the environment. I chose a calibrated camera instead of LiDAR to simplify the project and save time. LiDAR provides highly accurate 3D coordinates, but it requires complicated hardware and software setups. This project only needs to detect balls lying at a fixed height in Dronesonen, with a known size (8 cm), so a camera calibrated with OpenCV works well for our needs. OpenCV’s tools make it possible to convert pixel coordinates into real-world (x, y) coordinates, so objects can be localized accurately without depth data.
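A known ball size is enough to recover position, and a minimal pinhole-camera sketch shows why. It assumes the pixel coordinates have already been undistorted (for example with `cv2.undistortPoints`). It also assumes `fx`, `fy`, `cx` and `cy` come from the calibration. The 600 px focal length and the 48 px detected ball width (e.g. a bounding-box width) in the usage lines are made-up numbers, not measurements from our camera.

```python
def distance_from_size(focal_px: float, real_size_m: float, size_px: float) -> float:
    """Pinhole model: an object of real size D at distance Z spans f * D / Z pixels, so Z = f * D / size_px."""
    if size_px <= 0:
        raise ValueError("size_px must be positive")
    return focal_px * real_size_m / size_px


def pixel_to_camera_xy(u: float, v: float, fx: float, fy: float,
                       cx: float, cy: float, z: float) -> tuple[float, float]:
    """Back-project an (already undistorted) pixel at known depth z to camera-frame X, Y in z's unit."""
    return (u - cx) * z / fx, (v - cy) * z / fy


# Hypothetical numbers: 8 cm ball, 600 px focal length, 48 px detected width.
z = distance_from_size(600.0, 0.08, 48.0)  # about 1.0 m from the camera
x, y = pixel_to_camera_xy(420.0, 240.0, 600.0, 600.0, 320.0, 240.0, z)
print(f"Z = {z:.2f} m, X = {x:.3f} m, Y = {y:.3f} m")
```

Halving the detected width doubles the estimated distance. This is why an accurate focal length from the calibration matters.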

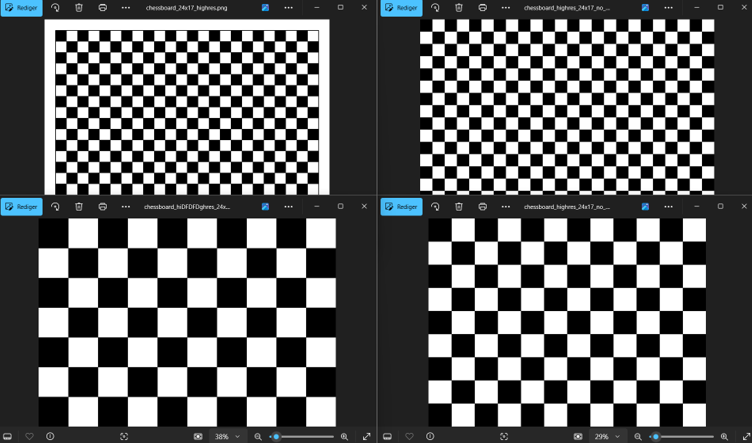

The calibration process began with generating a chessboard pattern in Python. The initial 10×7 grid, with squares measuring 200 pixels, was too small for effective calibration when printed. To resolve this, I increased the grid size to better fit an A3 sheet and removed an unnecessary border that interfered with corner detection. Using a custom Python program, I controlled the Raspberry Pi camera from my Windows machine, capturing images by pressing the spacebar. The camera was positioned at various heights and angles to gather diverse calibration data.
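The pattern-generation step could look something like the sketch below. It writes a binary PGM file using only the standard library; the original script may well have used OpenCV or NumPy instead, and the output file name is hypothetical. It also shows a relation that matters later: a board of `cols × rows` squares has `(cols - 1) × (rows - 1)` inner corners. A 10×7 board therefore gives the 9×6 pattern size that `cv2.findChessboardCorners` expects.

```python
def make_chessboard(cols: int = 10, rows: int = 7, square_px: int = 200) -> list[list[int]]:
    """Grayscale chessboard with cols x rows squares; 0 = black, 255 = white, top-left square black."""
    return [
        [0 if (x // square_px + y // square_px) % 2 == 0 else 255
         for x in range(cols * square_px)]
        for y in range(rows * square_px)
    ]


def inner_corners(cols: int, rows: int) -> tuple[int, int]:
    """Pattern size for cv2.findChessboardCorners: the points where four squares meet."""
    return cols - 1, rows - 1


def save_pgm(path: str, img: list[list[int]]) -> None:
    """Write a binary (P5) PGM image, which most image viewers can open and print."""
    height, width = len(img), len(img[0])
    with open(path, "wb") as f:
        f.write(f"P5\n{width} {height}\n255\n".encode("ascii"))
        for row in img:
            f.write(bytes(row))


if __name__ == "__main__":
    board = make_chessboard(10, 7, 200)
    save_pgm("chessboard_10x7.pgm", board)  # hypothetical file name; scale to A3 when printing
    print(inner_corners(10, 7))  # (9, 6)
```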

Captured images were then processed with OpenCV to detect the chessboard corners. Early attempts using the cv2.findChessboardCorners function failed due to poor lighting and an incorrect chessboardSize. Adjusting the size to 9×6 for inner intersections and retaking the images under improved lighting resolved these issues, enabling accurate corner detection. With this data, I calculated the camera’s intrinsic parameters, including focal length and principal point, and saved the calibration results in an .npz file for future use.
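Calibration pairs each detected corner with its known position on the flat board. The sketch below generates those board coordinates for the 9×6 pattern. The 25 mm square size is a placeholder for whatever the printed A3 squares actually measure. In an OpenCV script, each accepted image contributes one copy of this list (as a float32 NumPy array). These copies go into `cv2.calibrateCamera` together with the corners returned by `cv2.findChessboardCorners`, and the resulting camera matrix and distortion coefficients can be stored with `np.savez`.

```python
def chessboard_object_points(pattern_size: tuple[int, int] = (9, 6),
                             square_size_mm: float = 25.0) -> list[tuple[float, float, float]]:
    """Board-frame (X, Y, Z=0) coordinates of the inner corners, row by row with X varying fastest.

    Same ordering as np.mgrid[0:9, 0:6].T.reshape(-1, 2) * square_size in the OpenCV tutorial.
    """
    cols, rows = pattern_size
    return [(c * square_size_mm, r * square_size_mm, 0.0)
            for r in range(rows) for c in range(cols)]


objp = chessboard_object_points((9, 6), 25.0)  # 25 mm is a placeholder square size
print(len(objp), objp[0], objp[-1])  # 54 (0.0, 0.0, 0.0) (200.0, 125.0, 0.0)
```

The board is flat, so every point has Z = 0. This is what lets calibration recover the camera intrinsics from 2D images alone.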

The calibration had two primary outcomes. First, it allowed for the conversion of pixel coordinates into real-world positions using a transformation matrix computed by OpenCV. Second, it enabled accurate localization of objects, ensuring that their positions could be precisely determined relative to the camera’s orientation.
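The balls always lie on the same plane, so one common way to go from pixels to real-world positions is a planar homography. This is a 3×3 matrix `H` that maps undistorted pixel coordinates to (x, y) on that plane. In OpenCV it can be estimated with `cv2.findHomography` from at least four pixel/plane point pairs. The sketch below only shows how such a matrix is applied, not necessarily the exact transformation we use, and the example matrix is invented.

```python
def pixel_to_floor(H: list[list[float]], u: float, v: float) -> tuple[float, float]:
    """Apply a 3x3 homography to pixel (u, v) and divide by W to get plane coordinates (x, y)."""
    X = H[0][0] * u + H[0][1] * v + H[0][2]
    Y = H[1][0] * u + H[1][1] * v + H[1][2]
    W = H[2][0] * u + H[2][1] * v + H[2][2]
    if abs(W) < 1e-12:
        raise ValueError("pixel maps to a point at infinity (e.g. above the horizon)")
    return X / W, Y / W


# Invented example: 0.5 mm per pixel, with image centre (320, 240) at the plane origin.
H_scale = [[0.5, 0.0, -160.0],
           [0.0, 0.5, -120.0],
           [0.0, 0.0, 1.0]]
print(pixel_to_floor(H_scale, 320, 240))  # (0.0, 0.0)
print(pixel_to_floor(H_scale, 420, 240))  # (50.0, 0.0)
```

A real homography for a tilted camera also has non-zero values in the bottom row. Those values produce the perspective division by `W`.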

https://learnopencv.com/camera-calibration-using-opencv/

Kevin Paulsen 🛠️

This week, I reprinted the servo-to-linear-motion-stick using polycarbonate filament to make it stronger, as it’s the weakest part of the entire robot assembly. I also experimented with laser cutting it from different materials to explore alternative options. First, I tried acrylic, but I’m concerned it might be too thick to use without making major adjustments to the gripper. I also cut it out of plywood and another mystery black material that looks and feels a lot like plywood. Lastly, I tried an unknown material that seems like some kind of fiberglass—it has the same thickness as the 3D-printed part and it seems very strong and durable, so it might be a good backup if the polycarbonate one breaks.

In addition to the material tests, I found myself stepping into the role of repair technician after a few incidents with the robotic arm. One of our team members got a bit too hands-on during a sudden disassembly, which led to a connector between a servo and a joint snapping. I printed and replaced that part, but shortly after, the arm took an unfortunate nosedive off the desk during testing due to, let’s say, a slight lack of caution. This caused a few more parts to break, so I had to jump in for repairs again.

Let’s just say we’ve given the arm’s durability a solid test this week! Everything’s fixed now, and the arm is back together and ready for more testing—with a gentle reminder for a bit of extra caution moving forward.

To the left are the different 3D-printed and laser-cut versions of the servo-to-linear-motion-stick.

As you can see, the robot arm is looking a bit like it needs a dentist appointment! But since everything’s functional for testing purposes, I’ll hold off on making another one for now. We’re expecting the Nylon 3D printed parts next week, so there’s no point in wasting material when this setup is holding up well so far.

Ruben Henriksen 🛠️

This week I produced most of the parts for our robot. I used a laser cutter for the flat panels and SLS 3D printing for almost everything else.

With Kevin’s permission, I made a small modification to the robot arm. The base vehicle had to be made larger to fit all the electronics, so I lengthened the arm segments from 150 mm to 175 mm to make sure the arm can still reach the toys.
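If we treat the arm as two segments moving in one plane (a simplifying assumption; the real arm has more degrees of freedom), the effect on reach is simple arithmetic. The maximum reach is the sum of the segment lengths.

```python
def max_reach(link_lengths_mm: list[float]) -> float:
    """Maximum reach of a planar serial arm: all segments stretched out in a straight line."""
    return sum(link_lengths_mm)


old_reach = max_reach([150, 150])  # original segment lengths (assumed two segments)
new_reach = max_reach([175, 175])  # lengthened segments
print(old_reach, new_reach, new_reach - old_reach)  # 300 350 50
```

Longer segments also raise the torque the servos must supply for the same payload. This is one reason the static study in the next paragraph matters.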

SLS prints parts fully solid, with no infill setting to adjust, so weight and strength have to be optimized in the design itself. Luckily, SolidWorks has built-in tools that help with this. I first performed a topology optimization study, but the results were not what I had hoped for. I used the study to decide which changes to make, and then made a cut to hollow out the arm. I then ran a static study to make sure it could handle the loads before printing. The result was a factor of safety (FoS) of 2.2 with a force equivalent to 1 kg applied to the arm. 1 kg is the expected weight of the arm components plus a payload, so a FoS of 2.2 leaves room in case someone wants to pick up objects heavier than the intended toys.
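For reference, the numbers from the static study work out as follows. This assumes the factor of safety uses the von Mises stress against the material’s yield strength (a typical SolidWorks criterion) and a linear-elastic model, where stress scales in proportion to load:

$$
\begin{aligned}
F &= m g = 1\,\mathrm{kg} \times 9.81\,\mathrm{m/s^2} \approx 9.81\,\mathrm{N} \\
\mathrm{FoS} &= \frac{\sigma_{\mathrm{yield}}}{\sigma_{\mathrm{max}}} = 2.2 \\
F_{\mathrm{yield}} &\approx \mathrm{FoS} \times F = 2.2 \times 9.81\,\mathrm{N} \approx 21.6\,\mathrm{N} \;(\approx 2.2\,\mathrm{kg})
\end{aligned}
$$

In other words, the simulated arm would start to yield at roughly 2.2 kg of equivalent load. Printed SLS parts may not match the simulated material exactly, so treat this as an estimate rather than a guarantee.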

TO BE DONE:

I noticed that the arm’s servo collides with the LiDAR scanner; this is an easy fix that will be done next week. The robot’s storage container also needs to be printed and/or redesigned so that it can be laser cut. I have also heard that the camera will be repositioned to the front of the base vehicle, so I need to design a bracket to hold it as well.

Philip Dahl 🔋

Today I continued working on the LiDAR module. Steven provided me with a Darlington transistor array to use as a motor driver, since the motor draws ~60 mA and could damage the controller’s pins if connected directly. We had found a post where someone connected the same type of LiDAR, scavenged from a robot vacuum, and we followed their wiring.

I was not very familiar with the Raspberry Pi, so I first tried to get the sensor running with an Arduino to check whether it was working at all. From a few documents I read, the LiDAR’s TX/RX lines seemed to run at 3.3 V, whereas the Arduino’s pins run at 5 V. I therefore added a voltage divider between the Arduino’s RX line and the LiDAR’s TX line, to prevent any chance of damaging the LiDAR.
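For anyone reproducing the level shifting: an unloaded voltage divider outputs V_out = V_in · R_bottom / (R_top + R_bottom). It only protects a 3.3 V input that is driven by a 5 V output. It therefore belongs on that signal path, with R_bottom going to ground. The 1 kΩ / 2 kΩ pair below is a common example, not necessarily the values on our board.

```python
def divider_output(v_in: float, r_top: float, r_bottom: float) -> float:
    """Unloaded voltage divider: V_out = V_in * R_bottom / (R_top + R_bottom)."""
    return v_in * r_bottom / (r_top + r_bottom)


# Example values (assumed): 1 kOhm from the 5 V output, 2 kOhm from the 3.3 V input to ground.
v_out = divider_output(5.0, 1_000, 2_000)
print(f"{v_out:.2f} V")  # 3.33 V
```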

I got the LiDAR to spin, but I had trouble getting any valid data to show in the serial monitor. Nothing appeared other than the setup text “Starting Lidar Test”. Even with help from the almighty Mr. GPT, I had no luck reading any data.

We have ordered all the parts required to make the battery pack, and it will be assembled as soon as they arrive. We went with a 4S2P (4 series, 2 parallel) pack of 18650 Li-ion cells, rated at 14.4 V and 5200 mAh, to ensure everything is powered sufficiently. This invalidates the simulations I ran in weeks 6 and 7, but we will account for it.
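The pack figures follow from typical Li-ion cell values: cells in series add voltage, and strings in parallel add capacity. The sketch assumes 3.6 V nominal and 4.2 V fully charged per cell. It also assumes a 2600 mAh cell capacity, inferred from the 5200 mAh pack total. These should be checked against the datasheet of the actual cells. The full-charge value marks the top of the supply range the rest of the electronics has to tolerate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cell:
    nominal_v: float = 3.6        # typical Li-ion nominal voltage (assumed)
    full_v: float = 4.2           # typical Li-ion full-charge voltage (assumed)
    capacity_mah: float = 2600.0  # inferred: 5200 mAh pack / 2 parallel strings


def pack_specs(cell: Cell, series: int, parallel: int) -> dict[str, float]:
    """Series cells add voltage; parallel strings add capacity."""
    nominal_v = cell.nominal_v * series
    capacity_mah = cell.capacity_mah * parallel
    return {
        "nominal_v": nominal_v,
        "full_charge_v": cell.full_v * series,
        "capacity_mah": capacity_mah,
        "energy_wh": nominal_v * capacity_mah / 1000.0,
    }


specs = pack_specs(Cell(), series=4, parallel=2)
for name, value in specs.items():
    print(f"{name}: {value:.2f}")
```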

Mikolaj Szczeblewski 🔋

This week we had a serious discussion in the group about the battery, and concluded that the battery we had been using temporarily won’t suffice. Several factors discouraged us from using it further (we even managed to set a cable on fire, but we won’t go into detail about that!). The most important factor, however, was that we simply wanted to build our own, since you learn the most by doing it yourself. We’ve bought two lithium battery cell packs of 4 cells each, which we will configure as 4S2P (4 series, 2 parallel).

We will also be working with fairly high current draws, which breadboards and small jumper cables are not suited for, so we have to stop using them. Going forward, we will move our connections to prototype boards and/or PCBs, if we can fit that into the schedule.

For that, I’ve purchased cables rated for a maximum of 9 A, which will be ideal for supplying the circuit as a whole.

As for the PCB layout I’ve created, I’ve held off on ordering it, since I can’t yet be sure what range the supply voltage will operate in. The battery pack will also be recharged and might be slightly overcharged. To handle this, we will get a BMS (battery management system) with overcharge protection and cell balancing.

Sokaina Cherkane 💻

Implementing the Control System in Code

Once I gained an understanding of the theory and structure of the Jacobian matrix, I proceeded to implement it into code. It is important to note that the servo motors in the robotic arm are controlled by the OpenCM9.04 board (the master controller). The plan is to connect the laptop (and eventually the Raspberry Pi) to the Arduino Mega 2560 board, which will then interface with the OpenCM9.04. The code will be developed in the Arduino IDE, where instructions will be sent from the Arduino to the OpenCM9.04 for execution.

I began coding in C++ for testing and validation purposes. The initial intention was to convert the code to Python once the implementation was fully functional and achieving the desired outcomes. However, after consulting a fellow computer engineering student, we decided it would be more efficient to keep the code in C++, since the Raspberry Pi can compile and run C++ code directly.

The implemented code calculates the Jacobian matrix and uses it to control the movements of the robotic arm in the X and Y directions, as previously discussed in an earlier blog post.

I implemented the mathematical calculations in code with the help of a GitHub repository, which gave me a clearer understanding of how the code should be structured. I then modified it to fit our project. The GitHub repository: https://github.com/kousheekc/Kinematics/blob/master/examples/forward_kinematics/forward_kinematics.ino
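Forward kinematics can be built by chaining transformation matrices. A minimal planar illustration is below. It is written from scratch in Python and is not the repository’s API, which handles full 3D poses. Each joint contributes a rotation by its angle followed by a translation along its segment, and the end-effector position is the translation part of the product. The two-joint arm and the 175 mm segment lengths in the usage line are hypothetical.

```python
import math


def joint_transform(theta: float, length: float) -> list[list[float]]:
    """3x3 homogeneous transform: rotate by theta, then move `length` along the rotated x-axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, length * c],
            [s, c, length * s],
            [0.0, 0.0, 1.0]]


def matmul(A: list[list[float]], B: list[list[float]]) -> list[list[float]]:
    """Plain 3x3 matrix product."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def forward_kinematics(thetas: list[float], lengths: list[float]) -> tuple[float, float]:
    """Chain T = T1 @ T2 @ ... and return the end-effector position (x, y)."""
    T = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    for theta, length in zip(thetas, lengths):
        T = matmul(T, joint_transform(theta, length))
    return T[0][2], T[1][2]


x, y = forward_kinematics([math.radians(30), math.radians(45)], [175.0, 175.0])
print(f"x = {x:.1f} mm, y = {y:.1f} mm")
```

For two joints this reduces to x = l1·cos θ1 + l2·cos(θ1 + θ2) and y = l1·sin θ1 + l2·sin(θ1 + θ2). The matrix form extends more easily to extra joints.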

The Arduino Mega board serves as the main controller and will be connected to an OpenCM 9.04 microcontroller. The OpenCM 9.04, in turn, manages the servo motors that drive the arm’s joints.

Code Functionality Overview

By connecting the Arduino Mega to the OpenCM 9.04, we can execute the kinematics control code and send commands to the servo motors.

This setup allows us to control the arm wirelessly and achieve precise movements based on the Jacobian matrix calculations.

The code initializes the transformation matrices and the Jacobian matrix needed for the kinematics calculations. Using matrix functions, it computes the Jacobian and updates the joint angles based on the target position of the end-effector. The program continuously reads the current position of the arm, calculates the necessary adjustments from the Jacobian matrix, and updates the joint positions to move the end-effector to the desired X and Y coordinates.
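The loop described above can be sketched for a simplified arm with two revolute joints moving in the X-Y plane. This is an illustration under assumptions, not our Arduino code:

- It is written in Python, and the segment lengths and target are hypothetical.
- The "current position" is computed from the joint angles rather than read from the servos.
- Each step uses damped least squares (a common, more robust variant of inverting the Jacobian near singular poses) instead of a plain matrix inverse.

```python
import math


def forward_kinematics(t1: float, t2: float, l1: float, l2: float) -> tuple[float, float]:
    """End-effector (x, y) of a planar two-link arm."""
    return (l1 * math.cos(t1) + l2 * math.cos(t1 + t2),
            l1 * math.sin(t1) + l2 * math.sin(t1 + t2))


def jacobian(t1: float, t2: float, l1: float, l2: float):
    """2x2 Jacobian: rows are d(x)/d(t1, t2) and d(y)/d(t1, t2)."""
    s1, c1 = math.sin(t1), math.cos(t1)
    s12, c12 = math.sin(t1 + t2), math.cos(t1 + t2)
    return ((-l1 * s1 - l2 * s12, -l2 * s12),
            (l1 * c1 + l2 * c12, l2 * c12))


def move_to(target, t1, t2, l1, l2, gain=0.5, tol=0.1, damping=1.0, max_iter=200):
    """Step the joints until the end-effector is within tol of target. Returns (t1, t2, converged)."""
    for _ in range(max_iter):
        # 1. Current position (on the real arm: derived from the servo readings).
        x, y = forward_kinematics(t1, t2, l1, l2)
        ex, ey = target[0] - x, target[1] - y
        if math.hypot(ex, ey) < tol:
            return t1, t2, True
        # 2. Damped least squares: solve (J J^T + damping^2 I) w = gain * e, then dtheta = J^T w.
        (a, b), (c, d) = jacobian(t1, t2, l1, l2)
        m00 = a * a + b * b + damping ** 2
        m01 = a * c + b * d
        m11 = c * c + d * d + damping ** 2
        det = m00 * m11 - m01 * m01  # > 0 because the damped matrix is positive definite
        rx, ry = gain * ex, gain * ey
        w0 = (m11 * rx - m01 * ry) / det
        w1 = (m00 * ry - m01 * rx) / det
        # 3. Update the joint angles (on the real arm: send them to the servos).
        t1 += a * w0 + c * w1
        t2 += b * w0 + d * w1
    return t1, t2, False


# Hypothetical arm: two 175 mm segments, start at (0.3, 0.8) rad, target (200, 150) mm.
t1, t2, ok = move_to((200.0, 150.0), 0.3, 0.8, 175.0, 175.0)
x, y = forward_kinematics(t1, t2, 175.0, 175.0)
print(ok, round(x, 1), round(y, 1))
```

On the real arm, step 3 would send the new angles to the OpenCM 9.04. The loop would then wait for the servos to move before reading the position again.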

Next week:

The upcoming weeks will build on this foundation for the robotic arm’s control system. With the requirements researched and the Jacobian matrix implemented, the arm should be able to move accurately in the 2D plane, as the project specifications require. Adapting the code to the Arduino environment and setting up the hardware with the Arduino Mega and OpenCM 9.04 brings us closer to a fully functional robotic arm that can pick up the ball-toys autonomously. Next steps involve testing and refining the control system to ensure smooth and reliable operation in real-world scenarios.