Mechanical Engineering

Last week was the fall break, and I only worked a few hours on the design of the robot arm.

This week (week 8) I have been working on the design of the robotic arm and the turntable. As I have mentioned before, I ended up making some changes to the initial design of the arm, but the concept is still based on the same idea: the arm will have the “same” function as a human arm. I am still waiting for the arms for the servomotors.

The turntable will let the player choose whether to play the black or the white pieces. It will be driven by a stepper motor and, based on what my group members want, the idea is that it can be operated with a single button or toggle switch that makes the table turn 180 degrees. How the stepper motor will be controlled is not finalized.
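Since the control of the stepper motor is not decided yet, the following is only a rough sketch of the arithmetic behind a 180-degree turn. It assumes a common 1.8-degree stepper (200 full steps per revolution) driving the table directly, without gearing; both values are assumptions, and the function name `steps_for_angle` is made up for this example.

```python
def steps_for_angle(angle_deg, steps_per_rev=200, microsteps=1):
    """Return the number of step pulses needed to rotate by angle_deg.

    steps_per_rev=200 assumes a 1.8-degree stepper with no gearing;
    microsteps is the driver's microstepping factor (1 = full steps).
    """
    return round(angle_deg / 360 * steps_per_rev * microsteps)


# Half a turn of the table with the assumed motor, in full steps.
half_turn = steps_for_angle(180)
print(half_turn)  # 100
```

If the table ends up being driven through a belt or gears, the gear ratio would have to be multiplied in as well.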

Work hours this week: 7.

Liv Marte Olsen – Mechanical engineering.

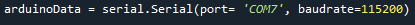

I have now modified the code of the chess algorithm so that it sends the chess coordinates of the machine player's moves from Python to the Arduino, in order to set the servomotor to a specific angle on the Arduino. I imported the serial library, set up a port (COM7), and then transfer the coordinates through the COM port. In the Python chess algorithm:

The render function called here comes from the chess algorithm itself, but I found out that it is this function that returns the coordinates of the move the “machine player” has made. These are the coordinates I have to send to the Arduino so that the arm can carry out the moves physically.
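As an illustration of this hand-off (not the project's actual code), a minimal sketch of sending one move over the serial line could look like the following. It assumes the move is a four-character string such as `e2e4`, that a newline marks the end of each move, and a baud rate of 9600; the function names are made up for this example. Only `serial.Serial` comes from the pyserial library, and it is imported lazily so that the dry run works without an Arduino attached.

```python
import io


def encode_move(move):
    """Turn a move such as 'e2e4' into the bytes sent over the serial line."""
    move = move.strip().lower()
    if len(move) != 4:
        raise ValueError(f"expected 4 characters, got {move!r}")
    # The trailing newline as an end-of-move marker is an assumption.
    return (move + "\n").encode("ascii")


def send_move(port, move):
    """Write one encoded move to any object with a write() method."""
    port.write(encode_move(move))


def open_arduino(port_name="COM7", baud_rate=9600):
    """Open the real serial port (needs pyserial; the baud rate is assumed)."""
    import serial  # imported here so the dry run below works without pyserial
    return serial.Serial(port_name, baud_rate, timeout=1)


# Dry run with an in-memory buffer standing in for the serial port.
fake_port = io.BytesIO()
send_move(fake_port, "e2e4")
print(fake_port.getvalue())  # b'e2e4\n'
```

With the Arduino connected, `send_move(open_arduino(), move)` would send the same bytes through COM7.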

I am now preparing the Arduino code that will control the arm, by setting up the structure and writing a function for each coordinate. Each coordinate must be read so that a specific function runs. Each function also needs to know whether it should pick up or drop the piece, so that it knows when to switch the magnet on or off. The piece is picked up from the first coordinate that comes in (the first 2 characters) and dropped on the second, following coordinate (the last 2 characters). I use the charAt() function to read each coordinate and determine whether to pick from or drop on it. I will finish the code when the arm is ready; the specific servomotor angles for each coordinate then have to be entered in the corresponding function.

I also plan to write a function that removes a piece from its square if the last coordinate (where a piece is to be dropped) is already occupied. For that I need a “remove function” that takes away the piece already standing on the square the new piece is moving to, that is, when one piece captures another, so that the arm does not place a piece on top of another piece. (5 hours)
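The pick, drop and remove logic described above can be sketched in Python for illustration; the Arduino version would read the characters with charAt() instead of slicing. The function names and the `occupied` set are hypothetical and only stand in for however the final code keeps track of the board.

```python
def split_move(move):
    """Split 'e2e4' into the pick-up square and the drop square."""
    if len(move) != 4:
        raise ValueError(f"expected 4 characters, got {move!r}")
    return move[:2], move[2:]


def plan_actions(move, occupied):
    """List the arm actions for one move.

    occupied is a set of squares that currently hold a piece.
    """
    source, target = split_move(move)
    actions = []
    if target in occupied:
        # Capture: clear the target square before placing the new piece.
        actions.append(("remove", target))
    actions.append(("pick", source))  # magnet on at the first coordinate
    actions.append(("drop", target))  # magnet off at the second coordinate
    return actions


print(plan_actions("e4d5", occupied={"e4", "d5"}))
# [('remove', 'd5'), ('pick', 'e4'), ('drop', 'd5')]
```

Doing the removal first keeps the arm from ever holding a piece over an occupied square.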

Kevin Johansen – Computer engineer

This week I have had exams (resits), so I have not spent much time on the course, apart from showing up and talking with the group.

Hossein Sadeghi, Computer science.

Computer Vision

I didn’t complete any work last week since I had two exams that I needed to pass in order to begin my bachelor project next semester.

I did visit Zoran at his workplace to pick up a Raspberry Pi 3B+, which I will test soon. I can’t yet continue working on the new one because Joakim still has the SD card and power supply from the Raspberry Pi 4 I previously had.

I had Covid this week, which is why I was unable to attend class.

Chess pieces

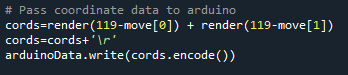

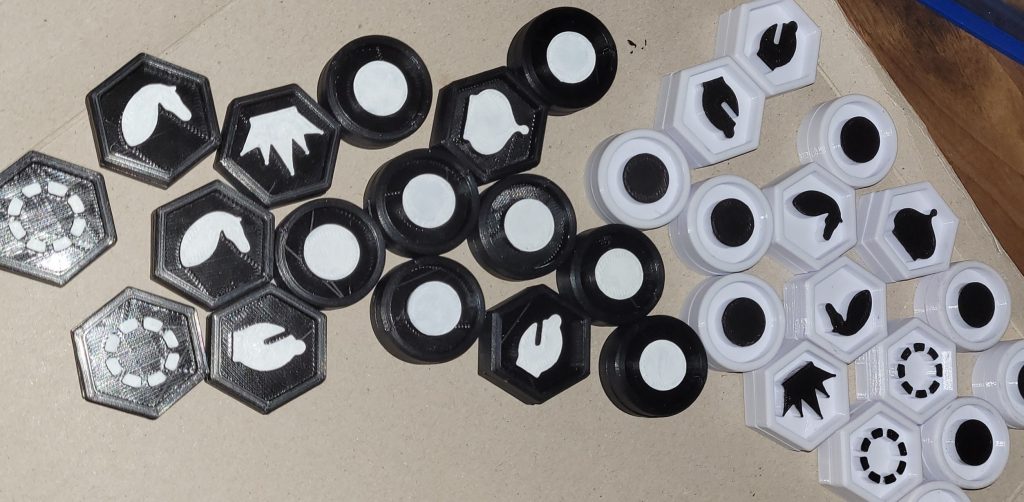

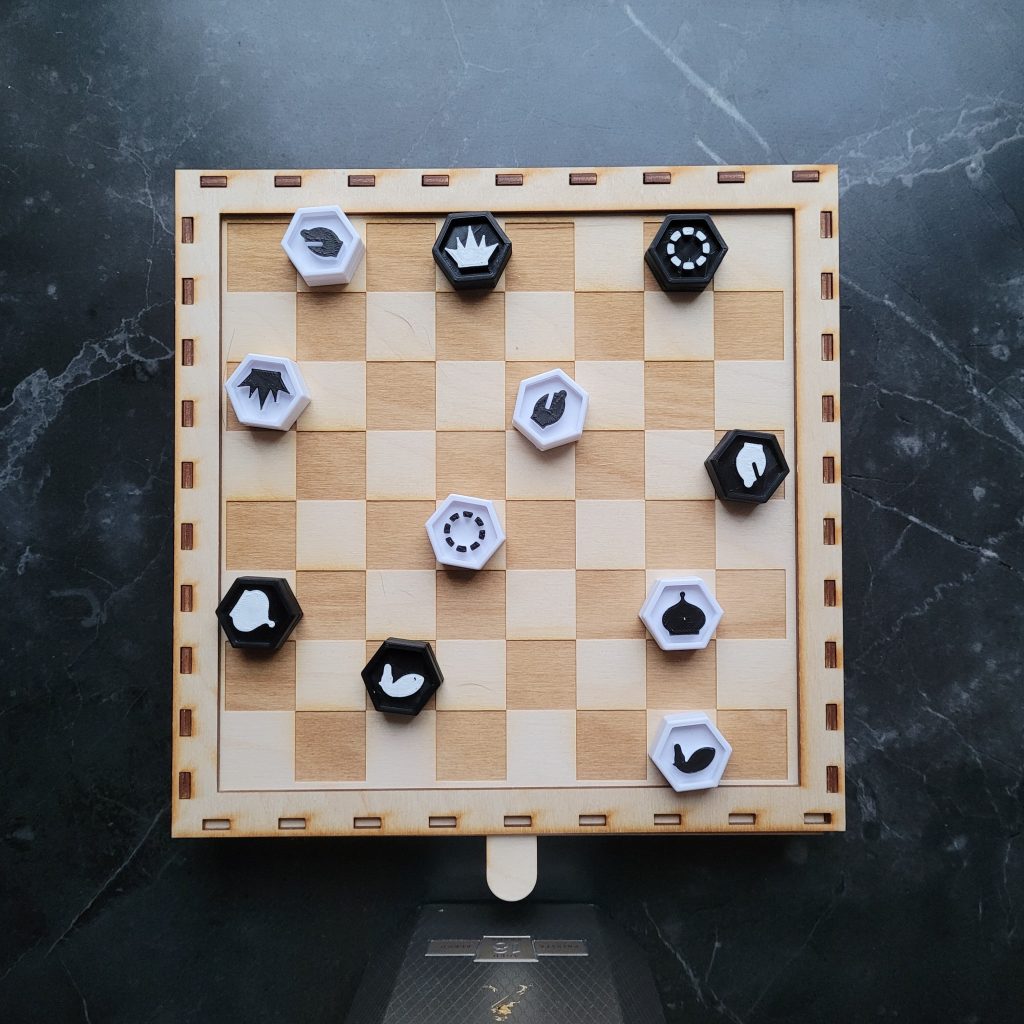

I’ve decided to paint every chess piece so they stand out more to the camera. The black pieces get white symbols, and the white pieces get black symbols. The computer vision model should be more accurate as a result, because the symbols will stand out across a wider range of lighting conditions.

The white pieces wouldn’t be distinguishable if the lighting were too bright, and the same would apply to the black ones in the dark. For the paint to be opaque, I ultimately had to paint the black pieces three times and the white pieces twice. I spent approximately 2.5 hours on this.

Images for dataset

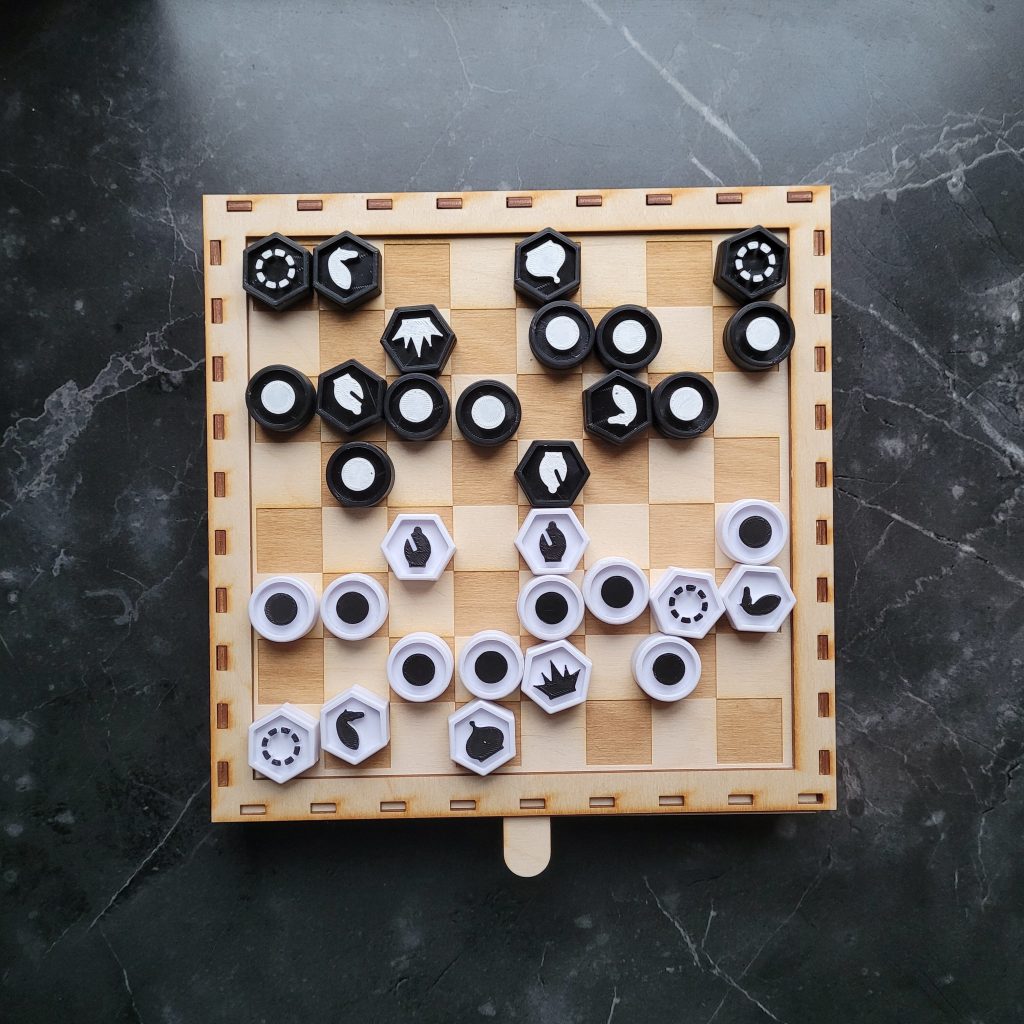

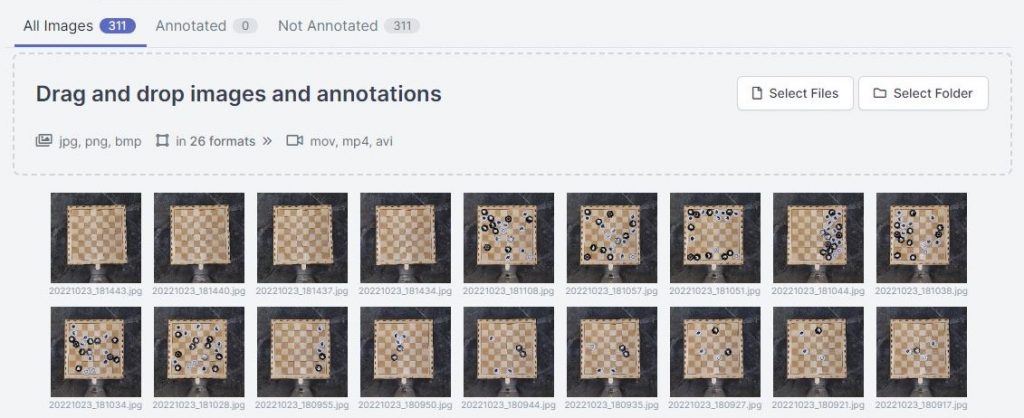

The chess pieces were now ready, so I could begin capturing photos for the dataset. I took more than 300 pictures in a variety of setups. Liv Marte and I determined that the camera should be positioned about 50 cm from the board, which is also the height it will have in the finished setup, so that the images are as close to the final configuration as possible. The model is less likely to predict incorrectly if the training photos resemble what it will ultimately encounter.

The camera is also positioned directly above the board, and the photographs were taken in various lighting conditions so that the model can handle a greater range of lighting. I spent about 2 hours on this part.

Starting the annotation process

I downloaded the finished images to my computer and immediately started planning all the classes the model would need to recognize. I also watched a YouTube video on how best to annotate images, which included making sure to capture the entire object and to keep annotating across any overlaps between classes.

I’ve discovered that it’s really simple to change or remove classes afterwards, so it’s best to create more than you think you’ll need, just in case. Keeping this in mind, I chose the following 14 classes:

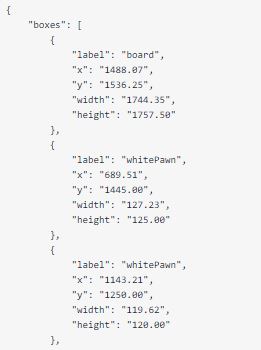

- board

- whitePawn

- blackPawn

- whiteBishop

- whiteKnight

- whiteRook

- blackKnight

- blackBishop

- blackKing

- blackQueen

- blackRook

- whiteKing

- whiteQueen

- flipped
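For training, class names usually have to be mapped to numeric ids. A minimal sketch of such a mapping is shown below. It assumes the ids simply follow the order of the list above, which may differ from what the annotation tool actually assigns; `CLASSES` and `CLASS_TO_ID` are names made up for this example.

```python
# The 14 classes, in the same order as the list above (order is an assumption).
CLASSES = [
    "board", "whitePawn", "blackPawn", "whiteBishop", "whiteKnight",
    "whiteRook", "blackKnight", "blackBishop", "blackKing", "blackQueen",
    "blackRook", "whiteKing", "whiteQueen", "flipped",
]

# Map each class name to a zero-based id.
CLASS_TO_ID = {name: index for index, name in enumerate(CLASSES)}

print(len(CLASSES))            # 14
print(CLASS_TO_ID["flipped"])  # 13
```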

Annotating took me around 4 hours overall, including the earlier preparation and research, to get through 75 of the images with fewer pieces.

Hours on the project this week: 8.5

Marte Marheim, Computer Science