Petter:

Because of my issues with the input system, I talked to Steven about possible solutions, and he gave me new ideas and perspectives.

As a result, I redid the cube completely. After three days of work, compared to four weeks for the old version, I have a working prototype that is frankly better than the old one in every way. Here is a rough outline of my thought process:

First, Steven and I categorised the options:

9 layers × 2 directions (+ and -) = 18 options

27 pieces in total, including the middle piece

If we exclude the middle piece, which can’t be seen and therefore should not be touched:

6 layers have 9 pieces

3 layers have 8 pieces. These are the center layers, and they are the only three layers for which exceptions may be made.
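The counts above can be checked with a few lines of Python. This is only a sketch for verifying the arithmetic, not the Unity code: the coordinate scheme (pieces at integer positions -1, 0, 1 on each axis) and all names are assumptions made for illustration.

```python
from itertools import product

# Pieces of a 3x3x3 cube as integer coordinates in {-1, 0, 1}^3 (assumed scheme).
pieces = list(product((-1, 0, 1), repeat=3))
core = (0, 0, 0)  # the hidden middle piece

# A layer is identified by (axis, coordinate): 3 axes x 3 positions = 9 layers.
layers = [(axis, value) for axis in range(3) for value in (-1, 0, 1)]

# Each layer can turn in two directions (+ and -).
moves = [(layer, direction) for layer in layers for direction in (+1, -1)]


def visible_pieces(layer):
    """Pieces in the given layer, excluding the hidden core."""
    axis, value = layer
    return [p for p in pieces if p[axis] == value and p != core]


sizes = {layer: len(visible_pieces(layer)) for layer in layers}

print(len(pieces), len(layers), len(moves))
print(sorted(sizes.values()))
```

The three layers with coordinate 0 are the center layers; they are the ones that lose a piece when the core is excluded.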

The middle pieces of the faces never change position relative to each other, which means we can use them to identify which pieces are currently in their vicinity.

I’m going to simplify the solution a lot for now, both because it’s very difficult to explain and because I still need to do some more testing, which means it might change. I’ll do a full code walkthrough later. In short:

Give each middle piece a large, layer-sized box collider.

This box collider can be used to gather the surrounding pieces, as well as to register touch.

The remaining pieces (20) are kept in the main parent and are not used until they are needed.
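To illustrate the gathering idea outside Unity, here is a rough Python sketch. It assumes the pieces sit at integer coordinates and models the layer-sized box as an axis-aligned box that is thin along the face normal and wide across it. The function names and box dimensions are hypothetical and stand in for whatever the actual collider setup does.

```python
from itertools import product

# The 26 visible pieces (core excluded), at assumed integer coordinates.
pieces = [p for p in product((-1, 0, 1), repeat=3) if p != (0, 0, 0)]

# Middle pieces of the faces have exactly one non-zero coordinate.
centres = [p for p in pieces if sum(abs(c) for c in p) == 1]


def layer_box(centre, thickness=0.5, reach=1.5):
    """Half-extents of a layer-sized box: thin along the face normal, wide across it."""
    return tuple(thickness if c != 0 else reach for c in centre)


def gather(centre, positions):
    """Return every position that falls inside the box around the given middle piece."""
    half = layer_box(centre)
    return [p for p in positions
            if all(abs(p[i] - centre[i]) <= half[i] for i in range(3))]


layer = gather((1, 0, 0), pieces)
remaining = [p for p in pieces if p not in centres]
print(len(centres), len(layer), len(remaining))
```

Each box picks up the nine pieces of its layer (the middle piece plus its eight neighbours), and the 20 pieces that are not middle pieces are the ones that stay under the main parent until a box collects them.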

For the touch solution:

Movement is only allowed on the last layer parallel to the two others being touched. That layer can be turned by touching it and rotating the hand about any axis (x, y, z) by more than 45 degrees while keeping one finger on the side to be turned. The direction of rotation is decided by whether the change is positive or negative. Alternatively, the user can just tighten the fist and rotate it ±45 degrees.

Parallel layers can be hard-coded, and the layer that is ready to be turned is highlighted.
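The 45-degree rule can be sketched roughly as follows. This is a simplified Python illustration, not the prototype's code: it assumes the hand rotation is available as three Euler angles in degrees, and the function name and the tie-breaking rule (the axis with the largest change wins) are assumptions.

```python
THRESHOLD_DEG = 45.0


def detect_turn(start_angles, current_angles, threshold=THRESHOLD_DEG):
    """Return (axis, direction) once rotation about any axis exceeds the threshold, else None."""
    best = None
    for axis, (a0, a1) in zip("xyz", zip(start_angles, current_angles)):
        # Wrap the difference into [-180, 180) so that 350 -> 40 counts as +50.
        delta = (a1 - a0 + 180.0) % 360.0 - 180.0
        if abs(delta) > threshold and (best is None or abs(delta) > abs(best[1])):
            best = (axis, delta)
    if best is None:
        return None
    axis, delta = best
    # The sign of the change decides the rotational direction.
    return axis, (1 if delta > 0 else -1)


print(detect_turn((0, 0, 0), (10, 50, 0)))
print(detect_turn((0, 0, 0), (30, -44, 10)))
```

A change of exactly 45 degrees does not trigger a turn here, matching the "more than 45 degrees" wording.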

William:

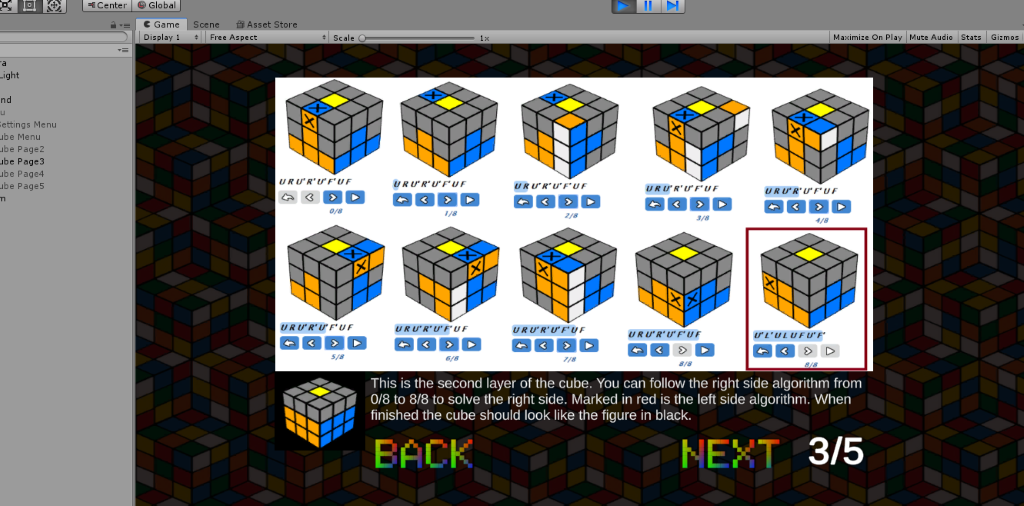

I am now done with the tutorial part in Unity (on how to solve a Rubik’s Cube). The scoreboard is still being worked on.

Herman:

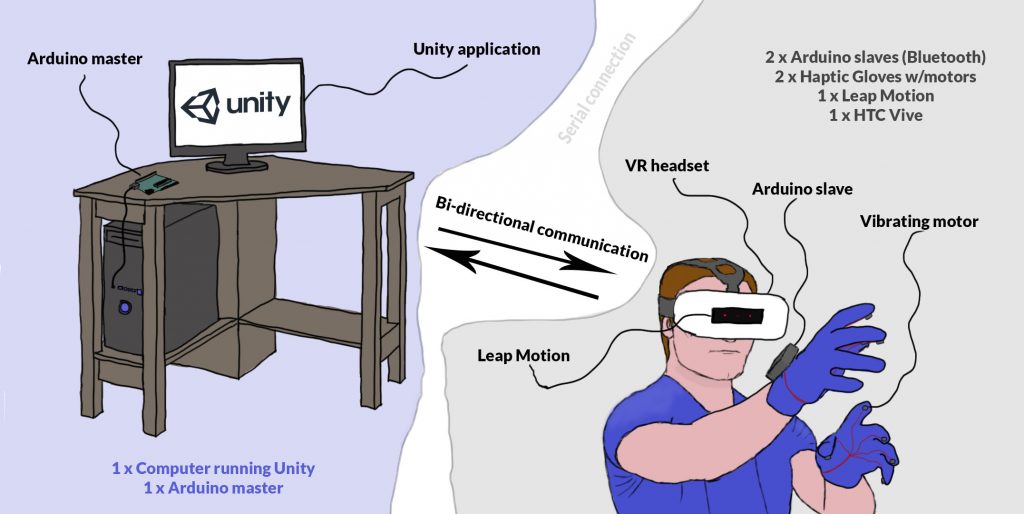

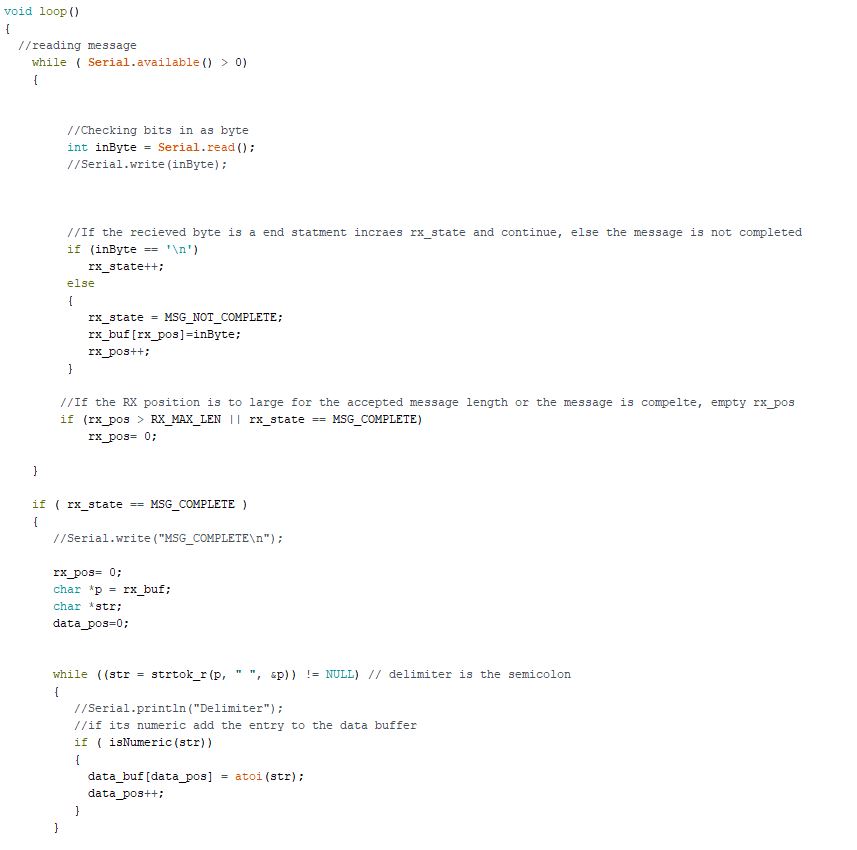

The communication between the master hub and the slaves at the gloves is somewhat complete. For test purposes I have used LEDs in place of the vibration motors. Here the hub parses the information it is given and checks whether it is numeric. It reads the message as bytes and checks for \n as the end bit.
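The read-and-validate step described above might look roughly like this. It is a Python sketch rather than the Arduino code, and the frame layout (ASCII numbers separated by spaces, terminated by a newline) is an assumption, as are the function names.

```python
def read_frame(stream):
    """Collect bytes until a newline; return the bytes before it, or None if the stream ends first."""
    buf = bytearray()
    for byte in stream:
        if byte == ord("\n"):
            return bytes(buf)
        buf.append(byte)
    return None


def parse_numeric(frame):
    """Split a frame into integer fields; return None if any field is not numeric."""
    fields = frame.split()
    if not fields or not all(f.isdigit() for f in fields):
        return None
    return [int(f) for f in fields]


incoming = iter(b"0 3 1\n")  # hypothetical message: start, finger, action
print(parse_numeric(read_frame(incoming)))
```

Rejecting non-numeric fields before acting on them keeps a corrupted message from ever reaching a pin.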

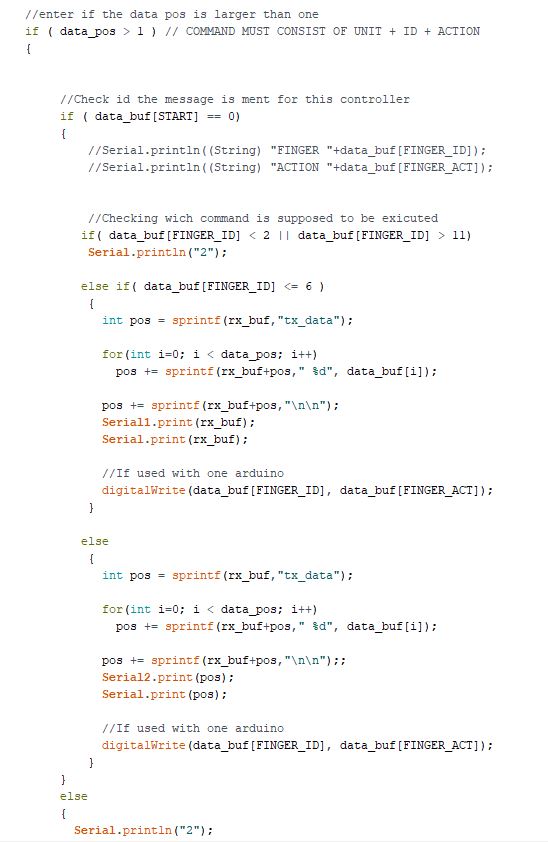

When the system sees the start bit (0), it checks whether the message wants to act on a finger that actually exists. We have numbered the fingers 2-11 after the pins they connect to. So, if the finger belongs to the right hand (2-6), the hub forwards the message to unit 1, and if it belongs to the left hand (7-11), it forwards the message to unit 2.

If an error occurs along the way, it sends an error code back to the Unity script.
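The routing rule can be summarised in a short Python sketch. The field order (start, finger, action) is inferred from the FINGER_ID and FINGER_ACT indices and is an assumption, and the error code values are hypothetical placeholders rather than the codes the hub actually sends.

```python
START = 0
RIGHT_HAND = range(2, 7)    # fingers 2-6 go to unit 1
LEFT_HAND = range(7, 12)    # fingers 7-11 go to unit 2
ERR_NO_START = -1           # hypothetical error codes
ERR_BAD_FINGER = -2


def route(fields):
    """Return ('forward', unit, finger, action) or ('error', code)."""
    if len(fields) != 3 or fields[0] != START:
        return ("error", ERR_NO_START)
    _, finger, action = fields
    if finger in RIGHT_HAND:
        return ("forward", 1, finger, action)
    if finger in LEFT_HAND:
        return ("forward", 2, finger, action)
    return ("error", ERR_BAD_FINGER)


print(route([0, 3, 1]))
print(route([0, 12, 1]))
```

Returning an explicit error tuple mirrors the idea of reporting failures back to Unity instead of silently dropping the message.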

The script for the gloves is based on the same idea, except that instead of forwarding the message it executes the “digitalWrite(data_buf[FINGER_ID], data_buf[FINGER_ACT]);” line.

What remains is to adapt it for a wireless connection and to add more safeguards to ensure that nothing fails during use, such as timeout timers for messages from Unity, checks for motors that are still running when they shouldn’t be, and so on.

Tom Erik:

I assembled and wired up the glove. To save more space, I’ll look into designing a PCB for the circuit.

Daniel:

This week I have continued working on the Unity test application for the haptic gloves. I have enlarged the environment in the main scene by adding both new objects and music. My idea was to create a narrative of being in the American Old West.

To achieve this, I figured that I could carry on with the Low Poly graphics. I upgraded the scene with some assets for a Low Poly Western saloon, added some clouds in the background and imported some music into the scene.

As the user of the application, you are placed in a chair in the saloon. From there, you hear Western music in the background while being able to look around with the VR headset and interact with nearby objects.

To make the experience feel as good as possible, the objects close to the user had to be adjusted for the Leap Motion Rig’s Interaction Behaviour, specifically with regard to each object’s Rigidbody and colliders.

In the upcoming week I will look at the possibility of implementing a functional gun in the scene, as well as updating the menu with graphics and helpful information. So hopefully the project will soon end up much like this illustration: