Had a meeting in the Cave at the University. The electro students had received their components, so we looked at these together. In addition, we went through the Leap Motion device and the HTC Vive.

Petter:

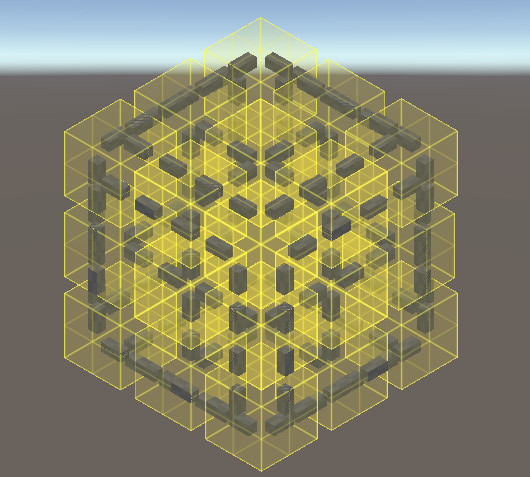

I’ve finished the Rubik’s Cube system, which describes how each piece interacts with the pieces around it. I still need to adjust how the pieces interact with each other, as there are some fun glitches going on, but it’s looking pretty good.

I’m hoping to start work on some custom Rubik’s Cube color designs next week.

Daniel:

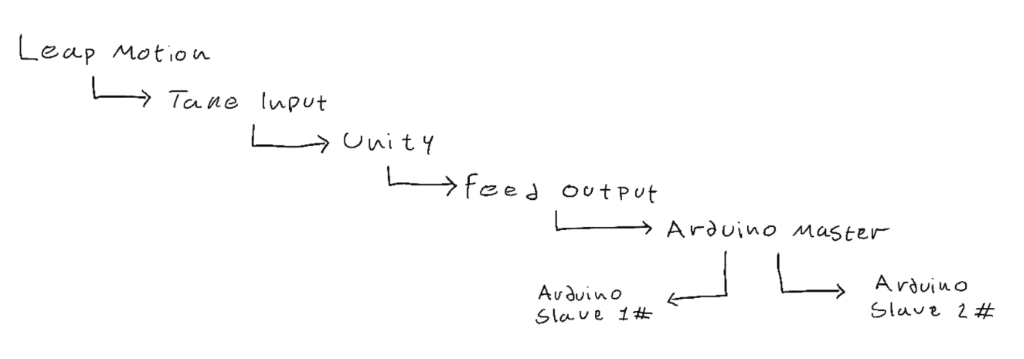

After going through the Leap Motion device in depth, I figured that I could start creating the interface between Unity and the haptic gloves, rather than developing another interaction for the Unity application.

This is something I discussed with the electro students, specifically Herman, who said that he intends to make the haptic gloves wireless. He would use an Arduino master to feed output to two Arduino slaves (one per glove).

I can then try to create a Unity script that reacts to the input from the Leap Motion. Later, that Unity script can feed information to the Arduino master, which will then pass it on to the Arduino slaves.
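As a rough illustration of what the Unity script could send to the Arduino master, here is a minimal sketch in Python. Everything about the message layout is an assumption made for the example, not something we have agreed on with the electro students: the start byte, the glove ID (0 or 1), five intensity values per glove, and the simple checksum are all hypothetical.

```python
START_BYTE = 0xAA  # hypothetical frame marker, not an agreed value


def encode_haptic_frame(glove_id: int, intensities: list[int]) -> bytes:
    """Pack one command for one glove into an 8-byte frame (assumed layout)."""
    if glove_id not in (0, 1):
        raise ValueError("glove_id must be 0 or 1 (one slave per glove)")
    if len(intensities) != 5 or any(not 0 <= v <= 255 for v in intensities):
        raise ValueError("expected five intensity values in the range 0..255")
    payload = bytes([glove_id, *intensities])
    checksum = sum(payload) % 256  # lets the receiver detect corrupted frames
    return bytes([START_BYTE]) + payload + bytes([checksum])


def decode_haptic_frame(frame: bytes) -> tuple[int, list[int]]:
    """Validate a frame and return (glove_id, intensities)."""
    if len(frame) != 8 or frame[0] != START_BYTE:
        raise ValueError("malformed frame")
    payload, checksum = frame[1:7], frame[7]
    if sum(payload) % 256 != checksum:
        raise ValueError("checksum mismatch")
    return payload[0], list(payload[1:])


frame = encode_haptic_frame(1, [0, 64, 128, 255, 10])
print(frame.hex(), decode_haptic_frame(frame))
```

With a fixed-length frame like this, the master only needs to read the glove ID to know which slave to forward the rest of the frame to.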

So for the next few weeks, this is what I will be doing.

Even:

Using Reinforcement learning AI to make a competitive environment

To be able to compete against something or someone solving a Rubik’s Cube, we decided to investigate how to use artificial intelligence to make an opponent.

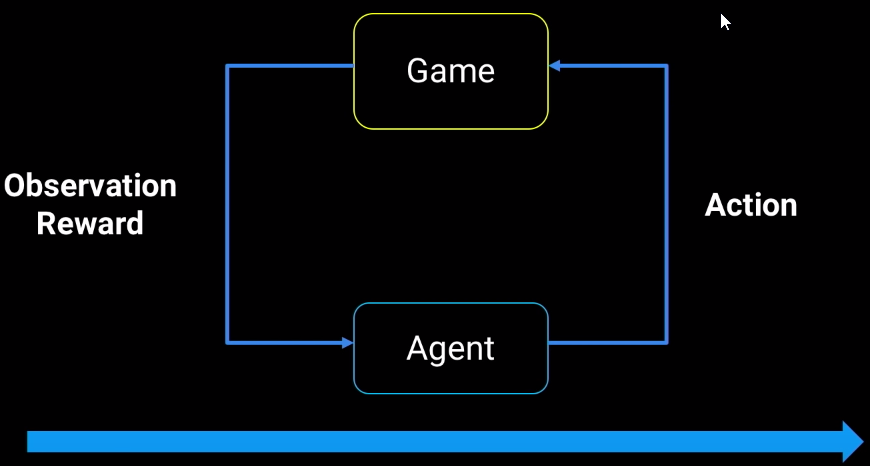

The AI will use reinforcement learning. Reinforcement learning (RL) is a technique used to train the AI to solve a specific problem. The concept is that you reward the AI when it does something positive and penalize it when it does something bad. The AI’s goal is to achieve the highest reward possible.
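To make the reward idea concrete, here is a minimal sketch in Python. The values are assumptions picked for illustration (a small penalty for every move and a reward of 1.0 for a solved cube); we have not settled on real values yet, and this is not ML-Agents code.

```python
def step_reward(solved: bool,
                step_penalty: float = -0.01,
                solved_reward: float = 1.0) -> float:
    """Reward for a single move: penalize every move, reward solving the cube."""
    return solved_reward if solved else step_penalty


def episode_return(solved_flags: list[bool]) -> float:
    """Sum the rewards of one episode, stopping as soon as the cube is solved."""
    total = 0.0
    for solved in solved_flags:
        total += step_reward(solved)
        if solved:
            break
    return total


# Two moves that do not solve the cube, followed by the solving move.
print(episode_return([False, False, True]))
```

Because every extra move costs a little, an agent that maximizes the total reward is pushed towards shorter solutions.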

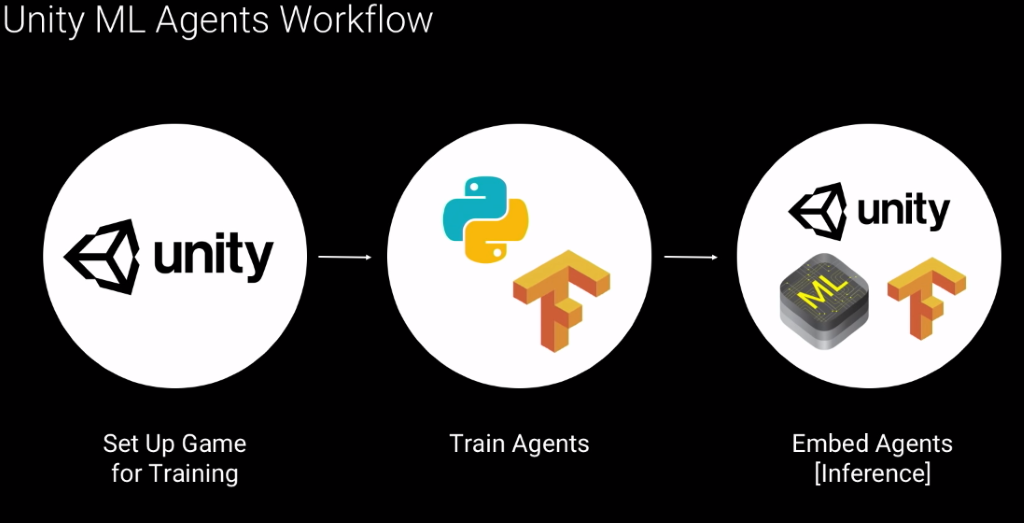

In Unity there is a tool called ML-Agents, which allows you to train the “agents” that will interact with the environment. The general workflow is:

Figure 1 Democratize machine learning: ML-Agents explained – Unite LA

Because our Rubik’s Cube is not finished, we need to train our model on a different (copied) cube.

To ensure that the training goes as fast as possible, we need to define some parameters and milestones. This is what my research time is being used for now. When the parameters and milestones are set, we can start training the agent. This is an iterative process, described in the diagram below.

Figure 2 Democratize machine learning: ML-Agents explained – Unite LA

Actions

The actions describe which moves can be made on the cube at any given time. There are 12 actions in total: 6 sides, each of which can be turned clockwise or counterclockwise.
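The 12 actions can be written out as a small discrete action space. This is a sketch in Python; the face letters and the ordering of the actions are our own choice for illustration, not something dictated by ML-Agents.

```python
from itertools import product

FACES = ["U", "D", "L", "R", "F", "B"]  # up, down, left, right, front, back
DIRECTIONS = ["cw", "ccw"]              # clockwise and counterclockwise quarter turns

# Each discrete action index (0..11) maps to one (face, direction) pair.
ACTIONS = list(product(FACES, DIRECTIONS))


def decode_action(index: int) -> tuple[str, str]:
    """Translate a discrete action index into a face and a turn direction."""
    return ACTIONS[index]


def inverse_action(index: int) -> int:
    """Return the index of the move that undoes the given move.

    The cw and ccw turns of the same face sit next to each other,
    so flipping the lowest bit switches between them.
    """
    return index ^ 1


print(len(ACTIONS), decode_action(0), decode_action(inverse_action(0)))
```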

States

The 3x3x3 cube has 43 252 003 274 489 856 000 possible combinations, or ≈43 quintillion. This means that brute forcing the cube (running through all the combinations) is not a feasible solution.
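The number above comes from the standard counting argument for the 3x3x3 cube, which can be checked with a few lines of Python. The checking rate of one million states per second at the end is a made-up figure, only there to show the scale.

```python
from math import factorial

# 8 corners can be permuted freely; each has 3 orientations,
# but the orientation of the last corner is fixed by the other seven.
corner_arrangements = factorial(8) * 3**7

# 12 edges can be permuted freely; each has 2 orientations,
# but the flip of the last edge is fixed by the other eleven.
edge_arrangements = factorial(12) * 2**11

# Only half of the combined permutations are reachable (parity constraint).
total_states = corner_arrangements * edge_arrangements // 2
print(f"{total_states:,}")

STATES_PER_SECOND = 1_000_000          # hypothetical brute-force speed
SECONDS_PER_YEAR = 365 * 24 * 60 * 60
years = total_states / (STATES_PER_SECOND * SECONDS_PER_YEAR)
print(f"about {years:,.0f} years to visit every state")
```

Even at that rate, visiting every state would take well over a million years, which is why we want an agent that learns instead.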

Going forward

The plan for the coming weeks is to set up the plugin and configure it in Unity. We need to start learning how to use the plugin and start training less sophisticated models for learning purposes. Once we have a good understanding of how it works, we can either train it on our own cube, if it’s finished, or train it on another cube to keep the process going.

Herman and Tom Erik:

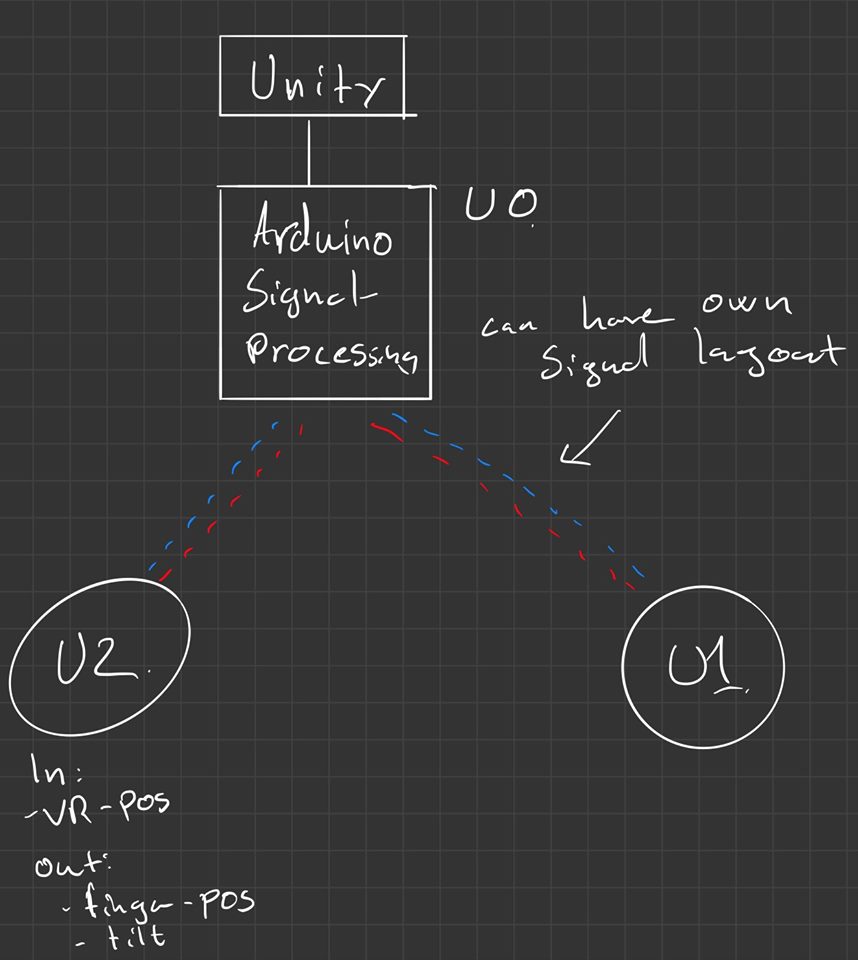

The communication between Unity and the gloves will be split across three units. The first is the hub, which opens the communication between Unity and the gloves and processes the data so that the hub and the gloves speak the same “language”. The other two units are the processing units on the gloves, which translate the signals from the hub into actions and turn measurement data into a transmittable signal to send back to the hub. These units will also do most of the calculations of the finger position from the flex sensors and the IMU on the glove.
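As an example of the kind of calculation the glove units could do, here is a minimal sketch in Python of turning a raw flex sensor reading into a bend value between 0 and 1. The calibration readings are hypothetical; the real values depend on the sensors and the circuit, and the real code would run on the Arduino rather than in Python.

```python
def bend_fraction(raw: int, raw_flat: int, raw_bent: int) -> float:
    """Map a raw sensor reading to 0.0 (finger flat) .. 1.0 (finger fully bent).

    raw_flat and raw_bent are calibration readings taken with the finger
    stretched out and fully bent. Readings outside that range are clamped.
    """
    span = raw_bent - raw_flat
    if span == 0:
        raise ValueError("calibration readings must differ")
    fraction = (raw - raw_flat) / span
    return min(1.0, max(0.0, fraction))


# Hypothetical calibration: 300 when flat, 700 when fully bent.
print(bend_fraction(500, raw_flat=300, raw_bent=700))
```

Sending a normalized value like this to the hub, instead of the raw reading, would keep the calibration details on the glove.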

To replace the Leap Motion camera, we originally looked into replicating the Vive’s tracking system on the glove, but quickly realized that this would pose too big a challenge and take too much time for this iteration of the glove. Instead, we will attach the Vive controller’s tracking module to the back of the palm and use the Vive’s tracking system that way.

William:

The main menu user interface is being worked on. My plan is to finish the menu part of the interface and make it more suitable for our purpose. I am also going to work with Even on the reinforcement learning part. Looking forward, we have a big task in implementing and configuring reinforcement learning in Unity. We need to make a detailed overview of the learning part (how we want it to communicate, and so on).