A virtual meeting was held on Microsoft Teams on Thursday at 10:40 and lasted over an hour. At the meeting it was decided that the electro students should continue developing the glove, begin finalizing which components to use and design the necessary circuits. The computer students decided to continue working independently on their chosen areas of the project.

Petter (Computer engineering)

Got Leap Motion hand tracking working together with the Vive headset. There are problems with the Leap Motion graphics program, but we think we can work around them by keeping what works and applying Unity skins on top.

Made some prototypes of the Rubik's cube: they spin, the sides move and, with a good deal of concentration, the cube is solvable. However, the calibration seems to need extremely fine tuning to give us the behaviour we are looking for, because the margin between pieces that barely move and pieces that shoot off into space is next to nothing. Creating a smooth Rubik's cube is challenging in real life, and it very much is in Unity as well.
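
As a possible complement to tuning the physics, face turns can also be tracked as discrete logical moves, so the cube's state stays consistent no matter how the pieces move visually. The sketch below is a minimal C illustration under assumed conventions: each cubie sits at integer coordinates in {-1, 0, 1}, and a quarter turn rotates positions by +90° about one axis (right-hand rule). `Cubie`, `quarter_turn` and `turn_layer` are hypothetical names, not part of the current prototype, and the orientation of each cubie's stickers is left out.

```c
#include <stdbool.h>

/* Hypothetical cubie position on a 3x3x3 grid; each coordinate is -1, 0 or 1. */
typedef struct { int x, y, z; } Cubie;

typedef enum { AXIS_X, AXIS_Y, AXIS_Z } Axis;

/* True if the cubie lies in the layer being turned (layer 1 on AXIS_X is the +x face). */
static bool in_layer(Cubie c, Axis axis, int layer) {
    switch (axis) {
        case AXIS_X: return c.x == layer;
        case AXIS_Y: return c.y == layer;
        default:     return c.z == layer;
    }
}

/* Rotates a cubie position by +90 degrees about the given axis (right-hand rule). */
static Cubie quarter_turn(Cubie c, Axis axis) {
    Cubie r = c;
    switch (axis) {
        case AXIS_X: r.y = -c.z; r.z = c.y; break;
        case AXIS_Y: r.z = -c.x; r.x = c.z; break;
        case AXIS_Z: r.x = -c.y; r.y = c.x; break;
    }
    return r;
}

/* Applies one quarter turn of a single layer to all 27 cubies. */
static void turn_layer(Cubie cubies[27], Axis axis, int layer) {
    for (int i = 0; i < 27; i++) {
        if (in_layer(cubies[i], axis, layer)) {
            cubies[i] = quarter_turn(cubies[i], axis);
        }
    }
}
```

Snapping the animated rotation to the nearest quarter turn once the player lets go, and only then calling `turn_layer`, is one way to keep the visual cube and the logical state in agreement.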

Daniel (Computer engineering)

Gave a quick demonstration of how to set up projects in GitHub.

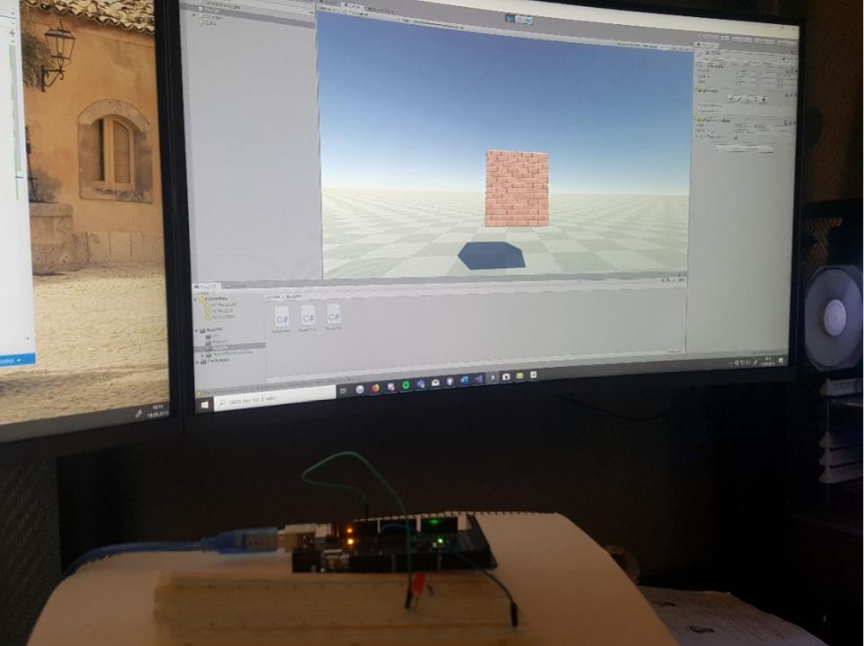

Started developing an interface between the gloves (Arduino C) and Unity (C#). Managed to connect the Arduino to the Unity platform without much trouble. Had some minor issues with the API compatibility level (possibly caused by my Unity client), but fixed them after some troubleshooting with the Unity settings, a manual install of the SDK and the Arduino program/IDE. At the moment, the connection between the Arduino and Unity runs on the Unity project I made in week 37, so it is simply a simulation of how we can integrate the haptic gloves as an output device for Unity. In this case, the Unity project is a simple scene where the player can move around and destroy a block by clicking on it. When the player destroys the block, the Arduino sets an LED high.
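
The LED test can be described as a tiny one-byte protocol between Unity and the Arduino. The sketch below assumes, hypothetically, that Unity writes the character `'1'` when the block is destroyed and `'0'` to reset it. The decision logic is plain C so it can be tested off the board, and the comment shows roughly how an Arduino `loop()` could call it using `Serial.available()`, `Serial.read()` and `digitalWrite()`. `led_state_after`, `ledOn` and `LED_PIN` are illustrative names.

```c
#include <stdbool.h>

/* Hypothetical one-byte protocol: Unity writes '1' when the block is destroyed, '0' to reset. */
#define CMD_LED_ON  '1'
#define CMD_LED_OFF '0'

/* Returns the new LED state for a received byte; unknown bytes keep the current state. */
static bool led_state_after(int received, bool current) {
    if (received == CMD_LED_ON)  return true;
    if (received == CMD_LED_OFF) return false;
    return current; /* ignore noise, newlines, or -1 ("no data") */
}

/*
 * On the Arduino, the loop could look roughly like this (LED_PIN is hypothetical):
 *
 *   void loop() {
 *     if (Serial.available() > 0) {
 *       ledOn = led_state_after(Serial.read(), ledOn);
 *       digitalWrite(LED_PIN, ledOn ? HIGH : LOW);
 *     }
 *   }
 */
```

Reading single bytes as they arrive keeps the sketch from blocking. By contrast, `Serial.readString()` waits for the stream timeout (1000 ms by default) before returning, so if the current sketch reads that way, it may be worth checking when investigating latency.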

Furthermore, there is a slight delay between breaking the block in-game and the LED lighting up. My suspicion is that this is because the Unity project hasn't been built yet, but I need to investigate that.

In addition, I started researching how to distinguish the two gloves (left and right) and the individual fingers in the Arduino output. In the case above, a single LED reacting to a single user input is not enough. We need the Unity program to "understand" which hand and which finger(s) are performing an action in the application, transfer that signal to the Arduino, and have the Arduino pass it on to the gloves, so that the haptic gloves respond accordingly. For example, if you touch something with the index finger of the right-hand glove, the index finger of the right glove should vibrate, and nothing else should be triggered.
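
To make the hand and finger explicit in what Unity sends, each touch event could be packed into a single byte. The layout here is purely an assumption for illustration: bit 3 selects the hand, bits 0-2 hold the finger index (0 = thumb through 4 = little finger) and the upper four bits must be zero. The decoder rejects any byte that does not fit this layout, so only a valid hand/finger pair can trigger a vibration. All type and function names are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

typedef enum { HAND_LEFT = 0, HAND_RIGHT = 1 } Hand;
typedef enum {
    FINGER_THUMB = 0, FINGER_INDEX = 1, FINGER_MIDDLE = 2,
    FINGER_RING = 3, FINGER_LITTLE = 4
} Finger;

/* Hypothetical layout: bit 3 = hand, bits 0-2 = finger, bits 4-7 must be zero. */
static uint8_t encode_touch(Hand hand, Finger finger) {
    return (uint8_t)(((unsigned)hand << 3) | (unsigned)finger);
}

/* Decodes a byte; returns false for anything that is not a valid hand/finger pair. */
static bool decode_touch(uint8_t msg, Hand *hand, Finger *finger) {
    unsigned f = msg & 0x07u;
    if ((msg & 0xF0u) != 0 || f > (unsigned)FINGER_LITTLE) {
        return false; /* reserved bits set or finger index out of range */
    }
    *hand = (msg & 0x08u) ? HAND_RIGHT : HAND_LEFT;
    *finger = (Finger)f;
    return true;
}
```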

William (Computer engineering)

Started R&D on the UI, examining the use of hand gestures to control it.
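
A common gesture for driving UI is a pinch between thumb and index finger. The sketch below assumes fingertip positions in millimetres and uses two hypothetical thresholds, 25 mm to start a pinch and 35 mm to release it. The gap between them (hysteresis) keeps the gesture from flickering when the fingers hover near a single threshold, and comparing squared distances avoids a square root. `Vec3` and `update_pinch` are illustrative names, not part of any SDK.

```c
#include <stdbool.h>

typedef struct { float x, y, z; } Vec3; /* fingertip position, assumed in millimetres */

/* Hypothetical thresholds; the gap between them provides hysteresis. */
#define PINCH_ON_MM  25.0f
#define PINCH_OFF_MM 35.0f

static float squared_distance(Vec3 a, Vec3 b) {
    float dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return dx * dx + dy * dy + dz * dz;
}

/* Updates the pinch state for one frame from thumb and index fingertip positions. */
static bool update_pinch(bool pinching, Vec3 thumb_tip, Vec3 index_tip) {
    float d2 = squared_distance(thumb_tip, index_tip);
    if (!pinching && d2 < PINCH_ON_MM * PINCH_ON_MM) return true;
    if (pinching && d2 > PINCH_OFF_MM * PINCH_OFF_MM) return false;
    return pinching; /* inside the hysteresis band: keep the previous state */
}
```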

Even (Computer engineering)

Struggled to create UML diagrams for the Unity part of the project. Continuing with UML, but making it more activity-based. Started coding the test section of the project to verify the concept (a simple demo).

Tom Erik and Herman (Electro engineering)

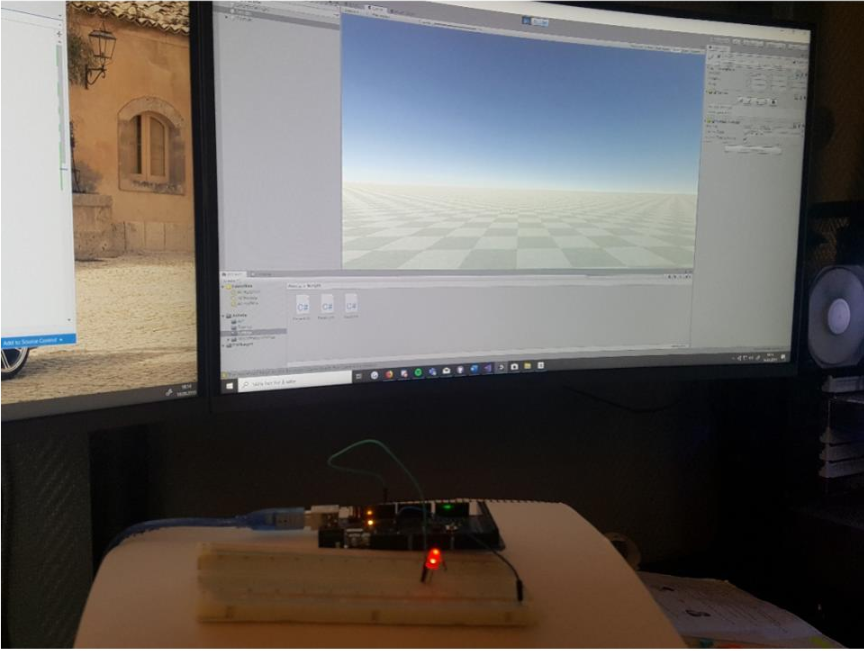

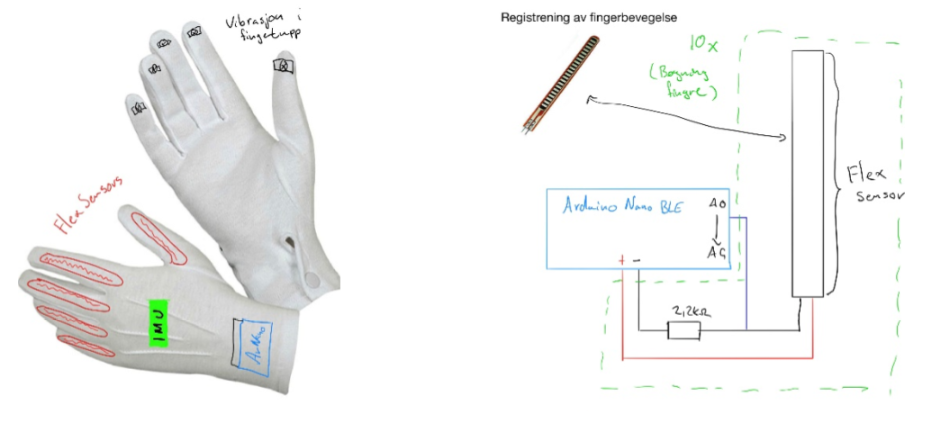

In the electronics part of the project, we continued researching different methods for tracking finger movement, physical feedback from Unity to the real-life glove, communication between the PC and the gloves, and the Vive tracking system. We also started designing a rough layout of the circuits and components.

We looked at several methods for tracking finger movement. The current solution is a Leap Motion camera. We would like to add some form of tracking on the glove itself, so that the fingers can be tracked even when they are out of the camera's line of sight. So far, we have looked at flex sensors, strain gauges and IMUs.

Flex sensors and strain gauges are components whose resistance changes as they are compressed or stretched. If they were placed over each finger or each joint, we could measure these changes and determine the position of each finger.
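
For a flex sensor, a common way to measure the resistance is a voltage divider read by the microcontroller's ADC. The sketch assumes the sensor is connected between the supply and an analog pin, with a fixed resistor from that pin to ground, and a 10-bit ADC such as the one behind `analogRead()` on an Arduino Uno. All resistance values are placeholders that would have to be calibrated per sensor, and the mapping from resistance to bend angle is assumed to be linear.

```c
/* Hypothetical calibration values; real ones must be measured for each sensor. */
#define R_FIXED_OHM   47000.0 /* divider resistor from the analog pin to GND */
#define R_FLAT_OHM    25000.0 /* sensor resistance with the finger straight */
#define R_BENT_OHM   100000.0 /* sensor resistance at 90 degrees of bend */
#define ADC_MAX        1023.0 /* full-scale reading of a 10-bit ADC */

/* Converts the ADC reading at the divider midpoint into the sensor's resistance. */
static double flex_resistance(int adc) {
    if (adc <= 0) return -1.0; /* open circuit or wiring fault */
    /* Vout/Vcc = R_fixed / (R_flex + R_fixed)  =>  R_flex = R_fixed * (Vcc/Vout - 1) */
    return R_FIXED_OHM * (ADC_MAX / adc - 1.0);
}

/* Linearly maps a resistance to a bend angle in degrees, clamped to 0..90. */
static double bend_angle_deg(double r) {
    double angle = 90.0 * (r - R_FLAT_OHM) / (R_BENT_OHM - R_FLAT_OHM);
    if (angle < 0.0) angle = 0.0;
    if (angle > 90.0) angle = 90.0;
    return angle;
}
```

Strain gauges change resistance far less than flex sensors, so they are usually read through a Wheatstone bridge and an amplifier rather than a plain divider.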

An IMU (inertial measurement unit) is an electronic device consisting of an accelerometer and a gyroscope, which measure an object's acceleration and angular rate; from these, its orientation can be estimated. With one or two IMUs on each finger and one on the back of the hand, the movements of the hand could then be calculated.
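
Integrating gyroscope data gives a smooth angle that drifts over time, while the tilt computed from the accelerometer is noisy but does not drift, so the two are commonly fused. One simple technique for this is a complementary filter, shown here for a single axis as a sketch. It is not the only option (a Kalman filter is another), and the weight `ALPHA = 0.98` and the function name are assumptions.

```c
/* Hypothetical filter weight: trust the gyroscope short-term, the accelerometer long-term. */
#define ALPHA 0.98

/*
 * One complementary-filter step for a single axis (e.g. pitch of the back of the hand).
 *   angle_deg        previous estimate in degrees
 *   gyro_rate_dps    gyroscope angular rate in degrees per second
 *   accel_angle_deg  tilt from the accelerometer, e.g. atan2 of two axes in degrees,
 *                    depending on how the sensor is mounted
 *   dt_s             time since the previous step in seconds
 */
static double complementary_step(double angle_deg, double gyro_rate_dps,
                                 double accel_angle_deg, double dt_s) {
    double gyro_angle = angle_deg + gyro_rate_dps * dt_s; /* integrate angular rate */
    return ALPHA * gyro_angle + (1.0 - ALPHA) * accel_angle_deg;
}
```

With a stationary hand (zero angular rate), the estimate converges towards the accelerometer angle, while fast movements are followed mainly through the gyroscope term.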

We decided to combine both technologies to optimize the gloves' accuracy: strain gauges over each joint of each finger, or a flex sensor on each finger, plus an IMU on the back of the hand. This would make it possible to track the hand's orientation and angular rate as well as the finger movements. The next step is to test this.

The physical feedback in the glove is the same as last week: a small vibration motor on each finger. We are still looking for other options that feel closer to real life.

Another goal is to make the gloves wireless. Some ideas here are to use microcontrollers that support BLE (Bluetooth Low Energy) or to build our own communication system. This will be looked at more closely later.

Finally, we looked at the tracking system of the Vive's handheld controllers. Most VR headsets use an "anchor", an IR camera that communicates with the computer; the system treats the anchor as point zero and maps out a coordinate system from it. The Vive, on the other hand, uses one or more "lighthouses" that sweep IR laser light across the room. A synchronization signal is emitted before each sweep, telling each tracked unit to recalculate its position. The lighthouses are not connected to a PC and repeat this continuously while they are on. The position is calculated in each tracked device from the geometry of the received IR light. This allows a larger area to be used when moving in VR. So far, all the other products we have seen on the market use the handheld controllers or Vive Trackers to track the hand's position, so we will not prioritize this for now.
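
The timing idea behind the lighthouses can be illustrated with one conversion: a tracked device measures the time from the sync signal until a sweep hits one of its photodiodes, and that time is proportional to the rotor angle at the moment of the hit. The sketch assumes a rotor speed of 60 revolutions per second, the figure usually cited for first-generation base stations, and ignores per-station calibration offsets. The full pose comes from combining such angles from horizontal and vertical sweeps across many photodiodes. `sweep_angle_deg` is a hypothetical name.

```c
/* Assumption: first-generation base station rotor spinning at 60 revolutions per second. */
#define ROTOR_HZ 60.0

/*
 * Converts the time between the sync signal and the sweep hitting a photodiode
 * into the rotor angle, in degrees, at which that photodiode was hit.
 */
static double sweep_angle_deg(double t_since_sync_s) {
    double period_s = 1.0 / ROTOR_HZ; /* one full rotation */
    return 360.0 * (t_since_sync_s / period_s);
}
```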