Virtual meeting at 10:00 AM on Thursday through Microsoft Teams. The group decided to split into a computer group and an electro group for this week. The computer students will focus on setting up a Unity environment for VR, while also learning how we can take advantage of the software.

The Unity application will be accompanied by an HTC Vive, Leap Motion tracking and the Haptic gloves, all of which will be connected in a later session. For now, the computer students have to lay a foundation for how we are going to connect all these technologies together.

So far, we have had the opportunity to borrow a Leap Motion device and an HTC Vive from the University, so one of the first challenges this week is to get those set up. However, the computer students do not have to focus solely on the hardware in order to make progress. Since Unity has good built-in support for VR devices, the computer students can already start to outline an application, including the scenes, user interface and interactions.

For the electro students, the task is to design and make two functional Haptic gloves. The purpose of the gloves is to let you feel (in the physical world) when you touch something inside the Unity application. To achieve this, the gloves must give some sort of feedback to the hands when something in the application is touched. This is made possible by placing small vibration motors at the tip of each finger, which are in turn controlled by a microcontroller that constantly listens to the position of the hands in Unity.
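The feedback idea can be sketched as a mapping from how far a fingertip has pressed into a virtual object to a motor strength. This is only a minimal sketch under our own assumptions: the function names, the 1 cm saturation depth and the finger ordering are hypothetical, and the 0 to 255 range is chosen to match an 8-bit PWM duty value such as the one Arduino's `analogWrite` takes by default.

```python
# Hypothetical finger order; the real gloves may wire the motors differently.
FINGERS = ("thumb", "index", "middle", "ring", "pinky")


def motor_duty(penetration_m: float, max_depth_m: float = 0.01) -> int:
    """Map fingertip penetration depth (metres) to an 8-bit PWM duty (0-255).

    max_depth_m is an assumed depth at which the motor reaches full strength.
    """
    if penetration_m <= 0.0:
        return 0  # fingertip is not touching anything
    ratio = min(penetration_m / max_depth_m, 1.0)  # saturate at full strength
    return int(ratio * 255)


def hand_frame(penetrations: dict) -> list:
    """Build one duty value per finger; fingers without contact get 0."""
    return [motor_duty(penetrations.get(finger, 0.0)) for finger in FINGERS]


if __name__ == "__main__":
    # Index finger pressed well into an object, the others free.
    print(hand_frame({"index": 0.02}))
```

In this sketch Unity would compute the penetration depths and the microcontroller would only apply the resulting duty values, which keeps the glove firmware simple.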

The current solution for seeing the hands and matching their position in VR is to use a Leap Motion camera that tracks the position of the hands. The Leap Motion camera would no longer be necessary if the gloves themselves could track the position of the hands and fingers, which is theoretically possible through sensors placed in some or all joints of the glove.
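To illustrate why joint sensors could in principle replace the camera, the sketch below computes a fingertip position from joint bend angles using simple planar forward kinematics. It is a simplified model under our own assumptions: the finger is treated as three rigid segments bending in one plane, and the segment lengths are made-up example values, not measurements from our gloves.

```python
import math


def fingertip_position(joint_angles_deg, segment_lengths_m):
    """Return (x, y) of the fingertip relative to the knuckle.

    x points along the straight finger, y points away from the palm,
    so bending (flexion) moves the tip towards negative y.
    """
    x = 0.0
    y = 0.0
    total_angle = 0.0
    for angle_deg, length in zip(joint_angles_deg, segment_lengths_m):
        total_angle += math.radians(angle_deg)  # bend accumulates along the finger
        x += length * math.cos(total_angle)
        y -= length * math.sin(total_angle)
    return x, y


if __name__ == "__main__":
    lengths = (0.040, 0.025, 0.020)  # assumed segment lengths in metres
    print(fingertip_position((0, 0, 0), lengths))     # straight finger
    print(fingertip_position((30, 45, 20), lengths))  # partly curled finger
```

A real glove would also need the position and orientation of the hand itself, which is where an IMU or external tracking would come in.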

For the next week, the group should take full advantage of the GitHub repository for the project. With GitHub we will be able to track the technical progress better and use a Kanban/Scrum sprint system, which is important now that we’ve done our research and are ready to start development at full scale.

Daniel:

Went into Unity to explore the possibilities we have for creating a VR application, specifically the parts about creating a scene and a user interface. A goal for the scene would be to create a terrain with textures and materials that look appealing. For example, one could create a fantasy terrain that locks the player into a small section of the scene, and then place the interaction in that small section. That way, the player would experience an interesting environment that also limits the player's movement. The reason for this is simply to draw attention to the interaction, rather than the movement of the player.

The image above illustrates a white box (player) and a Unity scene with a fantasy terrain. The player is locked onto the circular platform and cannot move outside it, since everything outside the platform is lava and spiky mountains. This might be an ideal environment for the player, because we can place the interaction (e.g. a Rubik's cube) on the platform, which should immediately draw attention to the main objective of the application. As a side note, adding a simple sprite to this scene should also eliminate the unappealing greyish background seen in the image.
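The terrain itself can keep the player on the platform, but the same restriction could also be enforced in a script. The sketch below shows the underlying calculation: if the player ends up outside the circle, the position is pulled back to the edge. The function name, the platform centre and the radius are assumptions made for illustration; in Unity the same idea would be written in C# on the player's position.

```python
import math


def clamp_to_platform(x, z, center=(0.0, 0.0), radius=5.0):
    """Keep a ground position (x, z) inside a circular platform.

    center and radius are assumed example values for the platform.
    """
    dx = x - center[0]
    dz = z - center[1]
    distance = math.hypot(dx, dz)
    if distance <= radius:
        return (x, z)  # already on the platform, nothing to do
    scale = radius / distance  # shrink the offset so it ends on the edge
    return (center[0] + dx * scale, center[1] + dz * scale)


if __name__ == "__main__":
    print(clamp_to_platform(1.0, 2.0))   # inside, unchanged
    print(clamp_to_platform(10.0, 0.0))  # outside, moved back to the edge
```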

While creating this scene I also wrote two small scripts, one for the camera (first-person) and one for the player, so that one can easily move around, look and interact with the environment. I did this intentionally to make it possible to interact with the environment before the VR device and Haptic gloves have been implemented. The textures and materials seen in the image were imported from the Unity Asset Store as free assets. Fortunately for us, Unity has a great selection of high-quality assets that are also free.

A user interface for the application should also serve a useful purpose. With a user interface, one would have the option to customize the settings of the application and choose an interaction and/or scene, although the latter is more about aesthetics than anything else.

William:

Worked with Unity testing and exploring effects, visualization and animations. Checked out several tutorials and ideas that our group can potentially implement into our system. For next week I will continue working with Unity and C# to gain more knowledge and figure out what we can potentially implement/create.

Tom Erik:

Had a meeting with Herman at Uni, where we detailed a rough layout of the sensor and feedback motor positions. We further researched different IMUs and settled on initially placing one IMU on each hand.

Further research went into different kinds of sensors, and ways to give the user feedback from VR space through different means.

We are looking at different technologies to track the hands' position. So far, our current options are:

- Visual camera tracking, e.g. Leap Motion

- Echo location (very unlikely)

- Several IMUs connected to the fingers

- Stretch sensor / strain gauge

- IR tracking

- 3G grid or directional

Each method has its drawbacks, which we’ll spend more time looking into in the coming week.

For feedback, we are considering whether to use vibration, or create a pushing sensation somehow, perhaps with a solenoid.

Even:

Started development of UML diagrams.

- Use case (complete)

- Sequence diagram (not finished)

Petter:

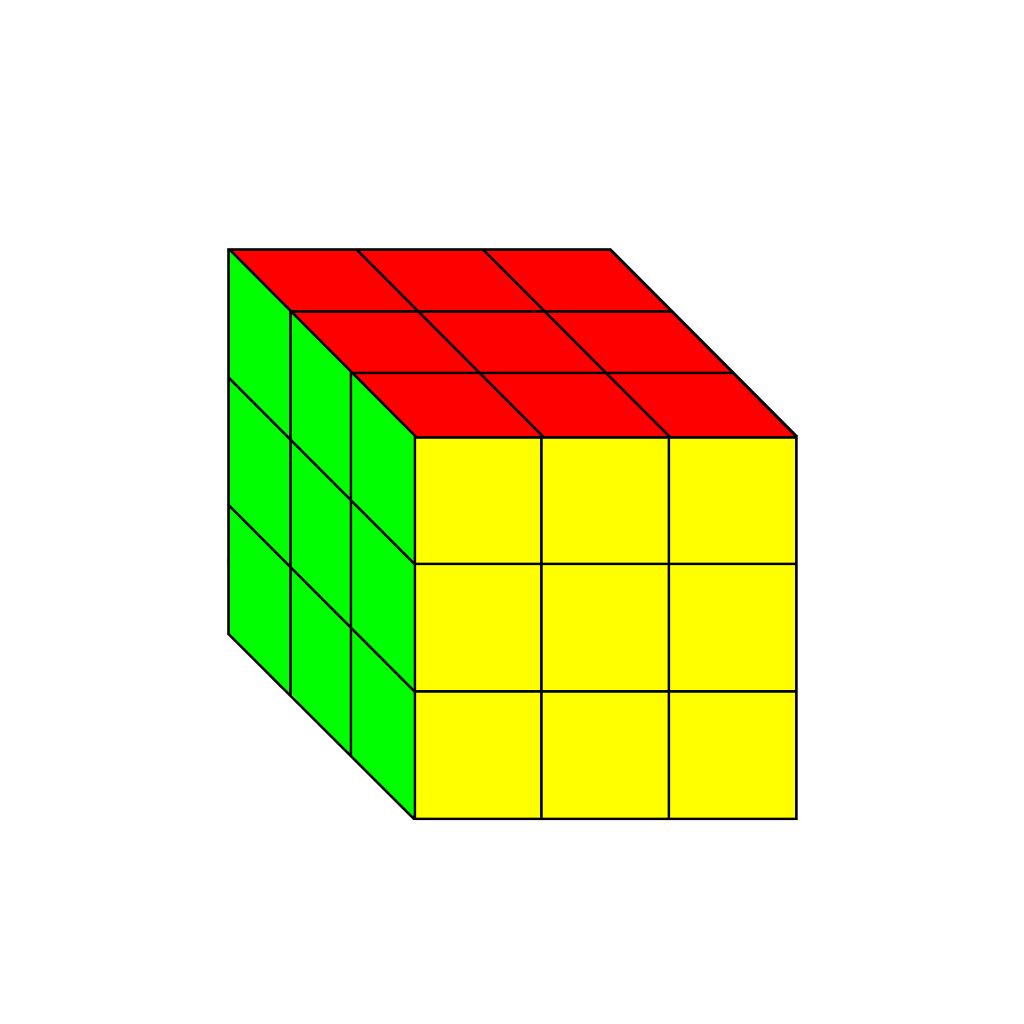

Created a prototype of a Rubik's cube in Unity and discussed a potential solution for how the Rubik's cube is going to interact with the glove, while also thinking about how we could implement the programming part of the interaction. Also started setting up the Leap Motion device that we got earlier this Thursday.

As a brief explanation: despite its many combinations, the Rubik's cube has very few options when it comes to movement. By rotating one corner, the rest of the section rotates either horizontally or vertically.

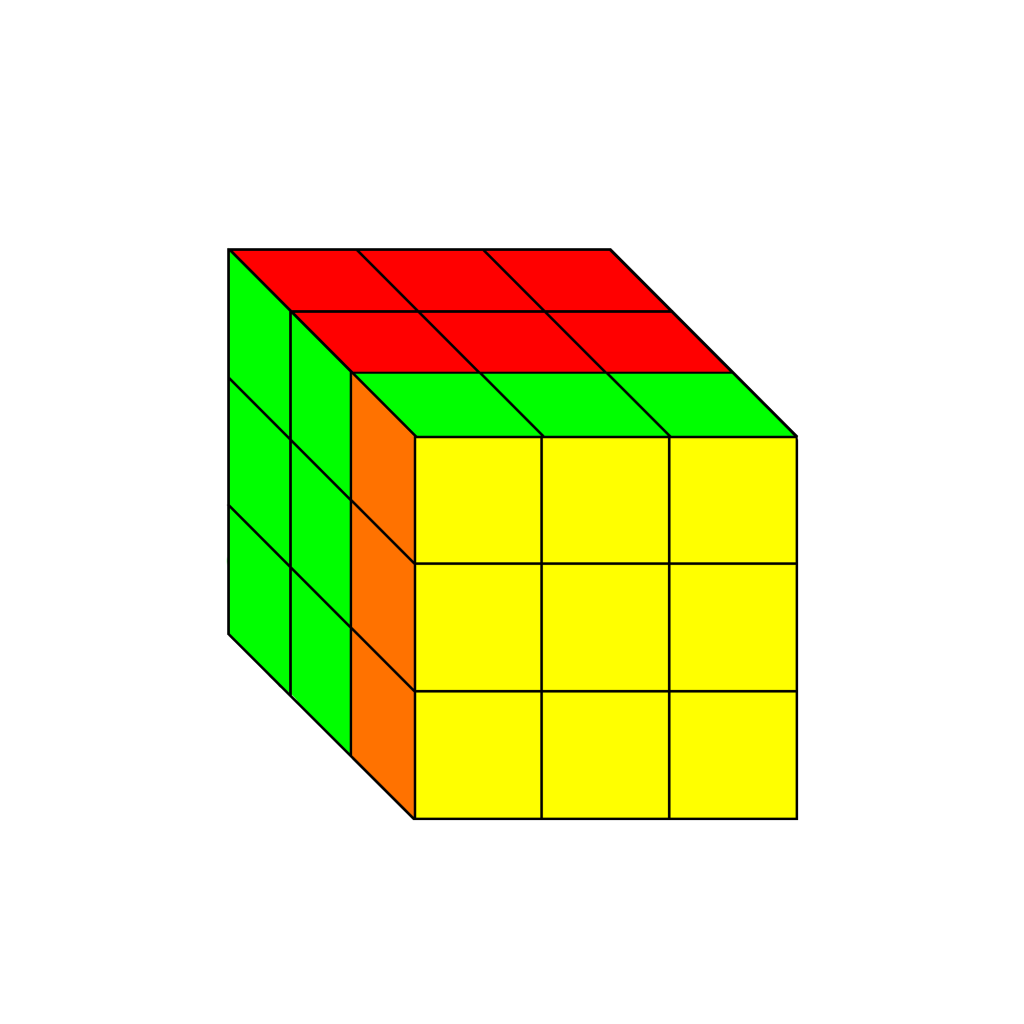

For example, if I rotate the top right of the green grid, the vertical section of that cube’s parts will react accordingly (shown in the visual illustration below).

We could also add movement on the middle corners; however, it’s not necessary in order to complete the cube.

This means that the only movement we need to script is this single one. From there on, we can apply it to every corner of the cube. However, there’s one problem with this: in real life this works because the rest of the cube is held fast by the other hand. One solution that avoids this is to take the vector of the hand movement and apply its direction to the cube. But this would also assume that there’s only a small difference in the angle at which people normally rotate. We need to test the cube in VR to see how it reacts.
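One way to turn a hand movement vector into a cube rotation is sketched below. It is not a tested solution, only an illustration under our own assumptions: the rotation axis is taken as the cross product of the grabbed face's normal and the movement vector, and is then snapped to the nearest cube axis so that slightly slanted movements still give a clean turn. The function names and the minimum movement threshold are hypothetical.

```python
import math


def cross(a, b):
    """Cross product of two 3D vectors given as (x, y, z) tuples."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def snap_to_axis(v, min_length=0.02):
    """Return the signed unit axis closest to v, or None if v is too short."""
    length = math.sqrt(sum(c * c for c in v))
    if length < min_length:
        return None  # movement too small to count as a turn
    dominant = max(range(3), key=lambda i: abs(v[i]))
    axis = [0, 0, 0]
    axis[dominant] = 1 if v[dominant] > 0 else -1
    return tuple(axis)


def rotation_axis(face_normal, movement):
    """Pick the cube axis to rotate a layer around for a given hand movement."""
    return snap_to_axis(cross(face_normal, movement))


if __name__ == "__main__":
    front = (0, 0, 1)  # normal of the face being touched
    print(rotation_axis(front, (0.0, 1.0, 0.0)))  # straight upward movement
    print(rotation_axis(front, (0.1, 1.0, 0.0)))  # slightly slanted movement
```

Snapping to the nearest axis is what absorbs the small differences in angle between users; whether the threshold feels right is something only testing in VR will show.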

Herman:

- Researched different IMUs

- Researched circuit design and Arduino code for the most basic features

- Roughly mapped out how to implement wireless communication through WLAN or BLE

- Roughly sketched out component layout for the gloves

Next week:

- Research how the current VR handheld controllers work and interact with the VR sensors.

- Acquire and test an IMU.

- Prepare a piece of code for the possibility for IoT, either through wireless or wired communication.
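As a starting point for the communication code, the sketch below packs one set of motor strengths into a small binary frame that could be sent over either a wired serial link or a wireless link. The frame layout is entirely our own assumption (a start byte, a hand id, five strength bytes and an XOR checksum), not an existing protocol, and the receiving side would have to be written to match on the microcontroller.

```python
import struct

START_BYTE = 0xAA  # assumed marker for the start of a frame
NUM_FINGERS = 5


def xor_checksum(data: bytes) -> int:
    """XOR all bytes together as a simple error check."""
    checksum = 0
    for byte in data:
        checksum ^= byte
    return checksum


def encode_frame(hand_id: int, duties) -> bytes:
    """Pack a hand id and five 0-255 motor strengths into an 8-byte frame."""
    if len(duties) != NUM_FINGERS:
        raise ValueError("expected one duty value per finger")
    body = struct.pack("BB5B", START_BYTE, hand_id, *duties)
    return body + bytes([xor_checksum(body)])


def decode_frame(frame: bytes):
    """Check a frame and return (hand_id, duties); raise ValueError if invalid."""
    if len(frame) != NUM_FINGERS + 3 or frame[0] != START_BYTE:
        raise ValueError("malformed frame")
    if xor_checksum(frame[:-1]) != frame[-1]:
        raise ValueError("checksum mismatch")
    return frame[1], list(frame[2:2 + NUM_FINGERS])


if __name__ == "__main__":
    frame = encode_frame(1, [0, 255, 0, 0, 0])
    print(frame.hex())
    print(decode_frame(frame))
```

Keeping the frame independent of the transport means the same code can be reused whether we end up with WLAN, BLE or a cable.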