Pamela

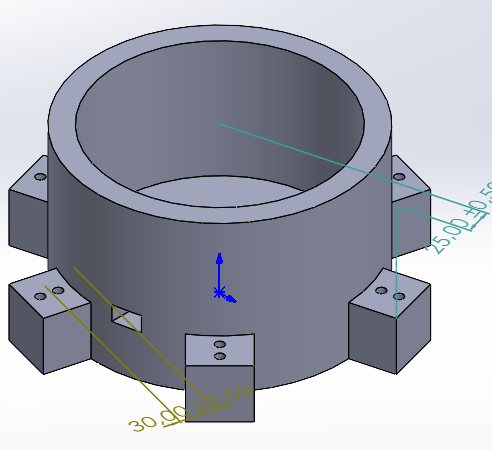

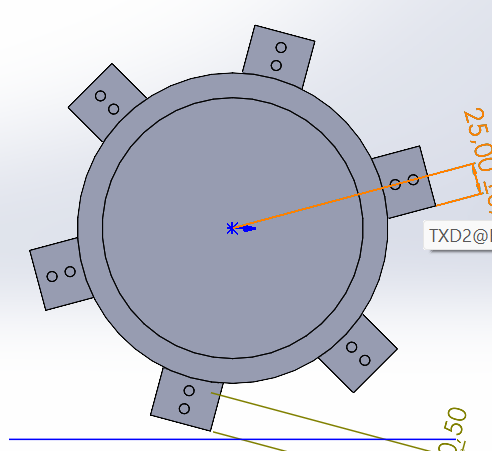

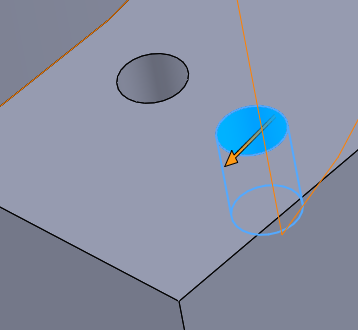

Thursday: Sergi and I made a first draft of the base. Here is a first look at how it is going to be:

We calculated all the dimensions of the base so that it can fit all the electronic components inside, which improves both the aesthetics and the functionality of the robot.

Tuesday: We all met up and divided up the work.

Adrian

I discussed different coding methods and software with the other computer engineers. At first, I wanted to try coding in RAPID and simulating with OpenCV, but RAPID is part of a paid service, so that was ruled out. We then settled on coding with ROS (Robot Operating System) together with OpenCV. I spent the rest of the week familiarizing myself with ROS and setting it up on my computer.

Sergi

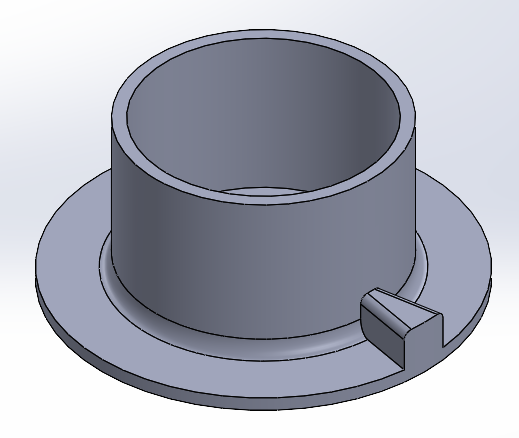

This week, Pamela and I started designing the base of our robot in SolidWorks. Here is another idea for the robot base that I made myself:

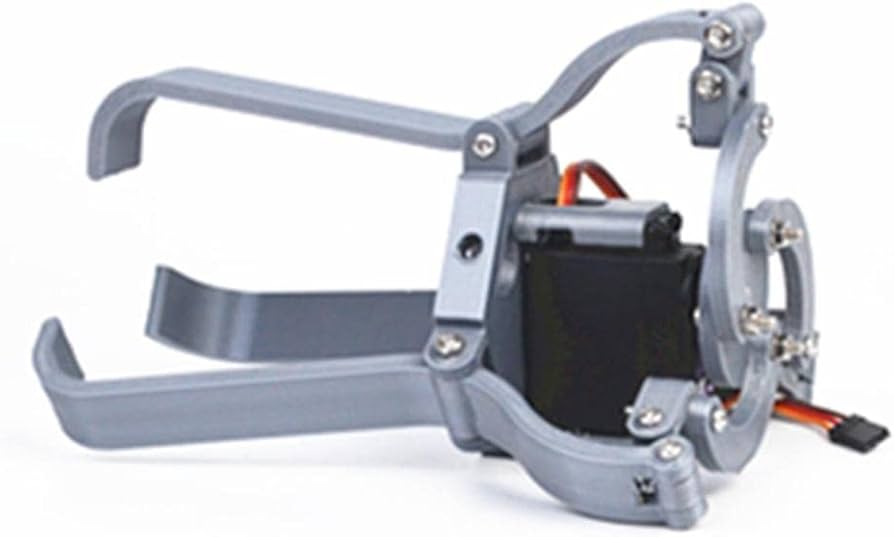

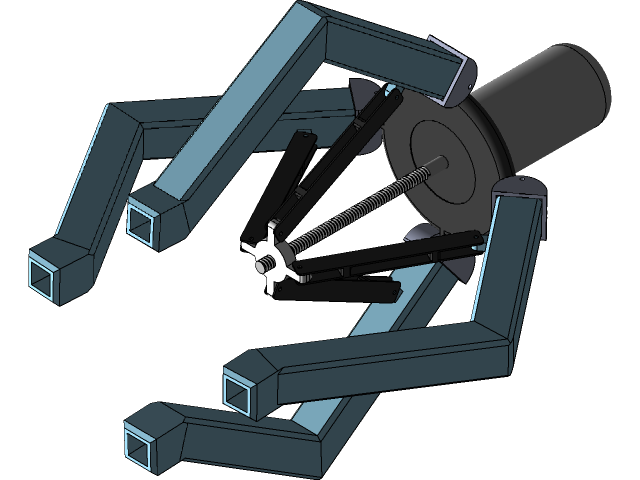

I also started thinking about what the robotic arm would look like and how many motors and movements our robot will need. In addition, I looked for a way to join the legs of our base to the base itself: https://images.app.goo.gl/GUGn66MBSRCTth1R8

Farah

This week, I made some progress on simulating a robotic arm in Unity. I focused on experimenting with ROS: after successfully setting up the environment, I explored basic communication protocols within ROS, which will be essential for controlling the robotic arm’s movement. This included testing ROS nodes and message-passing systems to ensure smooth interaction between the simulation and real-world robotics tasks.

Some essential tools I installed:

- Docker: Docker was used to containerize the ROS environment, ensuring that the development and runtime environments are consistent and easily portable. This is particularly useful when integrating the simulation across different platforms.

- Notepad++: This was my primary text editor for coding in ROS and editing configuration files. Its simplicity and support for various programming languages made it an ideal choice for the early stages of development.

Basic or a bit more advanced.

One of the key challenges in simulating the robotic arm is implementing inverse kinematics to control its joint movements. To tackle this, I began experimenting with two approaches: machine learning algorithms compatible with Unity, and a coordinate-based algorithm using MATLAB. Both methods required extensive research into mathematical models, which I am now applying within the Unity environment. The aim is to control the robotic arm in real time, making sure it moves accurately to the correct positions based on user input.
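To illustrate what a coordinate-based inverse kinematics algorithm can look like, here is a minimal sketch that assumes a simplified planar arm with only two revolute joints. The link lengths, function names and elbow parameter are hypothetical, and it is written in Python rather than MATLAB; our real arm may have more joints, which would need a different (for example numerical) solver. It uses the law of cosines to find the elbow angle, then the shoulder angle, and checks the result with forward kinematics.

```python
import math


def two_link_ik(x, y, l1, l2, elbow=1):
    """Analytic inverse kinematics for a hypothetical planar 2-joint arm.

    Returns (theta1, theta2) in radians so that the arm tip reaches (x, y).
    elbow=+1 or -1 selects one of the two mirror-image solutions.
    Raises ValueError if the target is outside the reachable workspace.
    """
    # Law of cosines: cos(theta2) from the squared distance to the target
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if abs(c2) > 1.0 + 1e-9:
        raise ValueError("target is out of reach")
    c2 = max(-1.0, min(1.0, c2))  # clamp tiny floating-point rounding errors
    s2 = elbow * math.sqrt(1.0 - c2 * c2)
    theta2 = math.atan2(s2, c2)
    # Shoulder angle: direction to the target minus the offset caused by link 2
    theta1 = math.atan2(y, x) - math.atan2(l2 * s2, l1 + l2 * c2)
    return theta1, theta2


def two_link_fk(theta1, theta2, l1, l2):
    """Forward kinematics, used here to check the IK result."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


if __name__ == "__main__":
    # Hypothetical link lengths, in the same units as the target coordinates
    L1, L2 = 1.0, 0.8
    t1, t2 = two_link_ik(0.5, 1.2, L1, L2)
    print(math.degrees(t1), math.degrees(t2))
    print(two_link_fk(t1, t2, L1, L2))  # approximately (0.5, 1.2)
```

Because this closed-form solution only needs a few trigonometric calls, it is cheap enough to recompute on every frame, which fits the goal of real-time control from user input.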

Adam

This week I started researching online how the jaw mechanism of the mechanical arm works and what designs could be used for it.

Jakub

I have just been assigned to this group, and we have assigned roles to each other. Together with my colleague Adam, I have started work on the claw mechanism for our arm.