Hey there, beautiful human beings! 💖✨ Another week has passed and it has now been an entire month since we lost a member, so we must be doing something right! 🎉

While we still need to downsize the robot so it actually fits in the maze, there has still been plenty of other progress in preparation for this.

Jonathan 🧙‍♂️

This week I’m exploring alternatives to the localization approach I looked at previously. I suspect there are simpler, more intuitive ways to track the position of the mouse that involve fewer sensors. The problems I want to solve are the following:

Determine the coordinates of the mouse inside the maze.

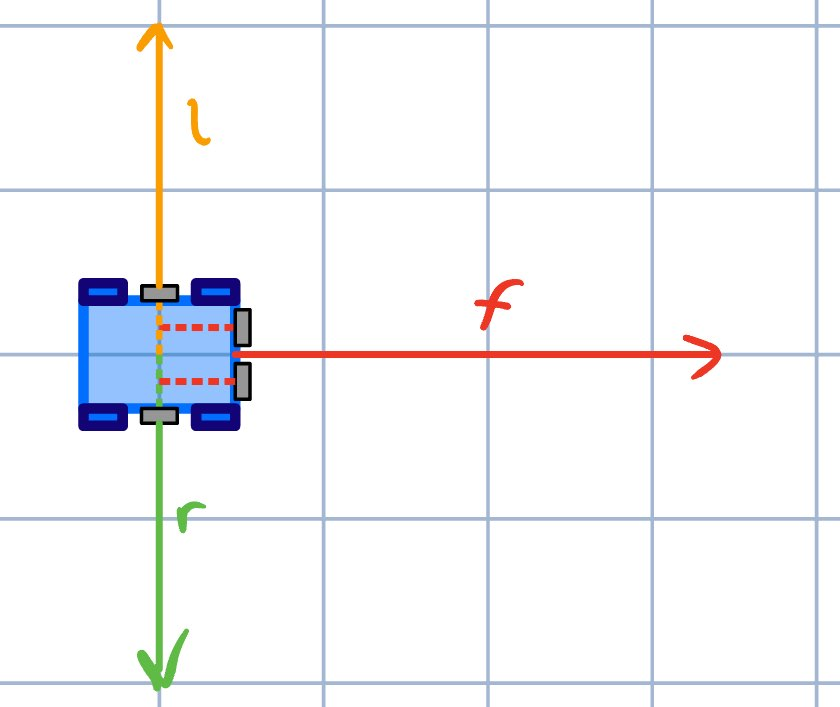

Let f, l, and r be the distances measured from the mouse to the nearest walls in the front, left-hand and right-hand directions respectively. These will be measured with ultrasonic sensors. Incremental changes in these variables will be denoted df, dl and dr.

Before we can trust these distance values, we must either know the angle the system is heading in and include it in our calculations, or use regulation techniques to keep the system perpendicular to the front wall and parallel to the side walls.
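If we go the "know the angle" route, the correction is just trigonometry: a beam hitting a flat wall at an angle θ from the wall's normal travels p / cos θ to reach a wall that is really p away. Here's a minimal C++ sketch, assuming a flat wall, a sensor at the mouse's center of rotation, and ignoring the width of the ultrasonic beam; `perpendicularDistance` is a name made up for illustration.

```cpp
#include <cmath>

// Converts a raw distance reading into the perpendicular distance to the wall.
// Assumptions (ours, not from the post): the wall is flat, the sensor sits at the
// mouse's center of rotation, the beam is treated as a single ray, and
// headingErrorRad is the angle between the beam and the wall's normal
// (0 when the mouse is perfectly aligned).
double perpendicularDistance(double measured, double headingErrorRad) {
    return measured * std::cos(headingErrorRad);
}
```

Even a 10° error only shrinks the true distance by about 1.5% (cos 10° ≈ 0.985), so small misalignments matter less for f, l and r than for the accumulated position estimate.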

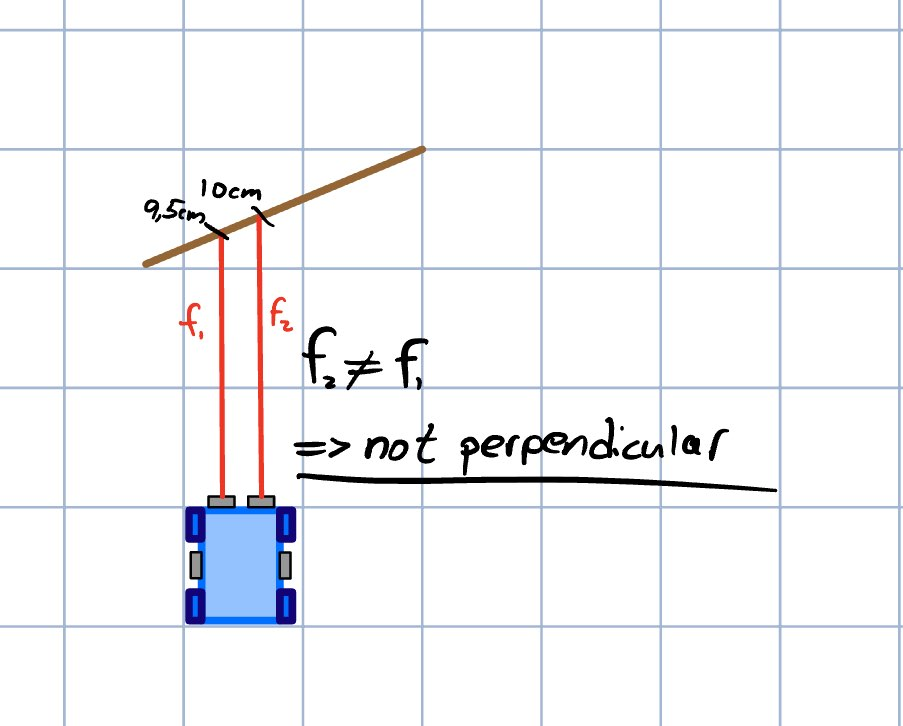

There are two approaches I want to consider for regulating the system towards being perpendicular to the front wall. The first is to mount two distance sensors facing the same direction and rotate the system until the difference between their readings is 0.
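With two front sensors, the difference between the readings also tells us the angle. Assuming both sensors point straight ahead, sit `baseline` apart across the mouse, and the front wall is flat, the readings differ by baseline·tan θ. Here's a minimal sketch with a plain proportional regulator; the function names and the gain `kP` are hypothetical.

```cpp
#include <cmath>

// Heading error from two forward-facing sensors mounted side by side.
// Assumed geometry (hypothetical): both sensors point straight ahead, their
// centers are `baseline` apart across the mouse, and the front wall is flat.
// Returns radians; positive means the mouse is rotated counter-clockwise
// (to the left), since the left sensor then reads further than the right one.
double headingErrorFromFrontPair(double frontLeft, double frontRight, double baseline) {
    return std::atan((frontLeft - frontRight) / baseline);
}

// Proportional regulation: the commanded rotation rate opposes the error, so the
// mouse turns until the two readings match. kP is a hypothetical gain to tune.
double rotationCommand(double frontLeft, double frontRight, double baseline, double kP) {
    return -kP * headingErrorFromFrontPair(frontLeft, frontRight, baseline);
}
```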

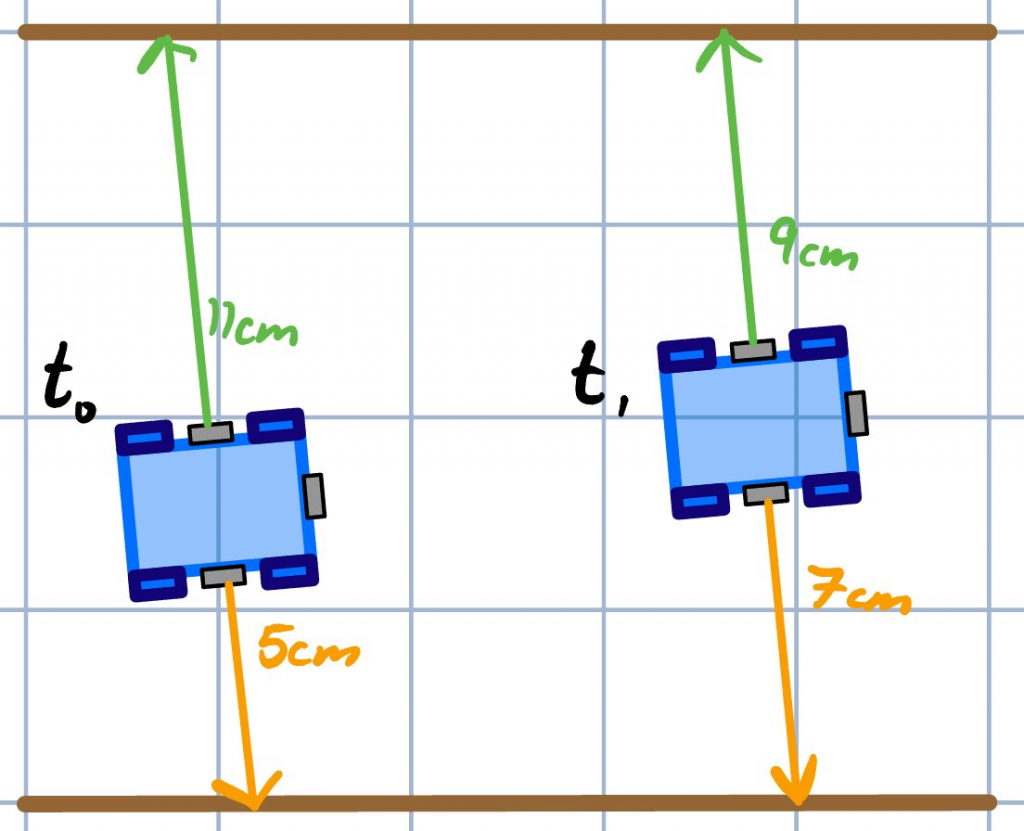

We can also find an orientation perpendicular to the front wall using our left and right distance sensors, by assuming that these distances should not change when the system moves forwards or backwards. Any change in these values indicates a non-perpendicular orientation and can thus be regulated towards 0. Combining this method with the front-facing sensors can provide extra redundancy, or it can replace the front method altogether. 🎯
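The side-sensor idea can also give an actual angle rather than just "changed / didn't change". Assuming straight side walls, sensors at the center of rotation, a constant heading during the move and the same walls staying in view, moving a distance s with heading error θ gives df = -s, dl = -s·tan θ and dr = +s·tan θ. A minimal sketch (the function name is hypothetical):

```cpp
#include <cmath>
#include <optional>

// Heading error from how the side distances changed during a straight move.
// Assumptions (ours, not from the post): straight, flat side walls, sensors at
// the center of rotation, heading constant during the move, same walls in view.
// Positive result = mouse rotated to the left. Since df = -s, dl = -s*tan(theta)
// and dr = +s*tan(theta), we get tan(theta) = (dl - dr) / (2 * df).
std::optional<double> headingErrorFromSideDrift(double df, double dl, double dr) {
    if (std::abs(df) < 1e-6) {
        return std::nullopt;  // no forward/backward movement, nothing to infer
    }
    return std::atan((dl - dr) / (2.0 * df));
}
```

If only one side wall is visible, dl / df (or -dr / df) alone gives tan θ under the same assumptions.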

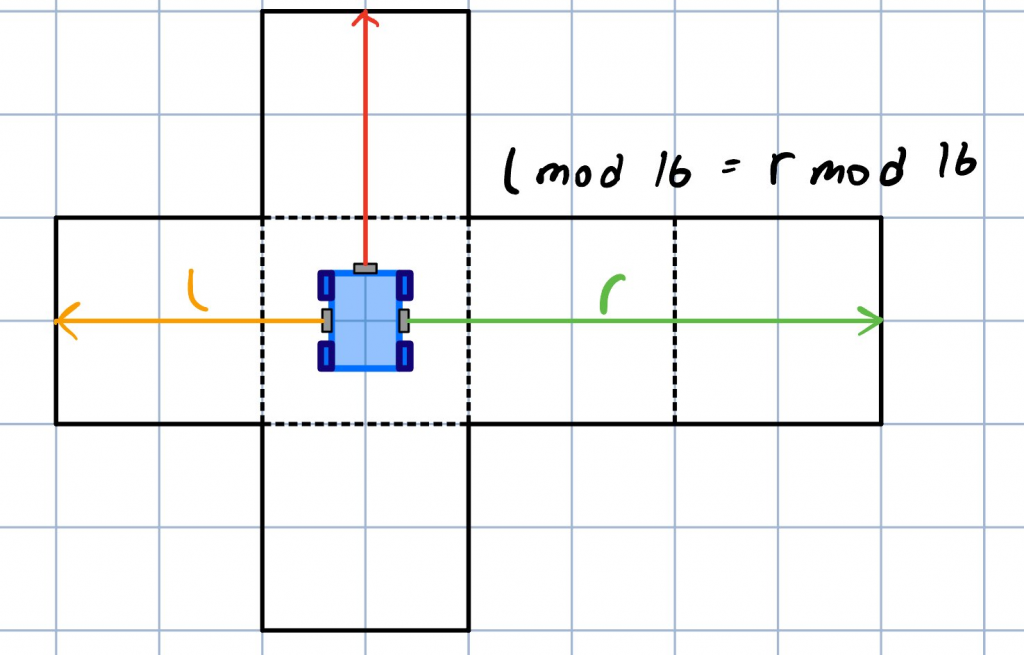

To keep the system in the middle of a “tile”, we can simply regulate the left and right distances until their difference is 0. However, when there is an unequal number of open tiles on either side, we must use modulo division to stay centered in our own tile rather than regulating towards the center of the whole open area. 🏹
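Here's what the modulo trick could look like, assuming distances are measured from the mouse's center, walls have no thickness, and a hypothetical tile size of 18 cm (the classic micromouse cell size; swap in whatever our maze actually uses). `centeringError` is a made-up name.

```cpp
#include <cmath>

// Hypothetical tile size in cm (classic micromouse cells are 18 cm).
constexpr double kTileSize = 18.0;

// Centering error within the current tile. Taking each side distance modulo the
// tile size discards whole open tiles, so the error is 0 whenever the mouse sits
// in the middle of its own tile, however many open tiles are on either side.
// Positive result: the mouse is off center towards the right.
double centeringError(double left, double right) {
    return std::fmod(left, kTileSize) - std::fmod(right, kTileSize);
}
```

For example, with the left wall 27 cm away and the right wall 9 cm away, both remainders are 9 cm and the error is 0, whereas the raw difference of 18 cm would drag the mouse towards the middle of the open area. The trick only holds while the mouse is less than half a tile off center; beyond that the remainder wraps around.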

Now that we can safely assume the orientation and centering of the system, we can attempt to determine its position. 👊

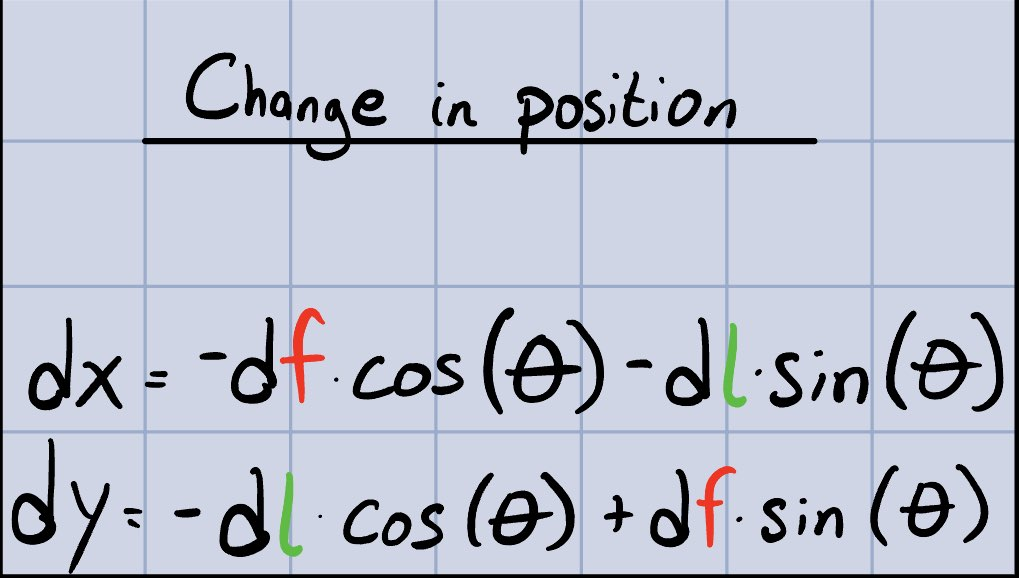

Remember df, dl, and dr? Let’s combine them with the system’s orientation θ to track its change in position as follows.
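As a rough illustration in code (not necessarily the same formula as in the image), here's a dead-reckoning update assuming θ has been regulated to one of four headings, with x pointing east and y pointing north. `Heading`, `Vec2` and `updatePosition` are hypothetical names.

```cpp
// Hypothetical cardinal headings, assuming orientation is regulated to one of four.
enum class Heading { North, East, South, West };

struct Vec2 {
    double x;  // east
    double y;  // north
};

// Unit vector pointing in the direction of travel.
Vec2 forwardOf(Heading h) {
    switch (h) {
        case Heading::North: return {0.0, 1.0};
        case Heading::East:  return {1.0, 0.0};
        case Heading::South: return {0.0, -1.0};
        case Heading::West:  return {-1.0, 0.0};
    }
    return {0.0, 0.0};
}

// Unit vector pointing to the mouse's left (forward rotated 90° counter-clockwise).
Vec2 leftOf(Heading h) {
    const Vec2 f = forwardOf(h);
    return {-f.y, f.x};
}

// Dead-reckoning update from sensor deltas. Moving forward shrinks f, so the
// forward displacement is -df; drifting left shrinks l and grows r, so the
// sideways displacement is the average (dr - dl) / 2.
// Only valid while the same walls stay in view (a wall appearing or
// disappearing makes the deltas jump).
Vec2 updatePosition(Vec2 pos, Heading h, double df, double dl, double dr) {
    const Vec2 fwd = forwardOf(h);
    const Vec2 left = leftOf(h);
    const double forwardMove = -df;
    const double leftMove = (dr - dl) / 2.0;
    return {pos.x + fwd.x * forwardMove + left.x * leftMove,
            pos.y + fwd.y * forwardMove + left.y * leftMove};
}
```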

Determine the placement of walls in the maze.

If we know the position and orientation of the system, any obstruction detected by our ultrasonic sensors can be interpreted as a wall and placed into our virtual maze.

This method is performed in two steps: 1) determine the tile in which the wall exists, and 2) determine the direction of the wall inside its tile (top, right, bottom, left).

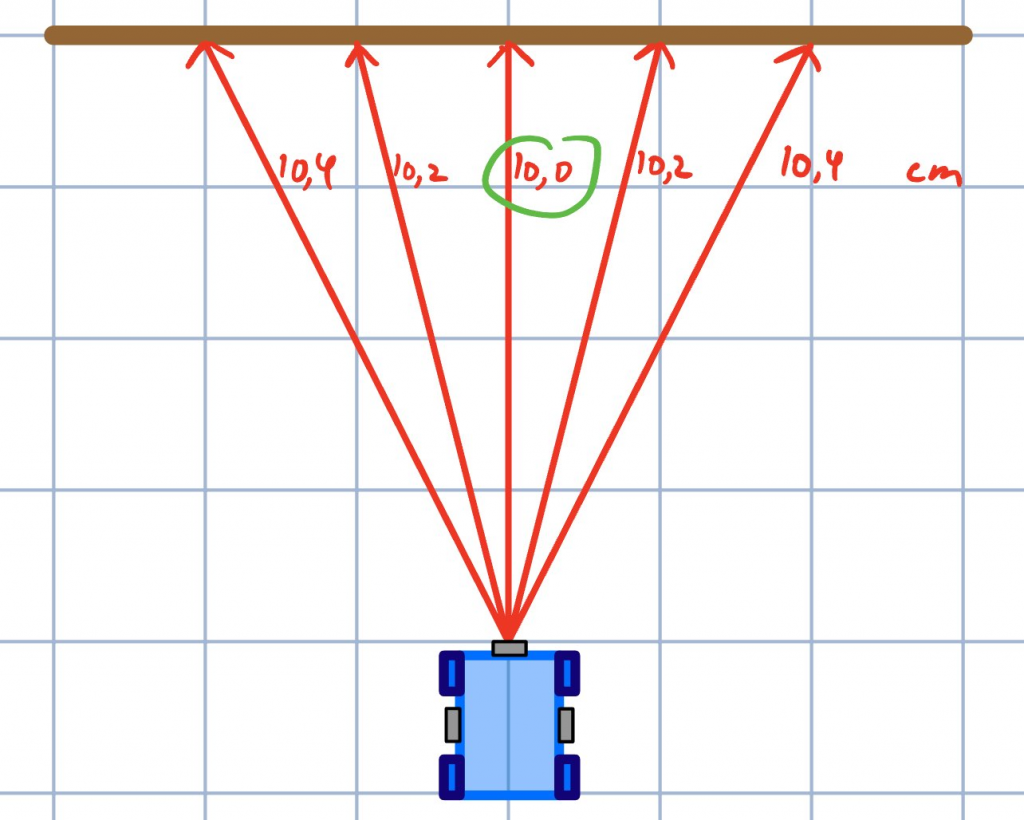

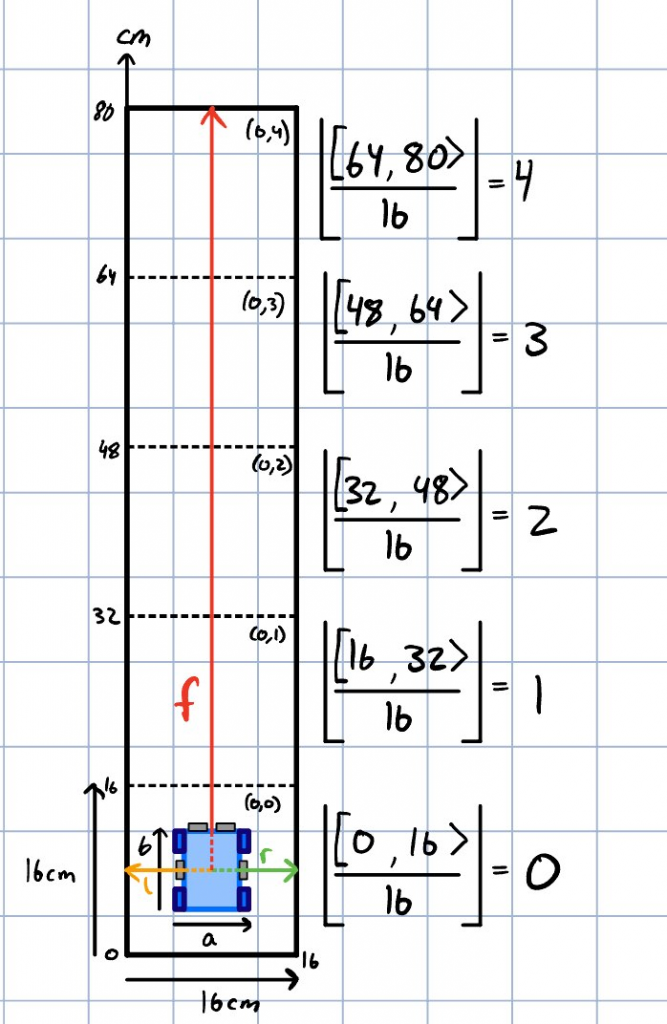

The image above shows how we can calculate the tile numbers based on distance readings and floor division.
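Here's a minimal sketch of both steps, assuming the mouse is centered in a known tile, distances are measured from its center, walls have no thickness, and tiles are a hypothetical 18 cm. `Tile`, `Side`, `Wall` and `locateWall` are names made up for illustration. A wall on the far edge of the current tile is half a tile away, one tile further out it is 1.5 tiles away, and so on, so floor division by the tile size gives how many tiles out the wall's tile is.

```cpp
#include <cmath>

// Hypothetical tile size in cm (classic micromouse cells are 18 cm).
constexpr double kTileSize = 18.0;

enum class Side { Top, Right, Bottom, Left };

struct Tile {
    int col;  // increases to the right
    int row;  // increases towards the top
};

struct Wall {
    Tile tile;  // step 1: the tile the wall belongs to
    Side side;  // step 2: which edge of that tile it sits on
};

// Places a detected obstruction in the virtual maze.
// Assumptions: the mouse is centered in `current`, the sensor looks straight
// along `dir`, and `distance` is measured from the mouse's center.
Wall locateWall(Tile current, Side dir, double distance) {
    // Whole tiles between the mouse's tile and the wall's tile.
    const int steps = static_cast<int>(std::floor(distance / kTileSize));
    Tile t = current;
    switch (dir) {
        case Side::Top:    t.row += steps; break;
        case Side::Bottom: t.row -= steps; break;
        case Side::Right:  t.col += steps; break;
        case Side::Left:   t.col -= steps; break;
    }
    // The wall is on the edge of that tile facing away from the mouse.
    return {t, dir};
}
```

For example, a reading of 27 cm to the right of a mouse in tile (2, 3) places a wall on the right edge of tile (3, 3).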

Mats 🧑‍💻

Monday this week was spent watching Jan Dyre’s presentation on signal processing. In addition, these are the things I did throughout the week:

Implementing callback-based timers ⏲️

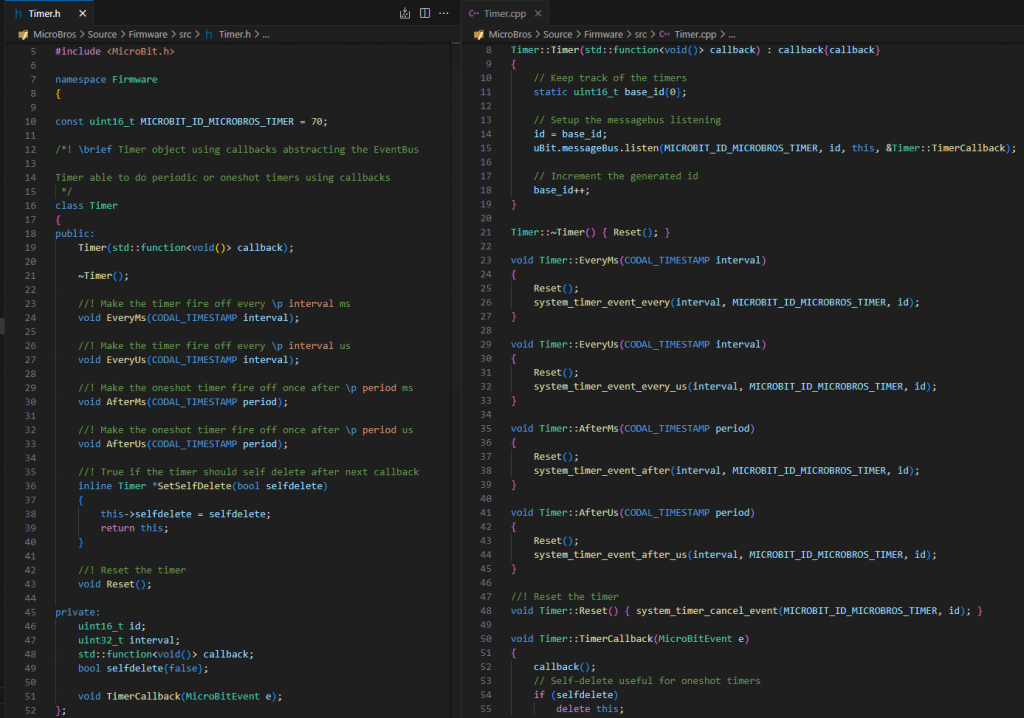

CODAL already provides ways to interact with the system timer, but they are event-based: you provide an integer that is sent along to a common event handler, rather than the more familiar high-level approach of one callback per timer that many of us are used to.

However, implementing callbacks on top of this was easy, and I did so while implementing output smoothing for the motor driver. Here is the code!

It is not perfect: you can easily run out of IDs when rapidly creating one-shot timers. At the moment this is not an issue, but it is something to watch out for and rectify if one-shot timers ever end up being used in the code.
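Here's a rough sketch of the general idea in plain C++, not the code from our repo: a table mapping the integer ID that comes with each timer event to its own callback. The class and method names are hypothetical, and the CODAL side (scheduling the timer event and hooking `onTimerEvent` up to the shared handler) is deliberately left out rather than guessed. It also recycles freed IDs, which is one possible way to address the ID exhaustion mentioned above.

```cpp
#include <cstdint>
#include <functional>
#include <unordered_map>
#include <utility>
#include <vector>

// Maps the integer delivered with each timer event to a per-timer callback.
// Freed IDs are recycled so rapidly created one-shot timers do not exhaust them.
class TimerCallbacks {
public:
    // Registers a callback and returns the ID to schedule with the system timer.
    uint16_t add(std::function<void()> callback, bool repeating) {
        uint16_t id;
        if (!freeIds_.empty()) {
            id = freeIds_.back();
            freeIds_.pop_back();
        } else {
            id = nextId_++;  // only grows when no freed ID is available
        }
        entries_[id] = Entry{std::move(callback), repeating};
        return id;
    }

    // Shared event handler: look up the timer by ID and run its callback.
    void onTimerEvent(uint16_t id) {
        auto it = entries_.find(id);
        if (it == entries_.end()) {
            return;  // cancelled or unknown timer
        }
        // Copy the callback so it can safely add or cancel timers while running.
        std::function<void()> callback = it->second.callback;
        if (!it->second.repeating) {
            remove(id);  // one-shot: release the ID before running
        }
        callback();
    }

    // Cancels a timer and makes its ID available again.
    void remove(uint16_t id) {
        if (entries_.erase(id) > 0) {
            freeIds_.push_back(id);
        }
    }

private:
    struct Entry {
        std::function<void()> callback;
        bool repeating;
    };
    std::unordered_map<uint16_t, Entry> entries_;
    std::vector<uint16_t> freeIds_;
    uint16_t nextId_ = 1;
};
```

One caveat with recycling: before reusing the ID of a cancelled repeating timer, the matching timer event on the CODAL side has to be stopped too, or a stale event would fire the new callback.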

Implementing output smoothing for the DFR0548 driver 🛞

In short, switching the direction of a motor while it is running has a high chance of triggering the over-current protection. So I wrote some code that smooths this transition when the old and new values are in opposite directions, and this seems to have helped.
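Here's a minimal sketch of one way to do this kind of smoothing, not necessarily how our driver does it: when a new target reverses direction, the output is ramped through zero by at most `maxStep` per tick, while same-direction changes are applied immediately. The signed integer speed values, `MotorSmoother` and its methods are hypothetical; `tick()` would be called periodically, for example from a timer.

```cpp
#include <algorithm>

// Smooths direction reversals for a signed motor command (sign = direction).
class MotorSmoother {
public:
    explicit MotorSmoother(int maxStep) : maxStep_(maxStep) {}

    // Sets a new target. A reversal starts a ramp; other changes apply at once
    // unless a ramp is already in progress.
    void setTarget(int target) {
        const bool reversing = (output_ > 0 && target < 0) || (output_ < 0 && target > 0);
        target_ = target;
        if (!reversing && !ramping_) {
            output_ = target;
        } else {
            ramping_ = true;
        }
    }

    // Advances the ramp by one step and returns the value to send to the driver.
    int tick() {
        if (ramping_) {
            if (output_ < target_) {
                output_ = std::min(output_ + maxStep_, target_);
            } else if (output_ > target_) {
                output_ = std::max(output_ - maxStep_, target_);
            }
            if (output_ == target_) {
                ramping_ = false;
            }
        }
        return output_;
    }

    int output() const { return output_; }

private:
    int maxStep_;
    int target_ = 0;
    int output_ = 0;
    bool ramping_ = false;
};
```

With a step of 50, reversing from 100 to -100 produces 50, 0, -50, -100 over four ticks instead of a single jump.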

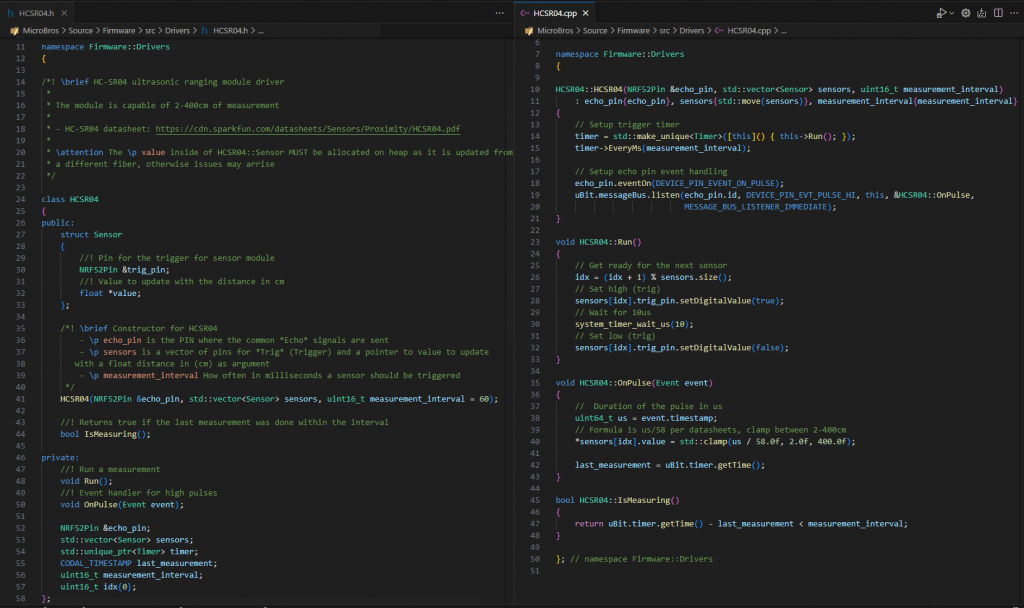

Writing a C++ driver for the HC-SR04 📏

On Monday, Jonathan asked me if I could write a C++ driver for the ultrasonic sensor this week, so I did. I designed it to support multiple sensors sharing a single echo pin.

By using the CODAL event system, which uses interrupts internally, I was able to move from blocking the CPU while waiting for the falling edge to simply using the CODAL pulse event. This means the driver only sleeps for the 10 µs needed for the trigger signal!
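Two small pieces that sit around the pulse event, sketched in plain C++ under our own naming (`echoToCm` and `SharedEchoSensors` are hypothetical, and the actual pin and CODAL handling is left out): converting the pulse width to centimeters, and attributing each pulse on the shared echo pin to the right sensor. The speed of sound is taken as 343 m/s (air at about 20 °C), so readings drift slightly with temperature.

```cpp
#include <cstddef>
#include <vector>

// Converts an HC-SR04 echo pulse width (µs) to a distance in cm. Sound travels
// about 0.0343 cm/µs at 20 °C and the pulse covers the round trip, hence the
// division by two (close to the datasheet's "µs / 58" rule of thumb).
double echoToCm(double pulseMicros) {
    return pulseMicros * 0.0343 / 2.0;
}

// Bookkeeping for several sensors sharing one echo pin: only one sensor is
// triggered at a time, so each echo pulse belongs to the last one triggered.
class SharedEchoSensors {
public:
    explicit SharedEchoSensors(std::size_t count) : distances_(count, 0.0) {}

    // Returns the index of the sensor to trigger next (round robin).
    std::size_t nextToTrigger() {
        active_ = next_;
        next_ = (next_ + 1) % distances_.size();
        return active_;
    }

    // Call from the pulse event handler with the measured pulse width.
    void onEchoPulse(double pulseMicros) {
        distances_[active_] = echoToCm(pulseMicros);
    }

    double distanceCm(std::size_t sensor) const { return distances_[sensor]; }

private:
    std::vector<double> distances_;
    std::size_t next_ = 0;
    std::size_t active_ = 0;
};
```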

Implementing flood fill 🌊🚰

Building on Iver’s earlier research, I decided to implement flood fill in our Core library, which means it can be used in the Firmware and tested in the Simulator.

I implemented it by adding another vector holding a value for every tile. Goal tiles are initialized to 0 and pushed onto a stack, and all other tiles are initialized to the maximum value.

After that, I pop tiles off the stack until it is empty. For each tile, I add 1 to its value and check whether any adjacent tile has a higher value and is not blocked by a wall; if so, I update that tile’s value and push it onto the stack.
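Here's a minimal C++ sketch of the flood fill described above. The `Maze` struct, the wall bitmask and `floodFill` are hypothetical stand-ins rather than the actual types in our Core library, and it assumes every wall is recorded on both of the tiles it separates.

```cpp
#include <climits>
#include <cstdint>
#include <stack>
#include <vector>

// Hypothetical maze representation: each tile stores a 4-bit wall mask.
enum WallBit : uint8_t { kNorth = 1, kEast = 2, kSouth = 4, kWest = 8 };

struct Maze {
    int width;
    int height;
    std::vector<uint8_t> walls;  // index = y * width + x
};

// Goal tiles start at 0 and go on a stack, all other tiles start at the maximum
// value, and tiles are relaxed until the stack is empty. Unreachable tiles keep INT_MAX.
std::vector<int> floodFill(const Maze& maze, const std::vector<int>& goals) {
    std::vector<int> dist(maze.walls.size(), INT_MAX);
    std::stack<int> todo;
    for (int g : goals) {
        dist[g] = 0;
        todo.push(g);
    }

    // Neighbor offsets paired with the wall bit that would block that move.
    const int dx[4] = {0, 1, 0, -1};
    const int dy[4] = {1, 0, -1, 0};
    const uint8_t block[4] = {kNorth, kEast, kSouth, kWest};

    while (!todo.empty()) {
        const int cur = todo.top();
        todo.pop();
        const int x = cur % maze.width;
        const int y = cur / maze.width;
        const int candidate = dist[cur] + 1;
        for (int d = 0; d < 4; ++d) {
            const int nx = x + dx[d];
            const int ny = y + dy[d];
            if (nx < 0 || ny < 0 || nx >= maze.width || ny >= maze.height) continue;
            if (maze.walls[cur] & block[d]) continue;  // wall in the way
            const int next = ny * maze.width + nx;
            if (dist[next] > candidate) {
                dist[next] = candidate;
                todo.push(next);
            }
        }
    }
    return dist;
}
```

Because a tile is pushed again whenever its value improves, the stack version still converges to the shortest distances; a queue (breadth-first order) would reach the same result while settling each tile on its first visit.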

There are still improvements to be made. One is better direction prioritization: when there are multiple ways to move towards the goal, forward is currently always prioritized.

Also, instead of the map only storing how many tiles away from the goal each tile is, it could use weights that account for turns and movement costs, giving a more accurate estimate of travel time. However, these are not a high priority for now!
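If we ever get around to weighted costs, one option (a swapped-in technique, not something implemented yet) is Dijkstra’s algorithm over (tile, heading) states, so that a 90° turn can cost extra time on top of driving one tile. The maze types mirror a hypothetical wall-bitmask layout, and `moveCost` and `turnCost` are made-up parameters that would come from measurements.

```cpp
#include <climits>
#include <cstdint>
#include <functional>
#include <queue>
#include <utility>
#include <vector>

// Hypothetical maze representation: each tile stores a 4-bit wall mask.
enum WallBit : uint8_t { kNorth = 1, kEast = 2, kSouth = 4, kWest = 8 };

struct Maze {
    int width;
    int height;
    std::vector<uint8_t> walls;  // index = y * width + x
};

// Cheapest total cost from (start, startHeading) to any goal tile, or INT_MAX.
// Headings: 0 = N, 1 = E, 2 = S, 3 = W. A state is encoded as tile * 4 + heading.
int weightedCost(const Maze& maze, int start, int startHeading,
                 const std::vector<int>& goals, int moveCost, int turnCost) {
    const int dx[4] = {0, 1, 0, -1};
    const int dy[4] = {1, 0, -1, 0};
    const uint8_t block[4] = {kNorth, kEast, kSouth, kWest};

    std::vector<bool> isGoal(maze.walls.size(), false);
    for (int g : goals) isGoal[g] = true;

    std::vector<int> best(maze.walls.size() * 4, INT_MAX);
    using Item = std::pair<int, int>;  // (cost, state)
    std::priority_queue<Item, std::vector<Item>, std::greater<Item>> pq;
    best[start * 4 + startHeading] = 0;
    pq.push({0, start * 4 + startHeading});

    while (!pq.empty()) {
        auto [cost, state] = pq.top();
        pq.pop();
        if (cost > best[state]) continue;  // stale queue entry
        const int tile = state / 4;
        const int h = state % 4;
        if (isGoal[tile]) return cost;  // first goal popped is the cheapest

        // Transitions: turn left, turn right, or drive one tile forward.
        std::vector<Item> next = {{cost + turnCost, tile * 4 + (h + 3) % 4},
                                  {cost + turnCost, tile * 4 + (h + 1) % 4}};
        const int nx = tile % maze.width + dx[h];
        const int ny = tile / maze.width + dy[h];
        if (nx >= 0 && ny >= 0 && nx < maze.width && ny < maze.height &&
            !(maze.walls[tile] & block[h])) {
            next.push_back({cost + moveCost, (ny * maze.width + nx) * 4 + h});
        }
        for (const auto& [c, s] : next) {
            if (c < best[s]) {
                best[s] = c;
                pq.push({c, s});
            }
        }
    }
    return INT_MAX;
}
```

With `turnCost` set to 0 and `moveCost` to 1, this reduces to the plain tile count that the current flood fill produces.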

Iver 👴

After more attempts than should have been necessary, I have finally got the IR sensor working as it should. Now all we need to do is calibrate the sensors and run the readings through a filter to remove interference from other light sources, and we should be good to go!
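We haven’t picked the filter yet, so treat this as one common option rather than the plan: take one reading with the IR emitter off (ambient light only) and one with it on, keep the difference, then smooth it with an exponential moving average. `IrFilter` and the smoothing factor `alpha` are hypothetical.

```cpp
// Ambient-light rejection plus exponential moving average for an IR sensor.
// alpha (0 < alpha <= 1) is a hypothetical tuning parameter: higher reacts faster,
// lower smooths more.
class IrFilter {
public:
    explicit IrFilter(double alpha) : alpha_(alpha) {}

    // emitterOn / emitterOff: raw readings taken back to back, with the IR
    // emitter switched on and off respectively.
    double update(int emitterOn, int emitterOff) {
        const double signal = static_cast<double>(emitterOn - emitterOff);
        if (!initialized_) {
            value_ = signal;  // seed the average with the first sample
            initialized_ = true;
        } else {
            value_ += alpha_ * (signal - value_);
        }
        return value_;
    }

private:
    double alpha_;
    double value_ = 0.0;
    bool initialized_ = false;
};
```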

Testing the IR sensor

Next week on Keeping Up With the MicroBros 🫂

- Maybe we’ll get to rebuild the robot so it fits the maze

- Potentially some refactoring when it comes to the Simulator

- An even worse opening to the weekly blog post

One response to “Keeping Up With the MicroBros – Week 8”

Less gooooooooo