Date: 19.09.2019 – 26.09.2019

This week we have done a lot of technical research to learn more about machine learning, specifically about working with large datasets to achieve autonomy. We are working with the TensorFlow library and are currently setting it up to run as a ROS node. TensorFlow is a large, Python-friendly open-source library for numerical computation that makes machine learning practical: it can train and run deep neural networks for digit classification, image recognition, natural language processing and much more. We compared several machine learning libraries, and we also looked at the well-known object detection algorithm YOLOv3, which is commonly used for object detection in images. After some discussion we concluded that YOLOv3 is overkill for our project and that TensorFlow is enough to fulfill all our requirements.

Another reason we decided to use TensorFlow is that our embedded single-board computer, the NVIDIA Jetson Nano, is well supported for running TensorFlow models. NVIDIA also provides TensorRT, its own SDK for optimizing trained neural networks for fast inference, which integrates with TensorFlow. NVIDIA claims that TensorRT-based applications perform up to 40x faster than CPU-only platforms during inference, which is an important factor in our drone project, since we depend on fast and reliable performance.

We have also been looking at different datasets for this project. A dataset is simply a collection of data, which in our case is a large collection of labeled images of objects. There are numerous existing datasets online containing predefined objects and items, many of them prepared by research groups and the wider machine learning community. Since machine learning depends heavily on data, a good dataset is essential to achieve proper autonomy. We decided to use a large dataset named COCO (Common Objects in Context), which is well known and widely used for object detection, object segmentation and superpixel stuff segmentation. It is one of the best image datasets available, and it is widely used in cutting-edge image recognition and artificial intelligence research.

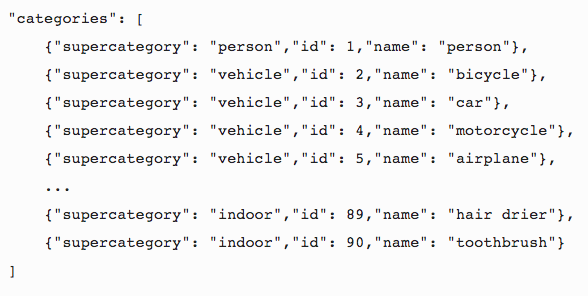

Since we are building a drone that recognizes and detects specific objects, we depend on a large dataset of pre-labeled images to recognize “everything” surrounding the drone. Even though COCO contains a lot of information, it is not perfect, and we still need to extend it and train our model on it so that it works better for our use. Luckily, the COCO annotations are stored in JSON, which makes them easy to integrate and adjust for our surveillance drone. The annotations are organized into categories, each representing one type of item, such as dog or boat, and each category belongs to a supercategory, such as animal or vehicle. The standard COCO object detection annotations contain 80 categories (with IDs numbered up to 90, since some IDs are unused), and our job is to extend this list with additional categories so that it works better with our drone. You can see below how items are categorized in the dataset; adding more items to the list is straightforward.

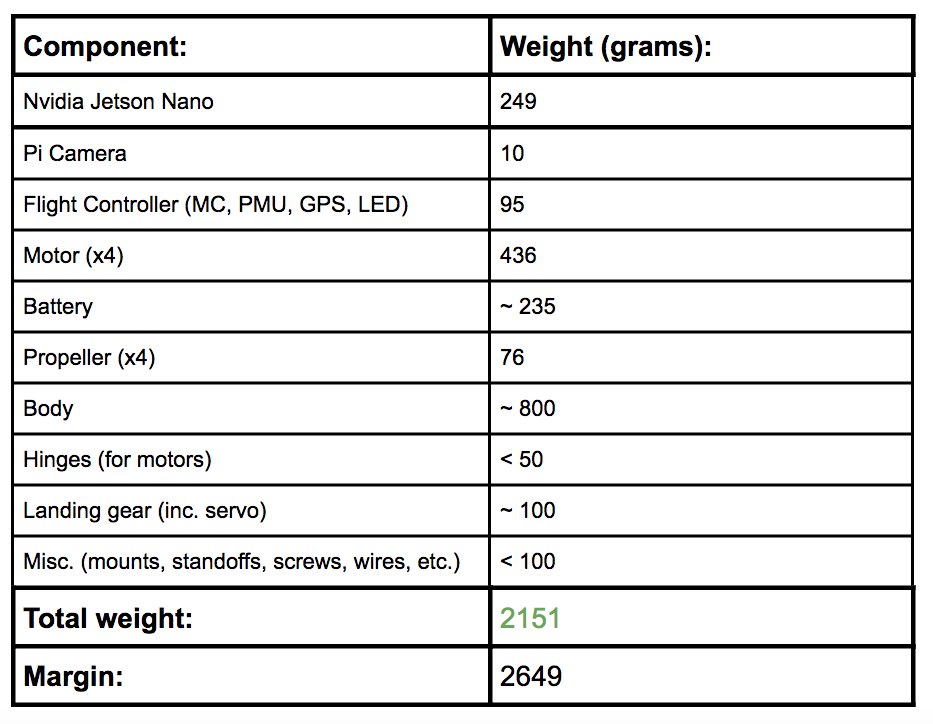
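COCO's annotation files (for example `annotations/instances_train2017.json`) keep a top-level `categories` list in which every entry has an `id`, a `name` and a `supercategory`. The sketch below works on a two-entry excerpt in that shape rather than on the full file; the helper names `add_category` and `categories_by_supercategory`, and the "life jacket" class, are hypothetical examples and not part of any COCO tool.

```python
import json

# A tiny excerpt in the shape of a COCO annotation file: each category has
# an integer "id", a "name" and a "supercategory".
coco_excerpt = {
    "categories": [
        {"supercategory": "vehicle", "id": 9, "name": "boat"},
        {"supercategory": "animal", "id": 18, "name": "dog"},
    ]
}


def add_category(dataset, name, supercategory):
    """Append a new category with the next unused id and return that id."""
    categories = dataset["categories"]
    if any(c["name"] == name for c in categories):
        raise ValueError(f"category {name!r} already exists")
    new_id = max((c["id"] for c in categories), default=0) + 1
    categories.append({"supercategory": supercategory, "id": new_id, "name": name})
    return new_id


def categories_by_supercategory(dataset):
    """Group category names under their supercategory."""
    groups = {}
    for c in dataset["categories"]:
        groups.setdefault(c["supercategory"], []).append(c["name"])
    return groups


# "life jacket" is a hypothetical example of a class we might add.
new_id = add_category(coco_excerpt, "life jacket", "accessory")
print(new_id)
print(categories_by_supercategory(coco_excerpt))
print(json.dumps(coco_excerpt["categories"][-1]))
```

Adding a category entry only defines the label: the model can learn to detect it only once we also add images with bounding-box annotations that reference the new `id` and then retrain. With the full file loaded via `json.load`, the next free id would come after 90.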

We have also been working on the technical requirements and a weight budget, making sure that all components stay within the weight limit of our system. For our drone to be able to fly, it has to weigh less than 4.8 kilograms, because each of the four E800 motors can lift a maximum of 1200 grams, which adds up to 4800 grams.
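The limit is the sum of the maximum lift of the four motors. Written out together with the thrust-to-weight ratio, which describes how much lift is left over for climbing and maneuvering (a commonly quoted hobbyist rule of thumb is roughly 2:1; this is an assumption here, not one of our stated requirements):

$$
T_{\max} = 4 \times 1200\ \text{g} = 4800\ \text{g},
\qquad
\frac{T_{\max}}{m_{\text{drone}}} > 1 \;\Longleftrightarrow\; m_{\text{drone}} < 4800\ \text{g},
\qquad
\frac{T_{\max}}{m_{\text{drone}}} \ge 2 \;\Longleftrightarrow\; m_{\text{drone}} \le 2400\ \text{g}.
$$

At exactly 4800 g the motors could at best hold the drone in a hover at full throttle, leaving nothing for control, which is why staying well under the limit matters.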

We have therefore prepared a list of all components and their weights to make sure that we stay within safe limits. We have added a safety margin to the body and landing gear, as their weights may change after testing and as we find improved solutions. This research is important for reducing the risks of flying, since we know where our limits are. If all our calculations are correct, we currently have a large amount of weight headroom. This keeps the drone lightweight and more agile in flight. It will also make the drone more attractive to potential customers, as low weight is highly valued in the drone industry, especially for military use.

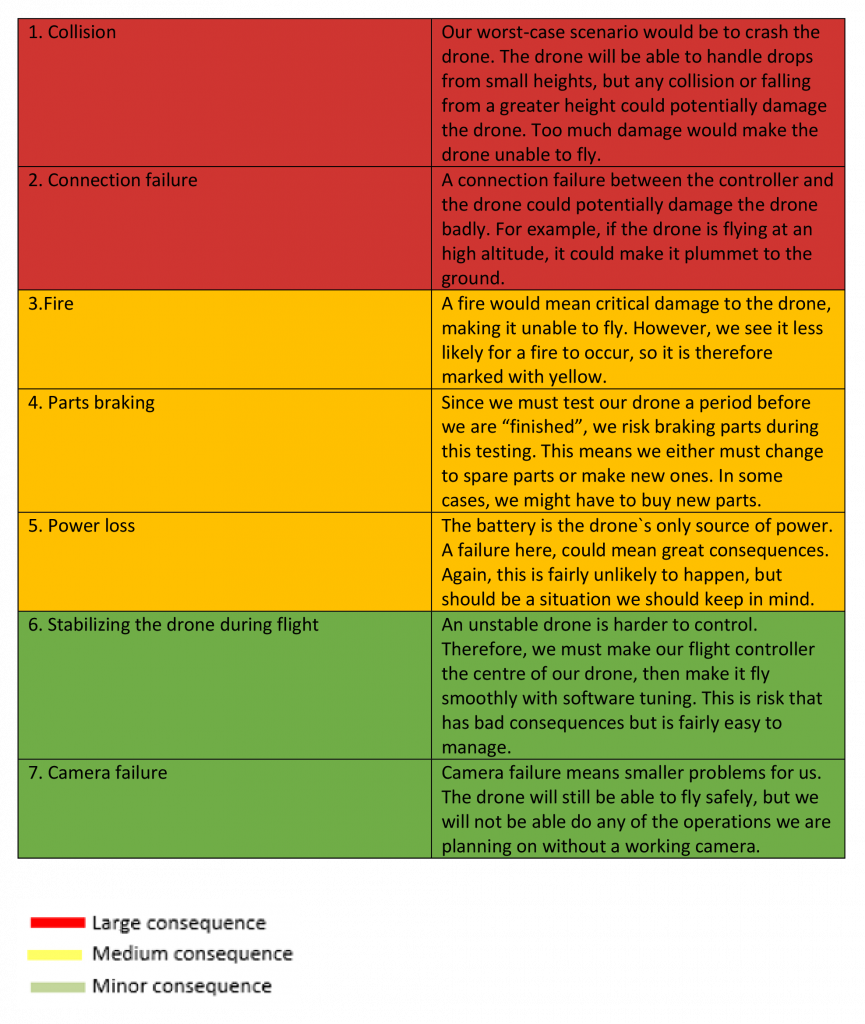
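The component list boils down to a sum with safety margins compared against the 4800 g limit. A minimal sketch, assuming each margin is expressed as a fraction of the component's weight; the component names, weights and the 20 % margins are placeholders, not the values from our list.

```python
from dataclasses import dataclass

# Four E800 motors with up to 1200 g of lift each (from our requirements).
MAX_TAKEOFF_G = 4 * 1200


@dataclass
class Component:
    name: str
    weight_g: float
    margin: float = 0.0  # extra fraction reserved for parts whose weight may change


def weight_budget(components, limit_g=MAX_TAKEOFF_G):
    """Return (total weight incl. margins, headroom to the limit), both in grams."""
    total = sum(c.weight_g * (1 + c.margin) for c in components)
    return total, limit_g - total


# Placeholder values for illustration only; the real figures are in our component list.
parts = [
    Component("body", 1000, margin=0.2),
    Component("landing gear", 200, margin=0.2),
    Component("electronics", 250),
    Component("battery", 800),
]

total, headroom = weight_budget(parts)
status = "within" if headroom > 0 else "OVER"
print(f"total {total:.0f} g, headroom {headroom:.0f} g ({status} the lift limit)")
```

Keeping the margin per component means only the uncertain parts (here the body and landing gear) are padded, instead of inflating the whole budget.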

This week we also discussed the risks involved in our project. Risk is always at the back of our minds: it can never be eliminated entirely, but we are always working on minimizing it. There are many risks to consider when working on such a complex system, and with little to no experience, many of them could turn out to be costly. We don’t want to spend all our money and resources on solving complications that could have been avoided. Every part or subsystem we design is followed by a risk analysis. It is not always a separate process, but with every suggestion we come up with, we consider which problems we might encounter and how we can solve them.

Often we can accept some risk in an idea, but at other times we have to abandon the idea and come up with another one because the probability of the risk is too high. Where it is possible to do something about a risk, we also come up with a solution for it. For example, collision is a major risk: the drone could fly into a wall and crash, which would be a catastrophe for our project.

Understanding risk is in many cases just as important as understanding the main problem. The first impression you get of the risks in your design might not reflect what the risk is really about. Sometimes the consequences will be greater than you first thought; other times they will be smaller. In both situations, resources must be allocated correctly to meet deadlines and stay within budget. We have created the risk assessment table below to illustrate some of our major and minor risks.

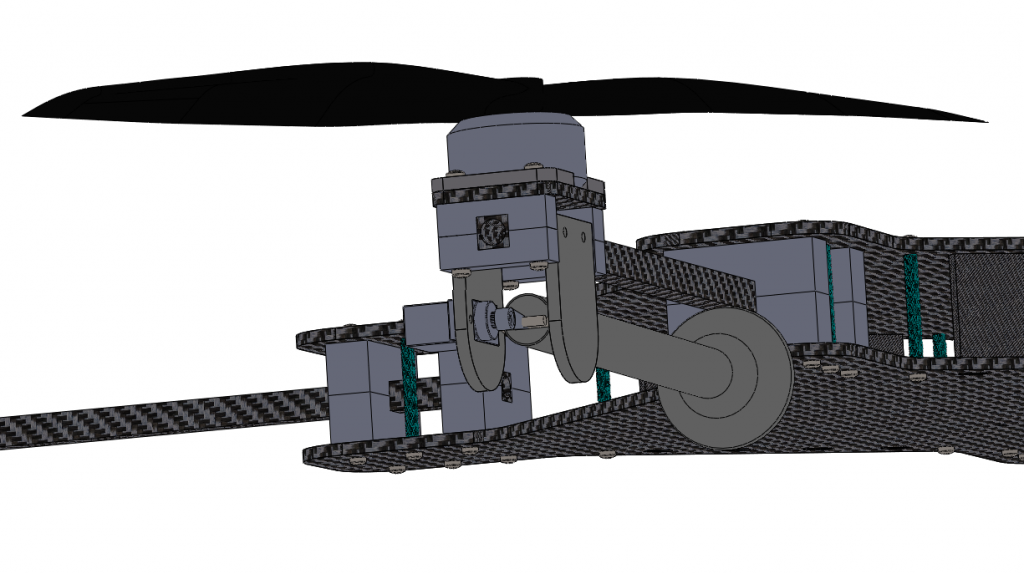

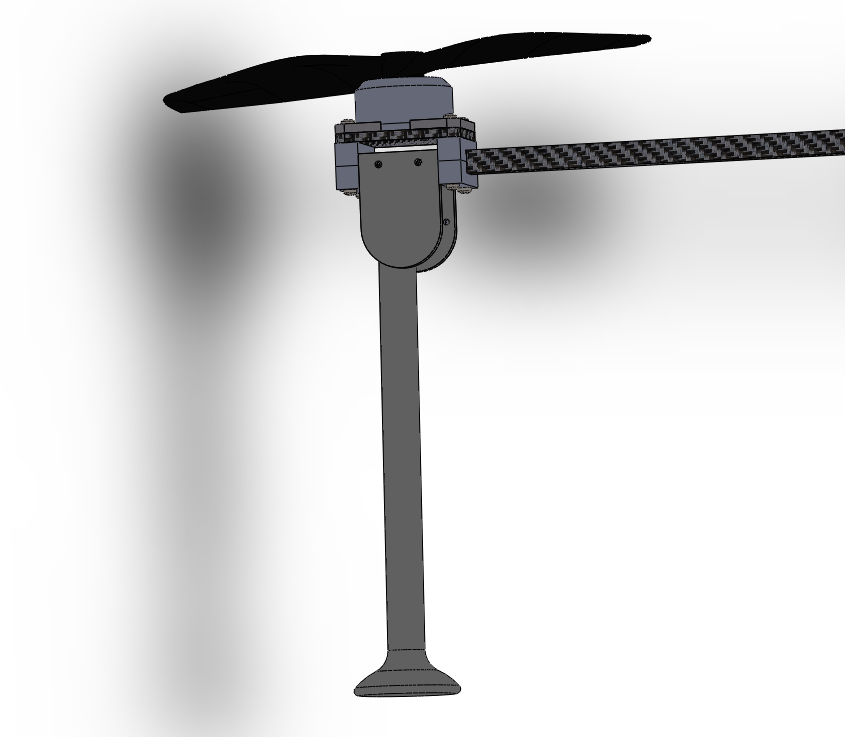
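Risk assessment tables like ours are often scored as probability × consequence. A minimal sketch, assuming 1 to 5 scales for both and hypothetical thresholds for when we accept a risk, mitigate it, or drop the idea; the scales in our actual table may differ, and apart from the wall collision, the entries are invented examples.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    description: str
    probability: int  # 1 (rare) .. 5 (almost certain)
    consequence: int  # 1 (negligible) .. 5 (catastrophic)

    @property
    def score(self) -> int:
        return self.probability * self.consequence


def decide(risk: Risk, accept_max: int = 6, reject_min: int = 20) -> str:
    """Map a risk score to an action; the thresholds are illustrative assumptions."""
    if not (1 <= risk.probability <= 5 and 1 <= risk.consequence <= 5):
        raise ValueError("probability and consequence must be in 1..5")
    if risk.score <= accept_max:
        return "accept"
    if risk.score >= reject_min:
        return "reject idea"
    return "mitigate"


# Hypothetical entries; only the wall collision comes from our discussion.
risks = [
    Risk("collision with a wall", probability=3, consequence=5),
    Risk("loose cable on a servo", probability=2, consequence=2),
    Risk("flying indoors without collision avoidance", probability=4, consequence=5),
]

# Print the highest-scoring risks first.
for r in sorted(risks, key=lambda r: r.score, reverse=True):
    print(f"{r.score:>2}  {decide(r):<12} {r.description}")
```

The middle band ("mitigate") corresponds to risks we keep but pair with a solution, as with the collision example.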

Another major improvement since last week is the landing feet that we plan to attach underneath the drone. We came up with a landing gear design last week, but we ran into some issues and decided to do another iteration with significant changes, which resulted in a new solution. We are planning to mount one landing foot underneath each of the four drone motors and attach a servo motor to each foot so it can rotate. All four landing feet will rotate inwards after takeoff and rotate back out again before the drone lands.

We believe this solution will work much better than our last design iteration and we are aiming to 3D-print four hinges to hold the landing feet and to keep them attached to the drone motors. We already have the landing feet ready, so we just need to work on the solution to mount them properly.
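The retract/extend behavior could be driven by a small piece of logic in software. A minimal sketch, assuming altitude is available in meters and that the servos take an angle in degrees; the class name `LandingFeet`, both altitude thresholds and both servo angles are hypothetical values. It uses two thresholds (hysteresis) so the feet do not toggle back and forth while the drone hovers near a single cut-off altitude.

```python
RETRACTED_DEG = 90  # hypothetical servo angle with the feet rotated inwards
EXTENDED_DEG = 0    # hypothetical servo angle with the feet pointing down


class LandingFeet:
    """Choose one servo angle for all four landing feet based on altitude."""

    def __init__(self, retract_above_m=2.0, extend_below_m=1.0):
        if extend_below_m >= retract_above_m:
            raise ValueError("extend_below_m must be lower than retract_above_m")
        self.retract_above_m = retract_above_m
        self.extend_below_m = extend_below_m
        self.retracted = False  # feet start extended, standing on the ground

    def update(self, altitude_m, landing_requested=False):
        """Return the servo angle to command to every foot for this altitude."""
        if landing_requested or altitude_m < self.extend_below_m:
            self.retracted = False  # extend before touching down
        elif altitude_m > self.retract_above_m:
            self.retracted = True   # safely airborne: rotate the feet inwards
        # Between the two thresholds the previous state is kept (hysteresis).
        return RETRACTED_DEG if self.retracted else EXTENDED_DEG


feet = LandingFeet()
angles = [feet.update(a) for a in (0.0, 1.5, 2.5, 1.5, 0.8)]
print(angles)  # [0, 0, 90, 90, 0]
```

The `landing_requested` flag lets the feet extend at any altitude once a landing starts, rather than relying only on the lower threshold.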

For the next sprint we are planning to test the components and unpack the flight controller to see how it works with the rest of our components. We will take measurements to calculate the power consumption and get hold of a battery with enough capacity to supply our single-board computer and all the other components. We are also going to test the TensorFlow library to explore what it offers and integrate it into our own system. This is a large task, because we need to wrap the TensorFlow implementation in our ROS setup to ensure communication between the machine learning part and the rest of the drone. We are very excited about this, as we are learning something new every day.
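Once the power draw has been measured, choosing a battery follows from P = V · I and the pack capacity. A rough sketch, assuming a 6S LiPo pack (22.2 V nominal), that only 80 % of the capacity is used to protect the battery, and placeholder power figures for each load; none of these numbers are our measurements.

```python
# Rough battery sizing. All figures are placeholders, not our measured values.
NOMINAL_VOLTAGE_V = 22.2  # assumption: 6S LiPo, 6 x 3.7 V
USABLE_FRACTION = 0.8     # assumption: avoid draining the pack below ~20 %


def total_current_a(loads_w, voltage_v=NOMINAL_VOLTAGE_V):
    """Average battery current for the given loads, from P = V * I."""
    return sum(loads_w.values()) / voltage_v


def flight_time_min(capacity_mah, current_a, usable_fraction=USABLE_FRACTION):
    """Estimated flight time in minutes using only the usable part of the pack."""
    usable_ah = capacity_mah / 1000 * usable_fraction
    return usable_ah / current_a * 60


def required_capacity_mah(target_min, current_a, usable_fraction=USABLE_FRACTION):
    """Pack capacity needed to fly target_min minutes at current_a amps."""
    needed_ah = current_a * target_min / 60
    return needed_ah / usable_fraction * 1000


loads_w = {
    "motors (hover, all four)": 420.0,            # placeholder
    "Jetson Nano": 10.0,                          # placeholder, e.g. its 10 W mode
    "flight controller, sensors, servos": 14.0,   # placeholder
}

current = total_current_a(loads_w)
print(f"average current: {current:.1f} A")
print(f"10000 mAh pack: {flight_time_min(10000, current):.1f} min")
print(f"20 min flight needs: {required_capacity_mah(20, current):.0f} mAh")
```

Real consumption varies with payload, wind and throttle, so an estimate like this is only a starting point for the measurements we plan to take; a larger pack also adds weight to the budget above.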