August & Sander:

Mapping

Sander and I have set a hard deadline: two blog weeks to finish implementing mapping. This deadline is extremely hard, and I kid you not, if we don't meet it I will smash the entire car with Sander inside (without the mecanum wheels, of course; we don't want to owe USN money). I will play “Psycho Bitch” by Henning Gundersen at max volume, and as soon as I get to perform protected actions inside a protected object, which of course are non-preemptive, I will lock and destroy the car completely and give up engineering for life. This is atomic, and I will fulfill my task. We will then present the failed project on the 19th of December in shame. You heard it here first.

Driving the car fully wirelessly w/ cover on:

August:

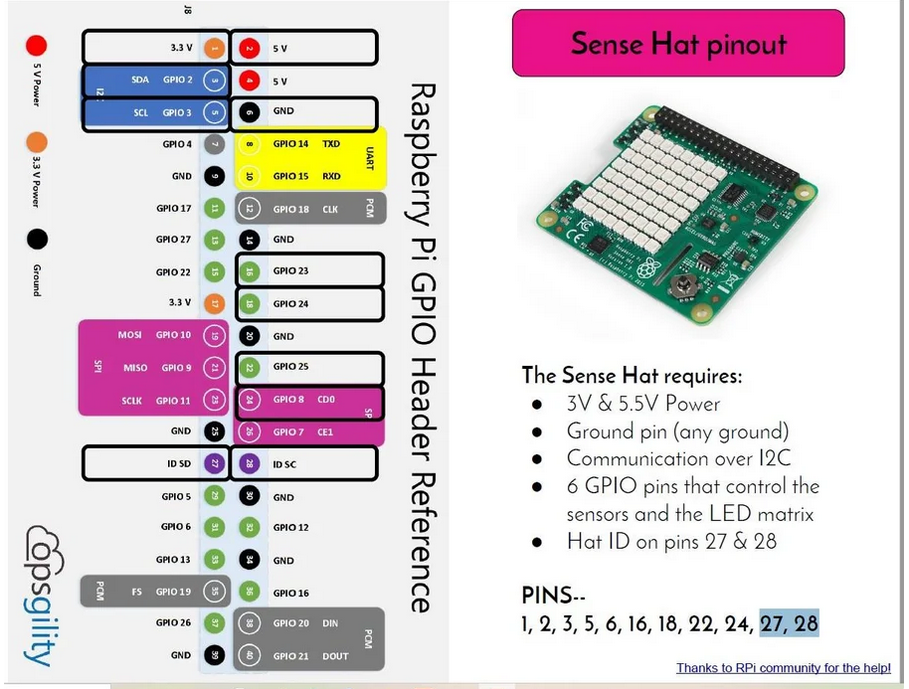

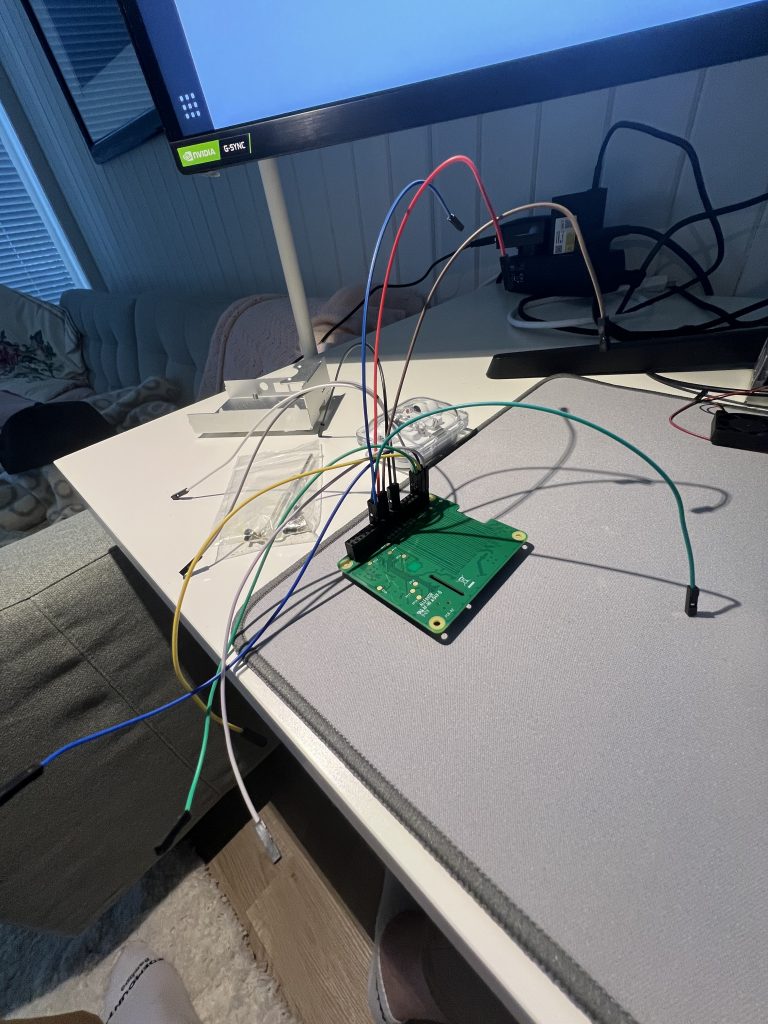

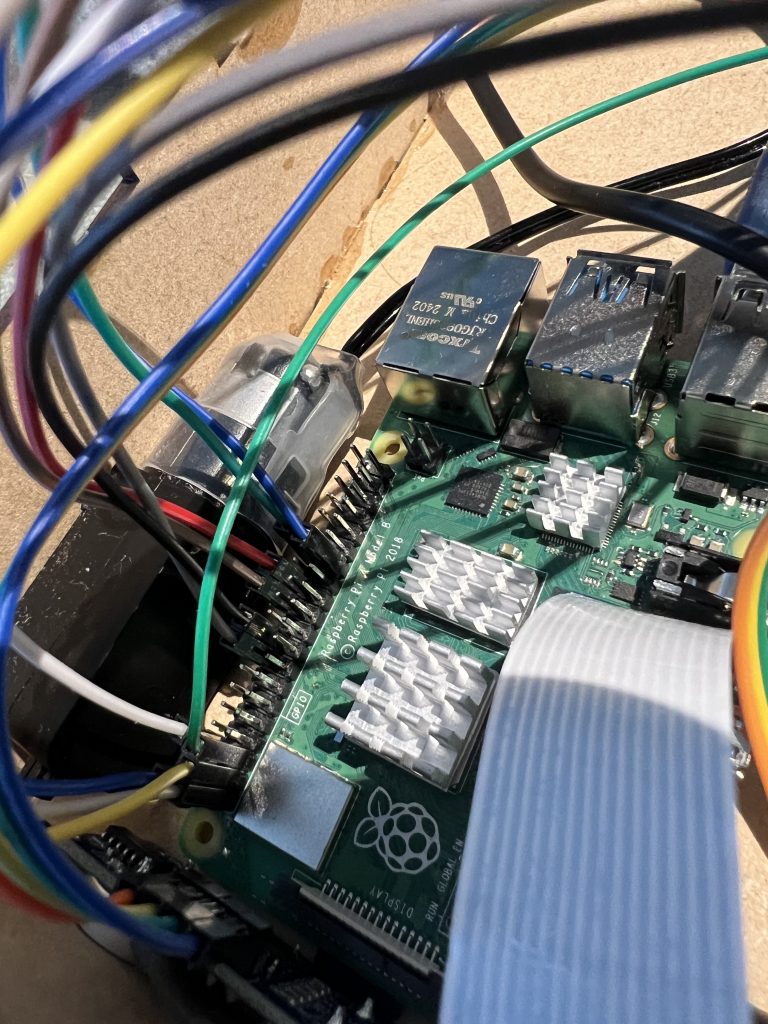

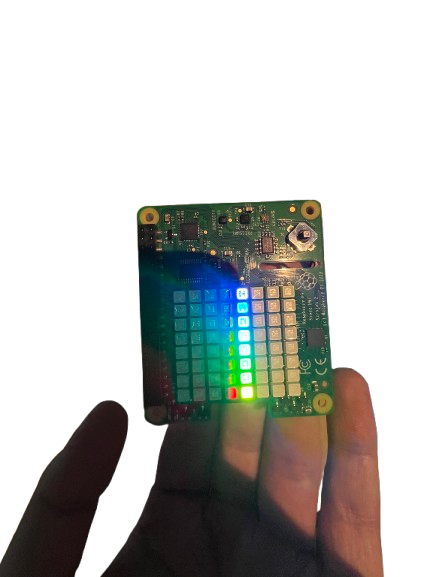

SenseHAT <-> jumper cables <-> RPi4

We have LiDAR and IMU data, and we are the right guys for the job. I am currently in the aftermath of integration hell, and even though my nerves are hanging by a thread (with no mutex), I am starting this week by connecting the SenseHAT to the RPi4 via jumper cables:

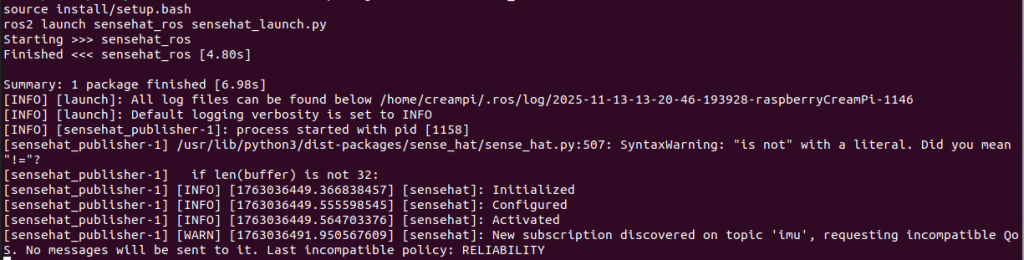

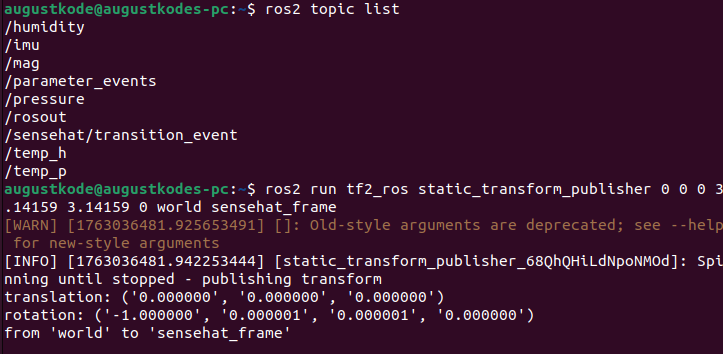

That was 11 pins. I don't think I need all of them, because the LED matrix is not supported on Ubuntu 22.04 anyway, but several people online reported that they couldn't get the SenseHAT to work unless all of these pins were connected. Now that we at least have contact between the RPi4 and the SenseHAT, let's verify it by checking the output of ros2 topic list and whether the IMU data is consistent with the orientation shown in RViz2:
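Comparing the IMU against RViz2 by eye is easier with the heading in degrees than with raw quaternions. The sketch below assumes the SenseHAT driver publishes a standard sensor_msgs/Imu message, whose orientation field is a quaternion (x, y, z, w); the function name is our own, and the conversion is the common ZYX roll/pitch/yaw formula.

```python
from __future__ import annotations

import math


def quaternion_to_euler(x: float, y: float, z: float, w: float) -> tuple[float, float, float]:
    """Convert a unit quaternion (x, y, z, w), e.g. sensor_msgs/Imu.orientation,
    into (roll, pitch, yaw) in radians using the ZYX convention."""
    # Roll: rotation about the x-axis
    roll = math.atan2(2.0 * (w * x + y * z), 1.0 - 2.0 * (x * x + y * y))

    # Pitch: rotation about the y-axis; clamp so rounding error can't push asin out of [-1, 1]
    sinp = max(-1.0, min(1.0, 2.0 * (w * y - z * x)))
    pitch = math.asin(sinp)

    # Yaw: rotation about the z-axis, i.e. the heading that matters for 2D mapping
    yaw = math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    return roll, pitch, yaw


# Example: the quaternion for a 90° counterclockwise rotation about z
half = math.radians(90) / 2
roll, pitch, yaw = quaternion_to_euler(0.0, 0.0, math.sin(half), math.cos(half))
print(f"roll={math.degrees(roll):.1f} pitch={math.degrees(pitch):.1f} yaw={math.degrees(yaw):.1f}")
```

Turning the car 90° counterclockwise by hand should move yaw by roughly +90° if the IMU axes line up with the car's frame; a sign flip or a constant offset hints at a mounting rotation that the transform has to account for.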

It went surprisingly well, but to be fair, the prep work has been pretty thorough. I will mount the SenseHAT directly under the LiDAR, in the ceiling of the car.

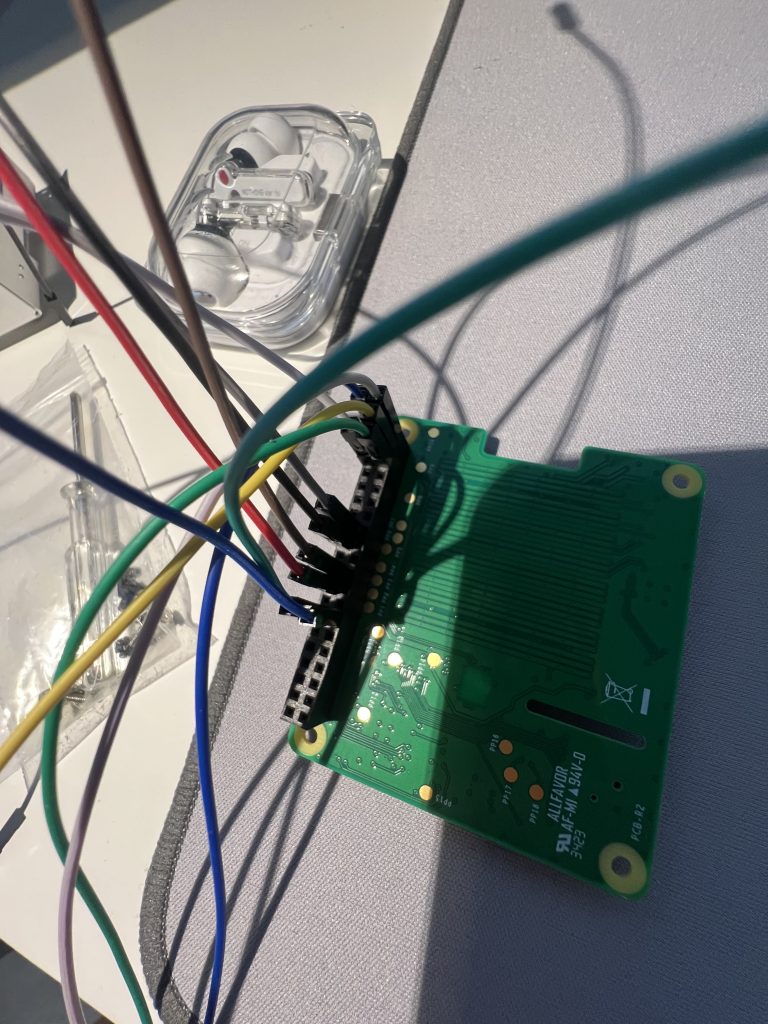

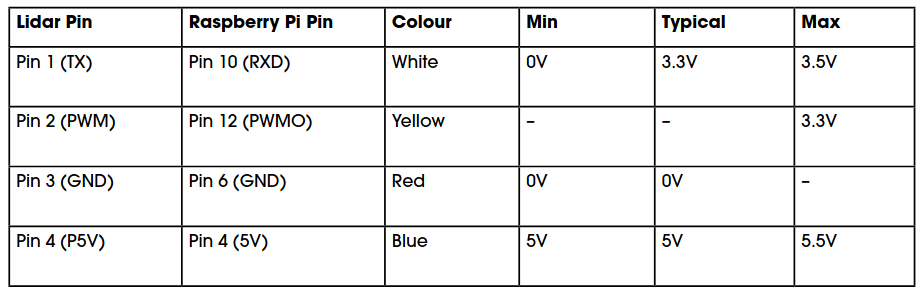

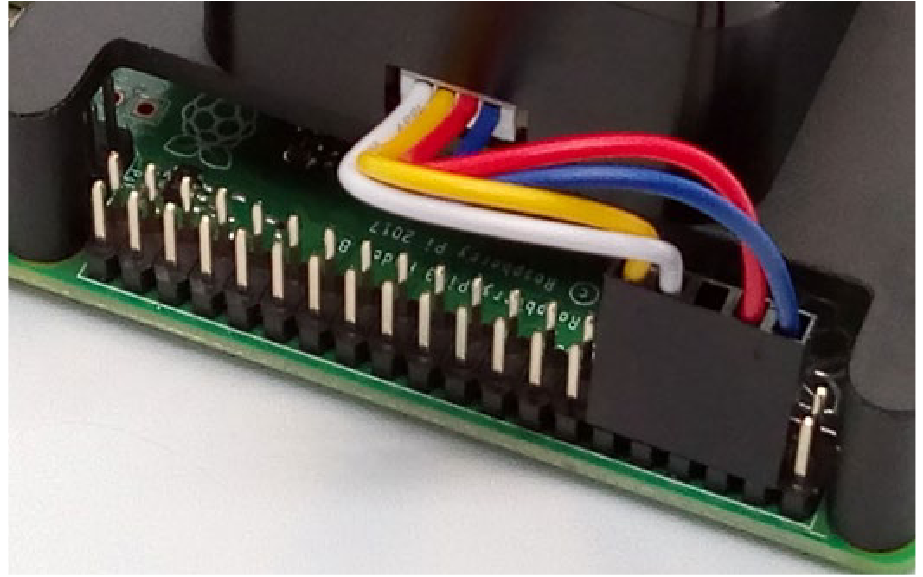

LiDAR <-> jumper cables <-> RPi4

Pretty much the same thing here:

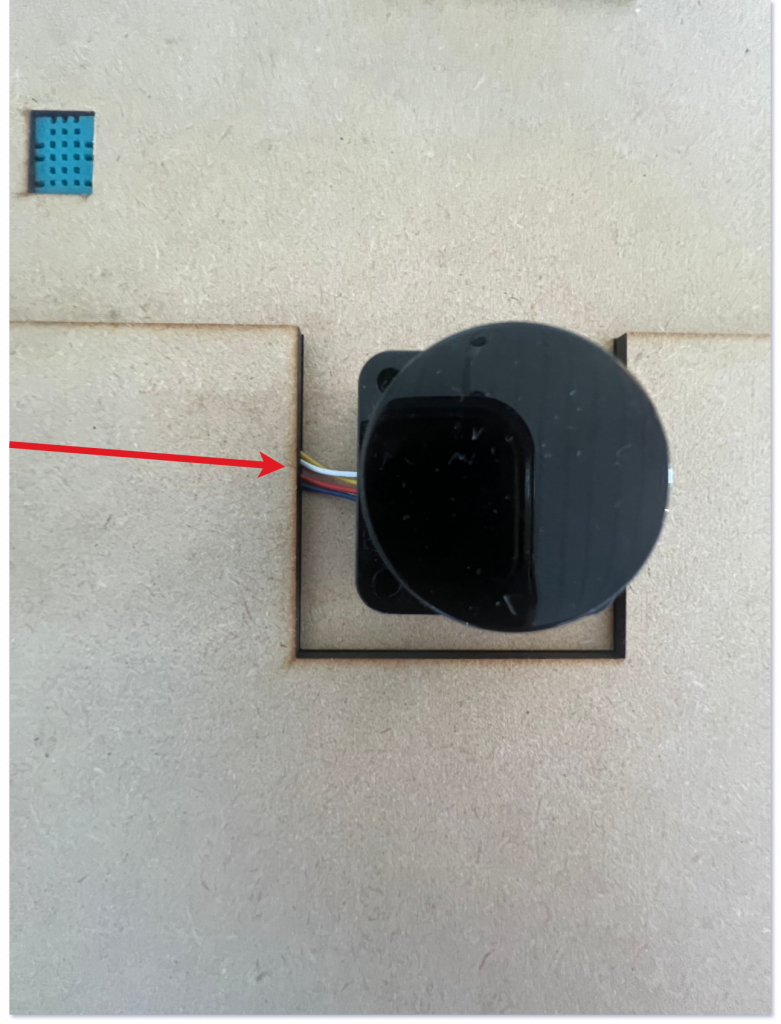

Making space for LiDAR jumper cables

Yes! It was supposed to take 5 minutes, but it ended in the emergency room at Kongsberg hospital. I wanted to save 70 kr and refused to buy gloves. I only had a utility knife (tapetkniv). Well, the utility knife ended up in my finger. I was afraid my days in the terminal were over, but luckily they're not. My finger is still attached to my body. Collage:

This task broke its deadline by far. Luckily, the RTOS uses dynamic priority scheduling, which makes it pretty fast at handling new tasks with a close deadline. I also had to get a tetanus shot. It sucked!

Lesson learned: if it can't be solved with a curl command in the terminal, I will ask Richard Thue for help. I was literally supposed to cut away 3 mm of material to make space for the wires. Instead, I cut the knife 3 mm into my finger. Luckily, my finger is ready for more Vim. Not being able to type commands at the tempo I breathe in the terminal on a Friday night is not my cup of tea.

Fixing the log path in the GUI and mounting fans inside the car

I fixed the log path in the GUI, and we mounted fans inside the car.

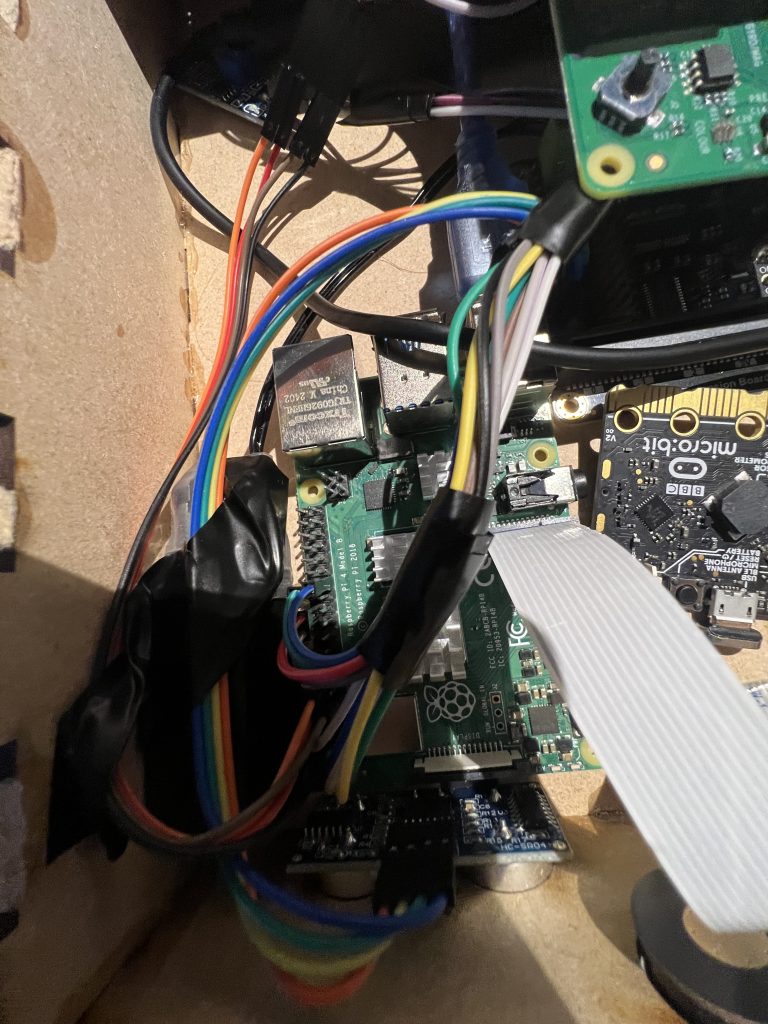

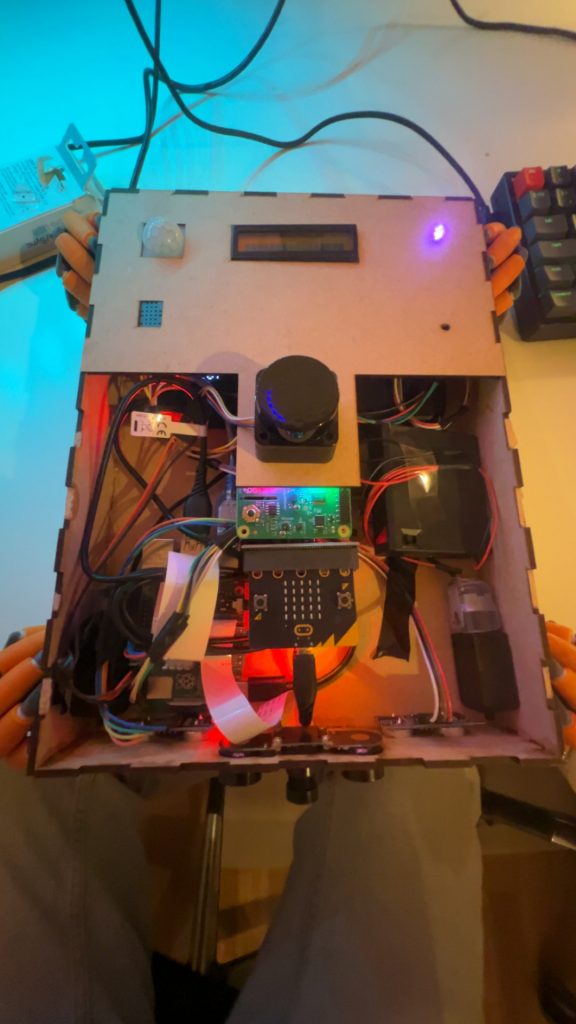

Lining everything up nicely in the car:

I made space for everything to fit in the car with the top on.

Mapping:

Working with Sander on mapping. I assisted with the IMU topic and data. See Sander's section for the full story.

Arduino topics malfunction:

The Arduino topics were the first thing that worked, and now they don't. I must debug them next week.

Next week:

- Continue on mapping

- Autonomous

- Fix Arduino sensors

Sander:

This week we had our first completely wireless drive, using the onboard power pack (see August’s video). The car drives a bit slowly on the power pack, but that doesn’t matter much; the maneuvering is flawless. We also got the angle:bit bracket to mount the micro:bit lying down, so we are able to close the lid. As for progress on SLAM, we are closing in. Now that we have assembled and integrated the whole system, we can conduct real-world testing and debugging.

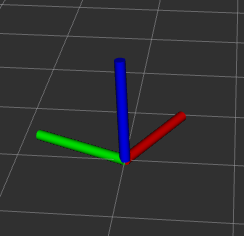

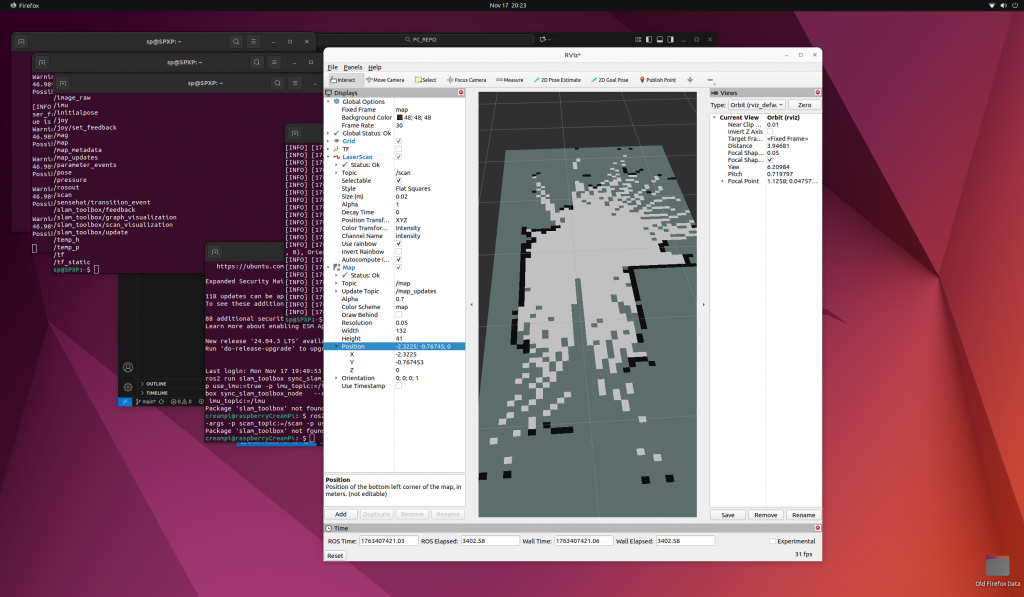

I had some trouble getting the map to show up, and I am now working on getting slam_toolbox to use the IMU data correctly for yaw. This is not the best solution, but it’s what we have without wheel encoders.

The picture shows me visualizing the map in RViz using a static position transform. This at least displayed the map, but I had difficulty getting slam_toolbox to interpret the data correctly. After some research on how to fix this, I will continue with IMU + LiDAR odometry to see if that works; this is what I envisioned from early on would be necessary.
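Why a static transform is only a stopgap can be shown with the usual TF chain map → odom → base_link → laser (frame names as in ROS REP 105; slam_toolbox publishes map → odom itself and expects something else to provide odom → base_link). The sketch uses plain 2D transforms instead of tf2, and the laser offset and odometry values are made up: if odom → base_link never changes, every scan is placed as if the car were standing still.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Transform2D:
    """A planar rigid transform from a child frame into its parent frame."""
    x: float      # translation in metres
    y: float
    theta: float  # rotation in radians, counterclockwise positive

    def compose(self, child: Transform2D) -> Transform2D:
        """Chain parent->self with self->child to get parent->child."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Transform2D(
            self.x + c * child.x - s * child.y,
            self.y + s * child.x + c * child.y,
            self.theta + child.theta,
        )

    def apply(self, px: float, py: float) -> tuple[float, float]:
        """Map a point expressed in the child frame into the parent frame."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return self.x + c * px - s * py, self.y + s * px + c * py


# Hypothetical values for the chain map -> odom -> base_link -> laser
map_to_odom = Transform2D(0.0, 0.0, 0.0)    # identity here; slam_toolbox would normally publish it
base_to_laser = Transform2D(0.1, 0.0, 0.0)  # LiDAR assumed 10 cm ahead of base_link

# Static odom -> base_link: every scan is drawn as if the car never moved
frozen = map_to_odom.compose(Transform2D(0.0, 0.0, 0.0)).compose(base_to_laser)
# With an odometry estimate (2 m forward, turned 90° left) the same return lands elsewhere
moving = map_to_odom.compose(Transform2D(2.0, 0.0, math.pi / 2)).compose(base_to_laser)

hit = (1.0, 0.0)  # a LiDAR return 1 m straight ahead of the sensor
print("static odom:", frozen.apply(*hit))    # about (1.1, 0.0)
print("with odometry:", moving.apply(*hit))  # about (2.0, 1.1)
```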

After a few errors, the map was finally visualized in RViz, though not everything worked exactly as planned. As expected, using only the IMU for orientation did not give the best measurements. We will probably have to use some sort of LiDAR scan matching to counteract the IMU drift; whether this will work, I don’t know. Everything would have been easier with wheel encoders. I will try the robot_localization package for ROS 2, which uses an extended Kalman filter to fuse the LiDAR and IMU data, giving a much better position estimate for slam_toolbox.
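The idea behind fusing scan matching with the IMU can be shown in one dimension. This is not robot_localization's implementation (its EKF estimates a full 2D/3D state from whatever sensors are configured); it is a plain linear Kalman filter on yaw alone, the class and function names are our own, and the bias, noise levels and rates in simulate are invented. It assumes scan matching gives a yaw measurement whose error does not grow with the gyro bias.

```python
from __future__ import annotations

import random


class YawKalmanFilter:
    """1D Kalman filter on yaw only: predict by integrating the gyro rate,
    correct with a yaw measurement from LiDAR scan matching.
    Angle wrap-around is ignored to keep the sketch short."""

    def __init__(self, q: float, r: float, yaw0: float = 0.0, p0: float = 0.01) -> None:
        self.yaw = yaw0  # yaw estimate (rad)
        self.p = p0      # variance of the estimate (rad^2)
        self.q = q       # variance added per prediction step (models gyro drift/noise)
        self.r = r       # variance of the scan-matching yaw measurement

    def predict(self, yaw_rate: float, dt: float) -> None:
        self.yaw += yaw_rate * dt  # dead-reckon with the IMU gyro
        self.p += self.q           # uncertainty grows while we only trust the gyro

    def update(self, yaw_meas: float) -> None:
        k = self.p / (self.p + self.r)         # Kalman gain: how much to trust the measurement
        self.yaw += k * (yaw_meas - self.yaw)  # pull the estimate toward the measurement
        self.p *= 1.0 - k                      # uncertainty shrinks after the correction


def simulate(seed: int = 0, steps: int = 300, dt: float = 0.1) -> tuple[float, float, float]:
    """Made-up scenario: constant turn, biased gyro, noisy scan matching every 10 steps."""
    rng = random.Random(seed)
    true_rate, gyro_bias, scan_noise = 0.1, 0.02, 0.05  # rad/s, rad/s, rad
    true_yaw = raw_yaw = 0.0
    kf = YawKalmanFilter(q=1e-4, r=scan_noise ** 2)
    for step in range(1, steps + 1):
        true_yaw += true_rate * dt
        gyro = true_rate + gyro_bias  # the IMU reports a slightly wrong rate
        raw_yaw += gyro * dt          # IMU-only heading drifts without bound
        kf.predict(gyro, dt)
        if step % 10 == 0:            # a scan-match result every 10 IMU samples
            kf.update(true_yaw + rng.gauss(0.0, scan_noise))
    return true_yaw, raw_yaw, kf.yaw


true_yaw, raw_yaw, fused_yaw = simulate()
print(f"IMU-only error: {abs(raw_yaw - true_yaw):.3f} rad, fused error: {abs(fused_yaw - true_yaw):.3f} rad")
```

The filter does not remove the bias; it keeps the heading error bounded because every scan match pulls the estimate back. Adding the gyro bias as a second state would shrink the remaining error further.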

Next week:

- Get the mapping right (robot_localization)

- “dumb” autonomous mode up and running

- More integration, set up easy launch commands/files.
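One possible reading of a “dumb” autonomous mode is a purely reactive rule: drive forward until the LiDAR sees something close ahead, then turn toward the freer side. The function below is a hypothetical sketch of that rule, not something we have written yet, and the thresholds are made up. In a ROS 2 node, ranges, angle_min and angle_increment would come from a sensor_msgs/LaserScan message, and the returned pair would fill linear.x and angular.z of a geometry_msgs/Twist.

```python
from __future__ import annotations

import math


def dumb_autonomy(
    ranges: list[float],
    angle_min: float,
    angle_increment: float,
    stop_dist: float = 0.5,  # metres; an obstacle closer than this in front stops the car
    cruise: float = 0.2,     # forward speed in m/s when the path is clear
    turn: float = 0.8,       # turn rate in rad/s while avoiding
) -> tuple[float, float]:
    """Return (linear_x, angular_z) from one LiDAR sweep.

    Angles are assumed to be in radians within [-pi, pi], with 0 straight ahead
    and positive angles to the left (counterclockwise)."""
    front_half = math.radians(30)
    front, left, right = [], [], []
    for i, r in enumerate(ranges):
        if not math.isfinite(r) or r <= 0.0:
            continue  # drop invalid returns (inf, nan, 0)
        a = angle_min + i * angle_increment
        if abs(a) <= front_half:
            front.append(r)
        elif front_half < a <= math.pi / 2:
            left.append(r)
        elif -math.pi / 2 <= a < -front_half:
            right.append(r)
        # returns from behind the car are ignored

    if min(front, default=math.inf) > stop_dist:
        return cruise, 0.0  # path ahead is clear: drive straight
    # Blocked: stop and rotate toward the side whose nearest obstacle is farther away
    if min(left, default=math.inf) >= min(right, default=math.inf):
        return 0.0, turn
    return 0.0, -turn
```

This only rotates in place, so it ignores the sideways motion the mecanum wheels allow; strafing away from obstacles would be a natural extension.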

Sondre

Group trouble in another course :(

I have a group assignment in another course, but everyone in the group has left me, so I’m stuck with all the work by myself. This affects how much time I can spend on the project this week and next week. I hope to get more done next week 🙂

Oliver

This week, I worked with the Swift Navigation GPS, but I ran into issues during setup. I discovered several problems with the UART settings, which I managed to correct. However, the GPS was getting a weak signal and could not connect to the satellites. I tried several different approaches, but I still haven’t been able to get it to work. I also tried adjusting the NMEA GGA, RMC, and GSV messages, but it didn’t help.
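To tell a weak-signal problem apart from a configuration problem, it can help to look at what the receiver itself reports in its GGA messages: the fix quality and the number of satellites in use. The sketch below parses a standard NMEA 0183 GGA sentence and verifies its checksum; it assumes GGA output is enabled on the UART, the function names are our own, and reading the serial port itself (for example with pyserial) is left out.

```python
from __future__ import annotations

from functools import reduce

# GGA fix-quality codes from the NMEA 0183 standard (subset)
FIX_QUALITY = {0: "no fix", 1: "GPS fix", 2: "DGPS fix", 4: "RTK fixed", 5: "RTK float"}


def nmea_checksum(body: str) -> int:
    """NMEA checksum: XOR of every character between '$' and '*'."""
    return reduce(lambda acc, ch: acc ^ ord(ch), body, 0)


def parse_gga(sentence: str) -> dict | None:
    """Return fix quality, satellites in use and HDOP from a GGA sentence,
    or None if it is malformed, not GGA, or fails the checksum."""
    sentence = sentence.strip()
    if not sentence.startswith("$") or "*" not in sentence:
        return None
    body, _, given = sentence[1:].partition("*")
    try:
        if nmea_checksum(body) != int(given, 16):
            return None
    except ValueError:
        return None  # checksum field missing or not hex
    fields = body.split(",")
    # fields[0] is talker + type (e.g. GPGGA, GNGGA); GGA carries 14 data fields after it
    if len(fields) < 15 or not fields[0].endswith("GGA"):
        return None
    return {
        "fix_quality": int(fields[6] or 0),  # 0 means the receiver has no position fix
        "num_satellites": int(fields[7] or 0),
        "hdop": float(fields[8]) if fields[8] else None,  # horizontal dilution of precision
    }


body = "GPGGA,123519,4807.038,N,01131.000,E,1,08,0.9,545.4,M,46.9,M,,"
line = f"${body}*{nmea_checksum(body):02X}"
info = parse_gga(line)
print(info, FIX_QUALITY.get(info["fix_quality"], "other"))
```

If the UART settings are correct and the receiver still reports fix quality 0 with only a handful of satellites, that would point more toward antenna placement or sky view than toward the serial configuration.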

I haven’t been able to work as much due to other courses and health-related reasons.