August

Plan For the Week

I will continue last week's work:

- Make the GUI amazing

- Continue working with Sander on making the car autonomous

- We will make placeholders for the sensors on the car, and hopefully mount them this week as well

Making the Arduino a ROS2 node

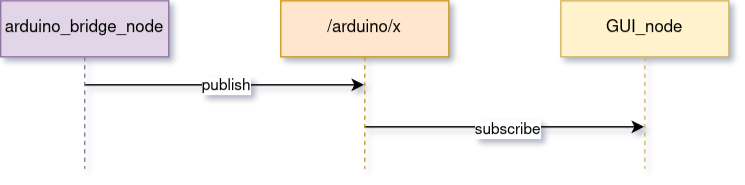

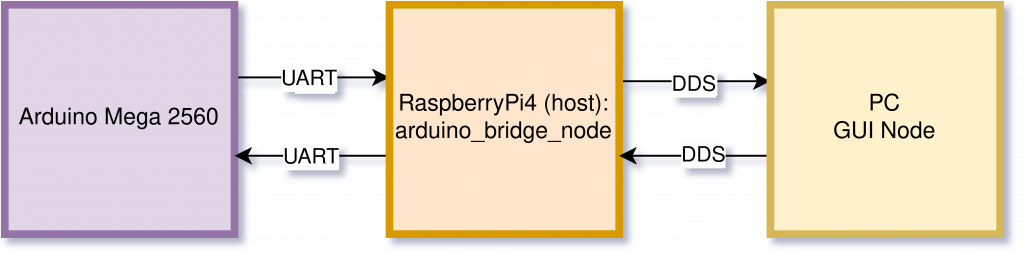

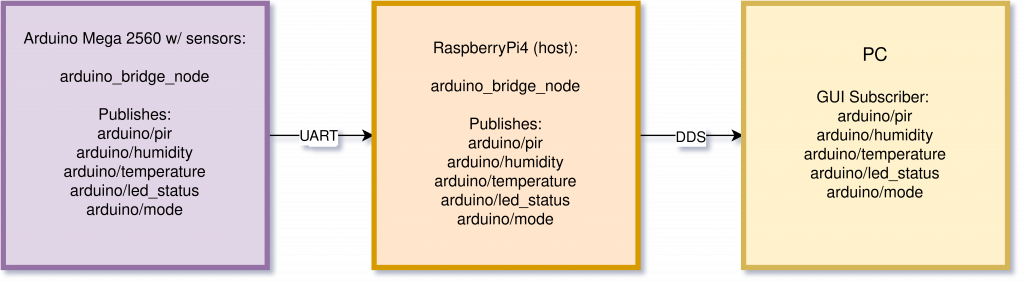

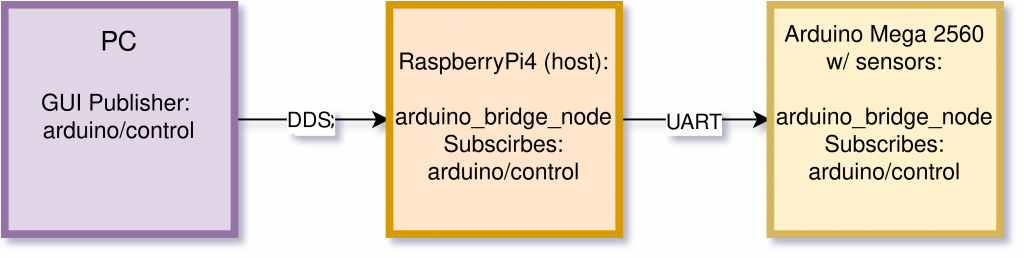

The original plan was to use a Flask server. After working with ROS2, I decided that we would instead make the Arduino a ROS2 node as well. I started by creating a class, ArduinoBridge, in arduino_bridge_node.py. It works as a bridge between the Arduino and the GUI:

- The Arduino sends sensor data (temperature, humidity, PIR, LED, and mode) over UART (serial).

- The ArduinoBridge node running on the Raspberry Pi 4 receives the data, parses it, and publishes it as ROS2 topics.

- The GUI subscribes to those topics to display live data.

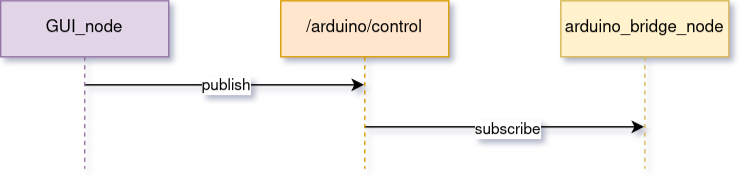

- The GUI also publishes commands (like ON, OFF, AUTO, GUARDIAN) to the topic /arduino/control.

- The ArduinoBridge node subscribes to /arduino/control and forwards those commands to the Arduino through serial.
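The data flow in the list above can be sketched without any ROS2 or serial dependencies. The serial line format (`TEMP:23.5,HUM:41.0,PIR:0,LED:1,MODE:AUTO`), the sensor topic names, and the helper names are assumptions made for illustration. Only the /arduino/control topic and the four commands come from this log. In the real node, the parsed values would be handed to ROS2 publishers and the encoded command written to the serial port.

```python
from typing import Callable, Dict, Union

Value = Union[float, int, str]

# Hypothetical mapping from serial keys to ROS2 topic names (not taken from the project).
TOPIC_FOR_KEY: Dict[str, str] = {
    "TEMP": "/arduino/temperature",
    "HUM": "/arduino/humidity",
    "PIR": "/arduino/pir",
    "LED": "/arduino/led",
    "MODE": "/arduino/mode",
}

# How each assumed field is converted before it would be published.
CONVERTER_FOR_KEY: Dict[str, Callable[[str], Value]] = {
    "TEMP": float,
    "HUM": float,
    "PIR": int,
    "LED": int,
    "MODE": str,
}

# Commands named in the log; the GUI publishes them on /arduino/control.
VALID_COMMANDS = {"ON", "OFF", "AUTO", "GUARDIAN"}


def parse_line(line: str) -> Dict[str, Value]:
    """Parse one assumed 'KEY:value,KEY:value' serial line into {topic: value}."""
    readings: Dict[str, Value] = {}
    for field in line.strip().split(","):
        key, sep, raw = field.partition(":")
        key = key.strip().upper()
        if not sep or key not in TOPIC_FOR_KEY:
            continue  # skip malformed or unknown fields instead of crashing
        try:
            readings[TOPIC_FOR_KEY[key]] = CONVERTER_FOR_KEY[key](raw.strip())
        except ValueError:
            continue  # a corrupted number on the wire should not stop the node
    return readings


def encode_command(command: str) -> bytes:
    """Validate a GUI command and encode it as one newline-terminated serial line."""
    cmd = command.strip().upper()
    if cmd not in VALID_COMMANDS:
        raise ValueError(f"unknown command: {command!r}")
    return (cmd + "\n").encode("ascii")
```

Keeping the parsing and validation in plain functions like these would make them easy to test on a laptop without the Arduino or the Pi connected.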

This made the communication completely two-way. Everything now happens through ROS2. These are the new topics:

Diagrams of the ROS2 nodes:

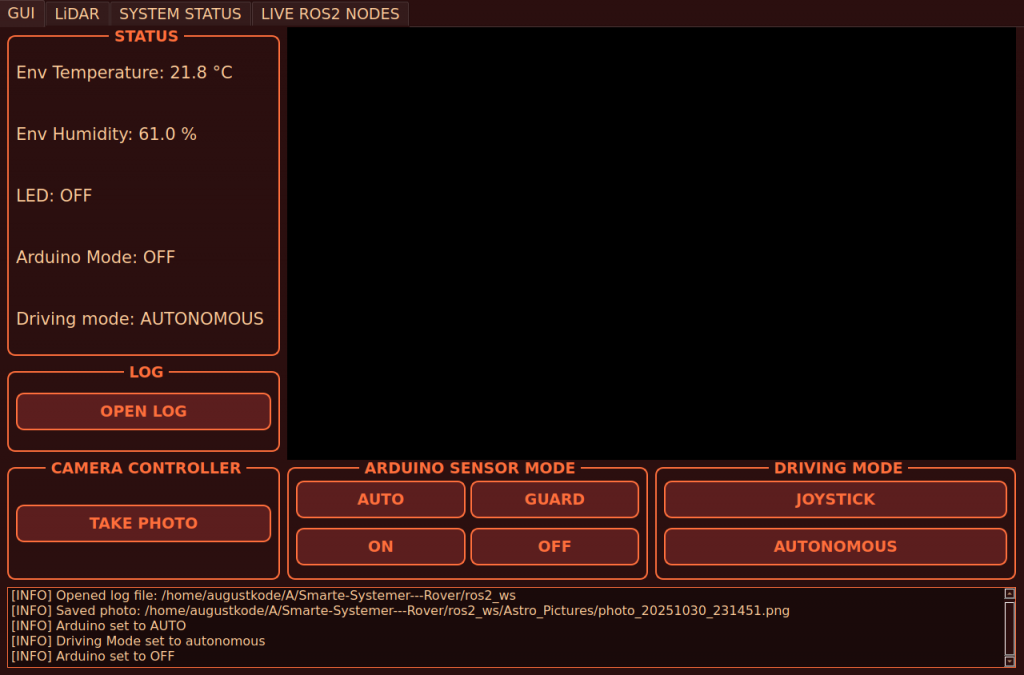

Buttons in GUI to Control Arduino

Previously, button control was handled through HTTP requests to the Flask server. With ROS2 we had to rebuild that logic from scratch, but some things were quite similar. That is what I noticed when comparing the two approaches: the overall structure is much the same, but ROS2 is easier and more robust. Every button now works and has logic behind it. The arduino_bridge_node subscribes to the /arduino/control topic, which the GUI buttons publish on. I made the buttons just like last week, except that I had to connect them through lambdas. A lambda lets each button pass its own argument to the handler when its signal fires.
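A minimal sketch of why the lambda is needed, using a tiny stand-in for a GUI signal so it runs without any toolkit installed. `FakeSignal` and `send_command` are hypothetical names for illustration; in the real GUI the handler would publish on /arduino/control.

```python
from typing import Callable, Dict, List


class FakeSignal:
    """Hypothetical stand-in for a GUI toolkit's 'clicked' signal."""

    def __init__(self) -> None:
        self._slots: List[Callable[[], None]] = []

    def connect(self, slot: Callable[[], None]) -> None:
        self._slots.append(slot)

    def emit(self) -> None:
        for slot in list(self._slots):
            slot()


sent: List[str] = []


def send_command(command: str) -> None:
    """Stand-in for publishing the command on /arduino/control."""
    sent.append(command)


buttons: Dict[str, FakeSignal] = {
    name: FakeSignal() for name in ("ON", "OFF", "AUTO", "GUARDIAN")
}

for name, clicked in buttons.items():
    # Binding `cmd=name` as a default value freezes the command for this button.
    # A plain `lambda: send_command(name)` would look `name` up when called,
    # so every button would send the last value of the loop variable.
    clicked.connect(lambda cmd=name: send_command(cmd))

buttons["AUTO"].emit()
buttons["OFF"].emit()
```

If the GUI is Qt-based (an assumption, since the toolkit is not named here), the clicked signal also passes a checked flag, so the usual form is `lambda checked=False, cmd=name: send_command(cmd)`.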

Arduino Data in the GUI and Logging

Picture of the labels working in the GUI, with every important action in the GUI being logged in the terminal (these commands are also written to file):

All the other sensor data, both from the RPi4 and from the sensors connected to the Arduino, is written directly to file. I reused the logic from last week to get the labels to subscribe to the topics. I also made the camera save pictures to a directory by giving it a path for where to save them. The same goes for the log.
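As a rough sketch of logging to both the terminal and a file, and of building a save path for pictures, something like the following could work. The directory names, the file name `gui.log`, the picture naming scheme, and both function names are assumptions for illustration, not the project's actual layout.

```python
import logging
from datetime import datetime
from pathlib import Path
from typing import Optional


def make_logger(log_dir: Path, name: str = "gui") -> logging.Logger:
    """Create a logger that writes each message to the terminal and to a file."""
    log_dir.mkdir(parents=True, exist_ok=True)
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    if not logger.handlers:  # avoid duplicate lines if called more than once
        fmt = logging.Formatter("%(asctime)s %(levelname)s %(message)s")
        handlers = (
            logging.StreamHandler(),                   # terminal
            logging.FileHandler(log_dir / "gui.log"),  # file (assumed name)
        )
        for handler in handlers:
            handler.setFormatter(fmt)
            logger.addHandler(handler)
    return logger


def picture_path(picture_dir: Path, now: Optional[datetime] = None) -> Path:
    """Return a timestamped file path inside picture_dir for the next capture."""
    now = now or datetime.now()
    return picture_dir / f"capture_{now:%Y%m%d_%H%M%S}.jpg"
```

A call such as `make_logger(Path("logs")).info("Button AUTO pressed")` would then print the line and append it to `logs/gui.log`.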

E-mail Feature if ALARM

This is a feature we had on the Flask server, and it has now been implemented in ROS2. We built it with Python's SMTP library, smtplib: https://mailtrap.io/blog/smtplib/
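A minimal sketch of an alarm e-mail using smtplib from the standard library. The subject line, the function names, and the use of STARTTLS with a login are assumptions; the actual server settings and message text are not given in this log.

```python
import smtplib
from email.message import EmailMessage


def build_alarm_email(sender: str, recipient: str, reason: str) -> EmailMessage:
    """Build the alarm message; kept separate so it can be tested without a server."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = "ALARM from rover"  # assumed subject line
    msg.set_content(f"Alarm triggered: {reason}")
    return msg


def send_alarm_email(msg: EmailMessage, host: str, port: int,
                     username: str, password: str) -> None:
    """Send the message over SMTP, upgrading the connection with STARTTLS first."""
    with smtplib.SMTP(host, port, timeout=10) as server:
        server.starttls()
        server.login(username, password)
        server.send_message(msg)
```

In a ROS2 node, `send_alarm_email` would be called from the callback that detects the alarm state, ideally with the credentials read from the environment rather than hard-coded.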

Chassis work

As mentioned in an earlier week, we are experiencing some friction between the frame and the motor drivers, or possibly in the pin between the wheels and the motor. I tried to improve it by scraping the holes a bit bigger. This improved the rotation somewhat, but not as much as I had hoped.

All in all this week has been nice. The iteration I have done over the GUI is good. We are closing in.

Plan for next week

- Mount all the gear to the car

- Merge everything

- Survive integration hell

- Have a meeting to really define and tune everything we have, or want to add/extend

- Continue on getting the car autonomous

Sander

This week I continued with the SLAM implementation and worked on using IMU data from the Sense Hat together with the lidar to transform the lidar point cloud into the “Map” frame. First I had to find a way to connect the Sense Hat to the Pi at the same time as the lidar. The Sense Hat does not use the same pins as the lidar, but it covers all the GPIO pins on the Pi. We might therefore need to order an expansion board for the Pi, or we can connect it manually.

Challenges

Getting SLAM to run on custom hardware proved more complex than expected. There is plenty of documentation and video material on both ROS2 and slam_toolbox, but it either uses a complete robot such as the TurtleBot, or at least rovers that provide wheel odometry. The lack of wheel encoders means we rely on the IMU and scan matching. Because I haven't got SLAM up and running, I haven't had the opportunity to test whether the Pi 4B is able to run all the services we plan.

The good thing is that ROS2's use of DDS makes it easy to run nodes on other devices, which we do over SSH. However, SSH is an encrypted connection, and the Wi-Fi module on the Pi has limited bandwidth along with limited processing power. This adds latency, so running nodes that are critical for the rover's autonomy externally is not preferred. I have tested the lidar visualization over SSH in RViz, and it does not have a lot of delay, but it is a bit inconsistent. We need to get SLAM up and running this week.

We should also find a better, more robust way to mount the lidar and the Sense Hat. This could be some 3D-printed mounts, but we will see what we have time and capacity for. The lidar should be placed in the center of the rover, so that we don't need any static transforms for the lidar position.

SLAM

ros2 launch slam_toolbox online_async_launch.py

Running this command launches slam_toolbox in online asynchronous mode. Online means that the toolbox works with a live data stream, in this case from the lidar on the rover. Asynchronous means that the toolbox processes the most recent scan rather than every scan, which is important because the Pi cannot process data at the same rate as the lidar sends it.

slam_toolbox uses a grid-based map, which divides the area into cells that are either occupied or not occupied. Together with the IMU for the dynamic transform, this creates a 2D map of cells based on whether they are occupied. The challenge has been to get slam_toolbox to use the orientation data from the Sense Hat.
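To make the grid idea concrete, here is a small sketch of the geometry only: rotating lidar beams by the IMU yaw to get points in the map frame, then marking the grid cells they fall in. This is not how slam_toolbox works internally (it also does scan matching and pose-graph optimisation). The function names are made up for illustration, and the rover position is assumed to be known, which is exactly the part that is hard without wheel odometry.

```python
import math
from typing import Iterable, List, Sequence, Set, Tuple

Point = Tuple[float, float]
Cell = Tuple[int, int]


def scan_to_map_points(ranges: Sequence[float], angle_min: float,
                       angle_increment: float, x: float, y: float,
                       yaw: float) -> List[Point]:
    """Convert lidar ranges to (x, y) points in the map frame.

    x, y is the assumed rover position and yaw the heading from the IMU, in radians.
    """
    points: List[Point] = []
    for i, r in enumerate(ranges):
        if not math.isfinite(r) or r <= 0.0:
            continue  # skip beams with no return
        beam = yaw + angle_min + i * angle_increment
        points.append((x + r * math.cos(beam), y + r * math.sin(beam)))
    return points


def occupied_cells(points: Iterable[Point], resolution: float) -> Set[Cell]:
    """Return the set of grid cells (column, row) that contain at least one hit."""
    return {
        (math.floor(px / resolution), math.floor(py / resolution))
        for px, py in points
    }
```

For example, a single 1 m return straight ahead with the rover at the origin and zero yaw lands in cell (2, 0) on a grid with 0.5 m cells.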

Next week:

- Continue on SLAM and start on NAV2

- Testing hardware limits on the Pi

- Integration and mounting of the rover

Sondre

Getting the Touchscreen and an Ambush

I started the week by stopping by Steven to pick up the touchscreen (and the GPS for Oliver), which will serve as the main interface for the ground station.

The screen was also a Raspberry-type display, so it was easy to connect to my own Raspberry Pi.

I finally got to test the code on the touchscreen, and it worked better than expected.

The plan for this week was to improve the dashboard and talk to Richard about the ground-station sketch so I could get it printed, but another assignment in a different course took up most of my time.

The plan for next week is to continue refining the dashboard and implement additional functionality.

Oliver

This week, I tried to fix the calibration errors. When I tested it, I had inaccuracies of 20–60 cm at distances of 1–2 meters. I then tried running a full startup calibration, which helped a little, but the errors returned when the device was moved.

I created an offset table to correct the errors.
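One way such an offset table could be applied is a lookup with linear interpolation between calibration points. The table values below are placeholders chosen only to match the 20–60 cm range mentioned above, not measured data, and the sign of the correction (the sensor reading too long) is also an assumption.

```python
from bisect import bisect_right
from typing import List, Sequence, Tuple

# (measured distance in m, offset in m). Placeholder values, not real calibration data.
OFFSET_TABLE: List[Tuple[float, float]] = [(1.0, 0.20), (1.5, 0.40), (2.0, 0.60)]


def offset_for(measured: float,
               table: Sequence[Tuple[float, float]] = OFFSET_TABLE) -> float:
    """Interpolate the offset for a measured distance, clamping outside the table."""
    xs = [m for m, _ in table]
    if measured <= xs[0]:
        return table[0][1]
    if measured >= xs[-1]:
        return table[-1][1]
    i = bisect_right(xs, measured)  # index of the first table entry above `measured`
    (x0, y0), (x1, y1) = table[i - 1], table[i]
    t = (measured - x0) / (x1 - x0)
    return y0 + t * (y1 - y0)


def corrected(measured: float) -> float:
    """Apply the offset, assuming the sensor over-reads (an assumption)."""
    return measured - offset_for(measured)
```

Since the errors came back when the device was moved, a table like this would probably need to be re-measured for each mounting position.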