August:

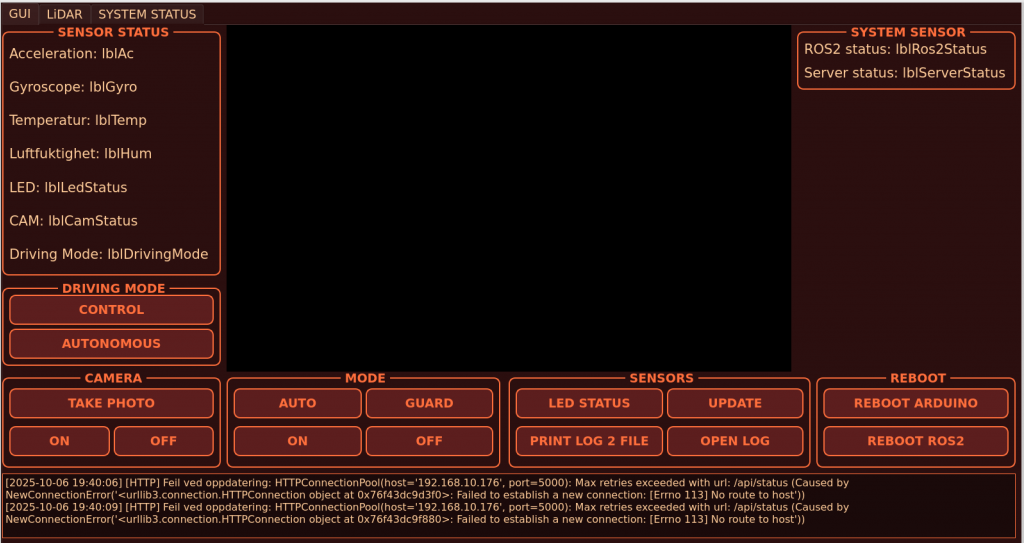

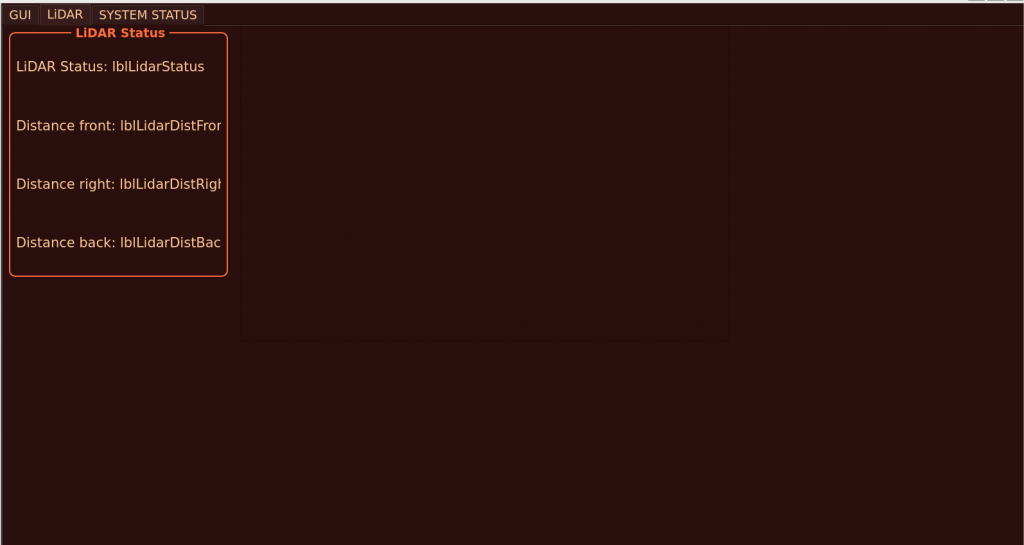

I have iterated on the GUI. It now has a placeholder for the LiDAR view: a graphical widget that I will later give a black default color, so it is more intuitive what it is for. There is also a new SYSTEM STATUS section, where the plan is to show every ROS2 node. Each node will get a label and a matching button with the node's name, both red by default. When a node is connected and in contact, its label and button turn green; if not, both stay red. Pressing a red button will reboot that node and hopefully restore contact. There is also a placeholder for REBOOTING ROS2 in case we experience a hang.
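A minimal sketch of the color logic behind the SYSTEM STATUS labels and buttons, written in plain Python without any Qt or ROS2 code. It assumes, hypothetically, that "in contact" means we have heard from the node within the last 2 seconds; the class name, the timeout and the node name are all made up for illustration.

```python
import time
from typing import Dict, Optional

# Assumption: a node is "in contact" if we have heard from it (a heartbeat
# or any message) within this many seconds. The real rule is not decided yet.
CONTACT_TIMEOUT_S = 2.0


class NodeStatusBoard:
    """Hypothetical model behind the SYSTEM STATUS labels and buttons."""

    def __init__(self, timeout_s: float = CONTACT_TIMEOUT_S) -> None:
        self.timeout_s = timeout_s
        # node name -> time of last contact (None = never heard from)
        self.last_seen: Dict[str, Optional[float]] = {}

    def register(self, name: str) -> None:
        # New nodes start without contact, so they are shown in red.
        self.last_seen.setdefault(name, None)

    def heartbeat(self, name: str, now: Optional[float] = None) -> None:
        # Record contact from a node (monotonic clock unless a time is given).
        self.last_seen[name] = time.monotonic() if now is None else now

    def color(self, name: str, now: Optional[float] = None) -> str:
        seen = self.last_seen.get(name)
        if seen is None:
            return "red"
        now = time.monotonic() if now is None else now
        return "green" if now - seen <= self.timeout_s else "red"

    def on_button_pressed(self, name: str, now: Optional[float] = None) -> bool:
        # Only a red button asks for a reboot; pressing a green one does nothing.
        return self.color(name, now) == "red"


board = NodeStatusBoard()
board.register("lidar_node")             # hypothetical node name
board.heartbeat("lidar_node", now=10.0)
print(board.color("lidar_node", now=11.0))  # green
print(board.color("lidar_node", now=13.5))  # red
```

Keeping the rule in a small class separate from the widgets should make it testable without starting the GUI; the GUI would only need to call color() on a timer and repaint.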

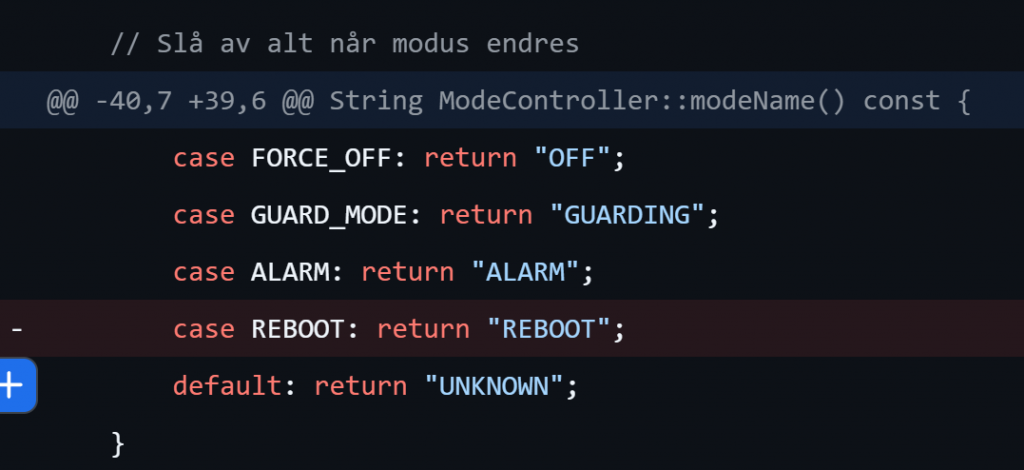

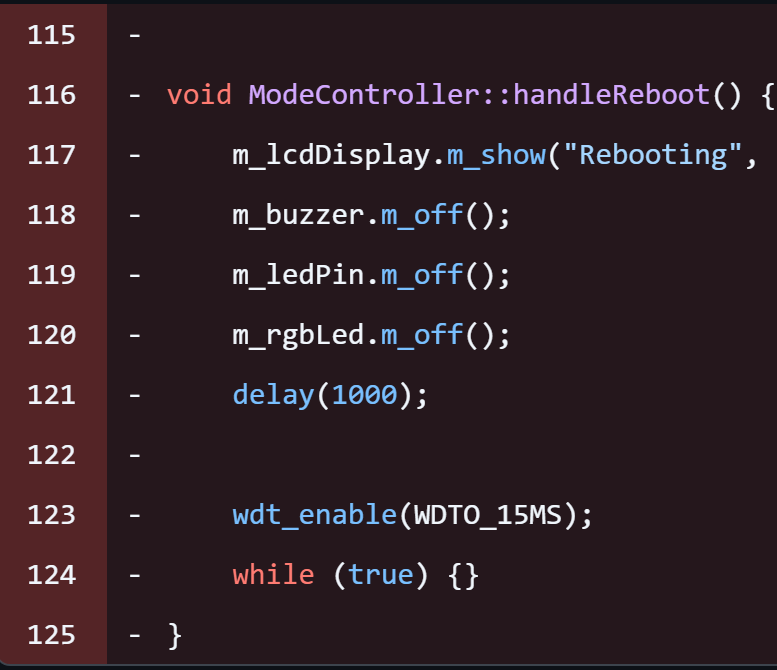

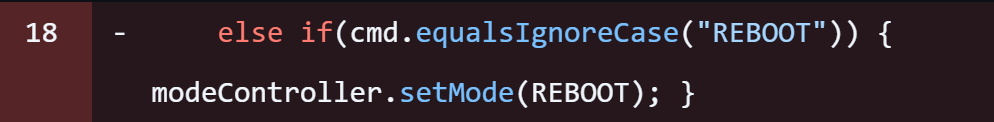

During this week I ran into some challenges. I wanted to implement a function on the Arduino that reboots it if it hangs or freezes. I included avr/wdt.h to get access to the watchdog, added a REBOOT enum value, and implemented a void handleReboot() function. I extended the Flask server with a REBOOT API call and added a RESTART ARDUINO button in the GUI. This actually worked: the Arduino entered the correct state, and everything in handleReboot() happened as planned. The problem was that it only worked the first time. After that, the Arduino stayed in that state and never left it. I tried calling wdt_reset() in void setup(), where the Arduino's default mode is AUTO, but it didn't work. I then removed the reboot code from the Arduino. I will try again later; for now, the function only has a placeholder in the GUI, and the same goes for the Flask server.

I spent a lot of time implementing the graphical widget/view for the LiDAR, because I promoted the wrong widget and it took a while to figure that out. I also spent some time on the watchdog, but it will be implemented at a later time.

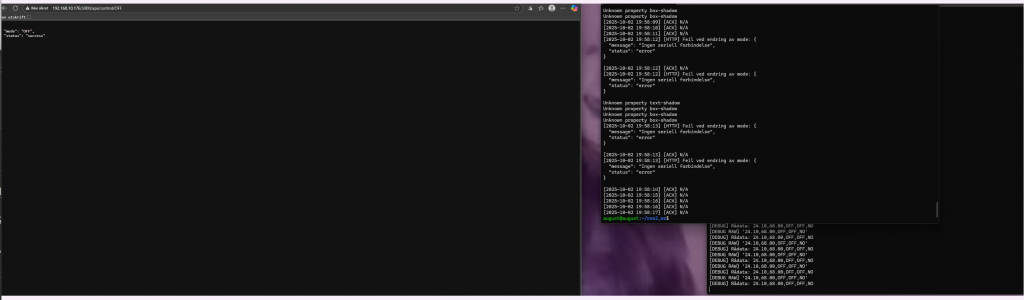

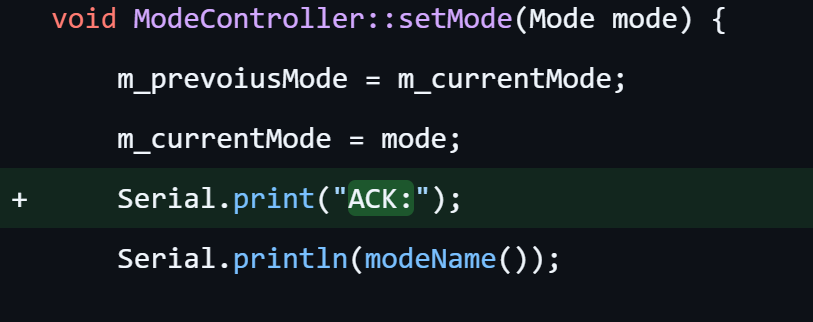

I implemented an acknowledgment protocol. When the Arduino changes state, it sends ACK: <modename> through the serial connection to the Flask server. The Flask server prints ACK: received, ACK: <modename>. The GUI then receives the ACK and logs ACK: <modename>. This way we know that the ACK has passed through the whole system, and if it stops somewhere along the way, it is easy to debug and see where the system fails. That is exactly what I am currently experiencing. While debugging, I have confirmed that the ACK is printed in the serial monitor every time the Arduino changes mode from the terminal. Changing mode from a client such as Edge also works, but the GUI isn't receiving the ACK, and when it does, it only happens a couple of times before it stops. I will continue debugging.
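The ACK path above can be sketched in plain Python as a parser for the Arduino's ACK: <modename> line plus a small trace that records which hop (Arduino, Flask, GUI) has seen each ACK. The function and class names are hypothetical and this is not the actual Flask or GUI code; the idea is that the first hop missing from the trace is where the ACK got lost.

```python
import re
from typing import Dict, Optional, Set

# Matches the Arduino's serial line, e.g. "ACK: AUTO".
ACK_PATTERN = re.compile(r"ACK:\s*(?P<mode>.+)")

# The hops an ACK passes through, in order.
HOPS = ("arduino", "flask", "gui")


def parse_ack(line: str) -> Optional[str]:
    """Return the mode name from an 'ACK: <modename>' line, else None."""
    match = ACK_PATTERN.fullmatch(line.strip())
    return match.group("mode") if match else None


class AckTrace:
    """Records which hops have seen the ACK for each mode change."""

    def __init__(self) -> None:
        self.seen: Dict[str, Set[str]] = {}

    def mark(self, hop: str, mode: str) -> None:
        # Each hop extracts the mode name in its own way and reports it here.
        if hop not in HOPS:
            raise ValueError(f"unknown hop: {hop}")
        self.seen.setdefault(mode, set()).add(hop)

    def first_missing_hop(self, mode: str) -> Optional[str]:
        # The first hop (in order) that never saw this ACK is where it got lost.
        hops = self.seen.get(mode, set())
        for hop in HOPS:
            if hop not in hops:
                return hop
        return None
```

Because the trace is keyed by mode name, two ACKs for the same mode cannot be told apart; adding a sequence number to each ACK would be one way to fix that.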

I had both the presentation of our game design document in Grunnleggende spillutvikling (Fundamentals of Game Development) and a re-sit exam in statistics. For statistics, I spent 26.5 hours studying last week, as shown by my screen time in Notability.

Sander and I spend a lot of time brainstorming every week, and it has helped a lot. See Sander's blog post for requirements and drawings. We also made the PowerPoint for the mid-term presentation in Utvikling av smarte systemer. It was a pretty work-heavy week, but the exam, review and presentation went well. Motivating, to say the least: the more we put in, the more we get out.

This week I will add a Sense HAT to the system, make it a ROS2 node, and display its data in the GUI. I have already implemented the placeholders. I will also look at the ultrasonic sensor. I will connect it to the micro:bit, and it will control the motors both when the car is driving autonomously and when it is controlled with a joystick.
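A sketch of the two calculations the ultrasonic sensor will need, assuming an HC-SR04-style sensor that reports an echo pulse width: converting the echo time to a distance (d = t × v / 2, since the sound travels to the obstacle and back) and blocking forward motion when something is too close. The 20 cm stop distance and the function names are hypothetical, and this is plain Python rather than micro:bit code.

```python
from typing import Optional

# Approximate speed of sound in dry air at about 20 °C.
SPEED_OF_SOUND_M_S = 343.0

# Hypothetical safety distance; the real value is still to be chosen.
STOP_DISTANCE_CM = 20.0


def echo_to_distance_cm(echo_us: float) -> float:
    """Convert an echo pulse width (microseconds) to distance in cm.

    The pulse covers the trip to the obstacle and back, so it is halved.
    """
    return echo_us * 1e-6 * SPEED_OF_SOUND_M_S / 2 * 100


def gate_speed(requested: float, distance_cm: Optional[float]) -> float:
    """Limit a motor command in -1.0..1.0 (positive = forward).

    The same gate applies whether the command came from the joystick or
    from the autonomous controller. None means no echo (nothing in range).
    """
    if requested > 0 and distance_cm is not None and distance_cm < STOP_DISTANCE_CM:
        return 0.0
    return requested
```

For example, a 1000 µs echo gives about 17.15 cm, which is inside the hypothetical stop distance, so a forward command would be set to 0 while reversing is still allowed.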

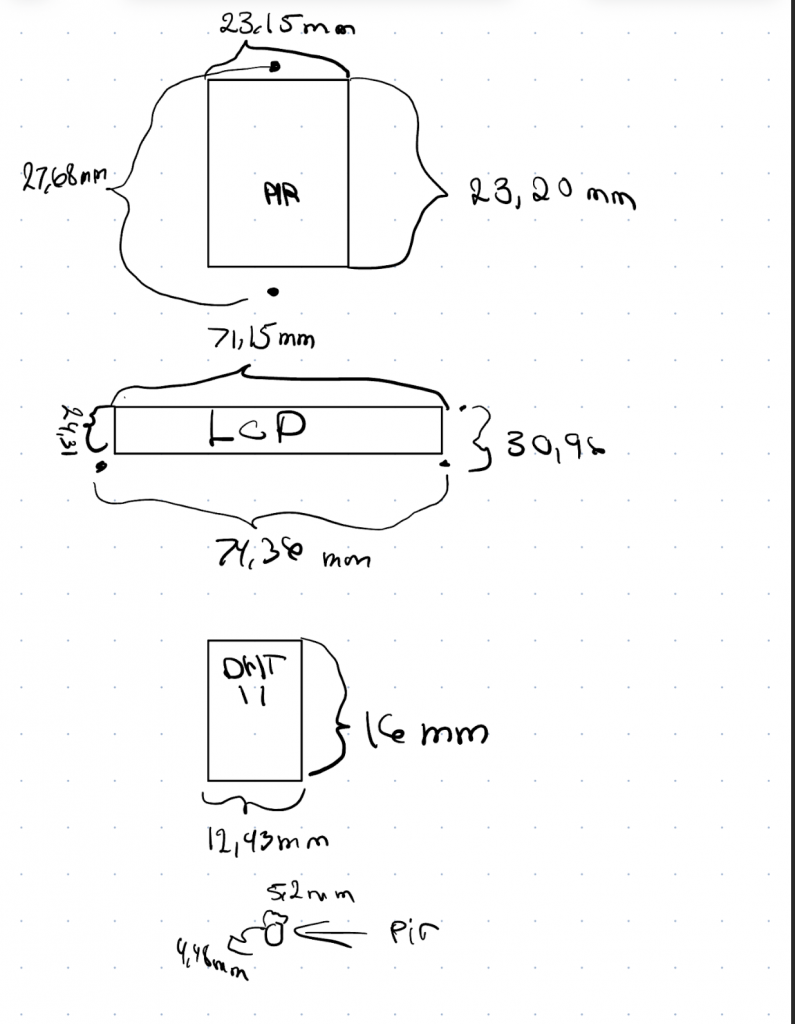

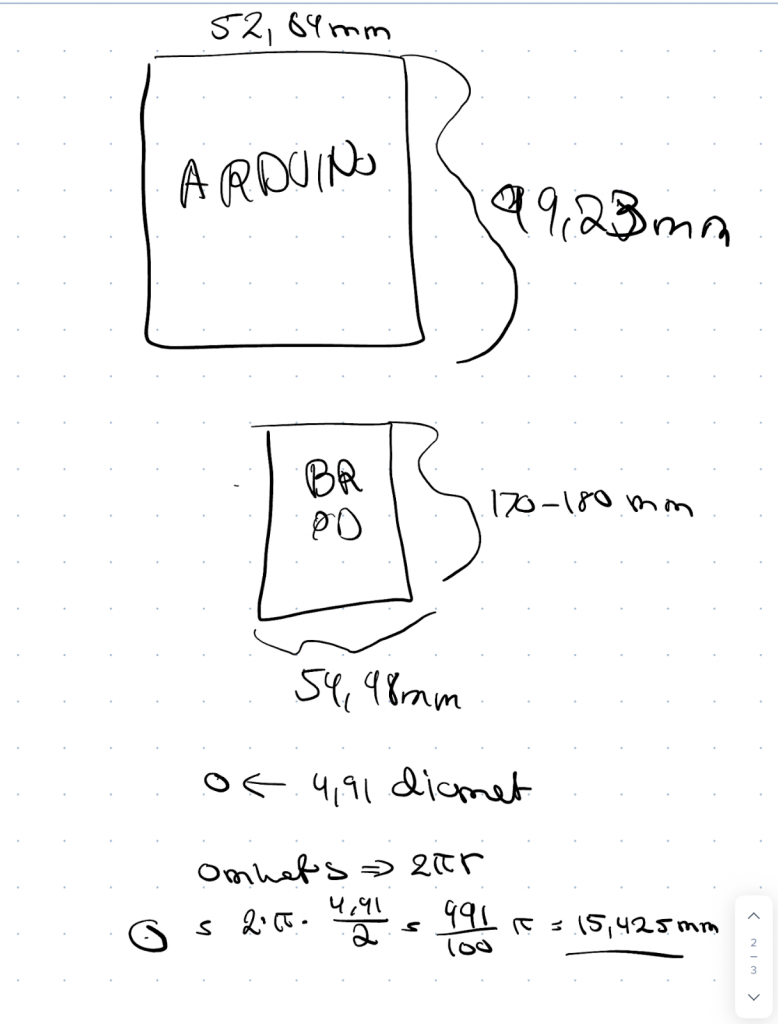

We are also planning the car design (see Sander's blog post for drawings), and we have measured the sensors in mm.

Sander

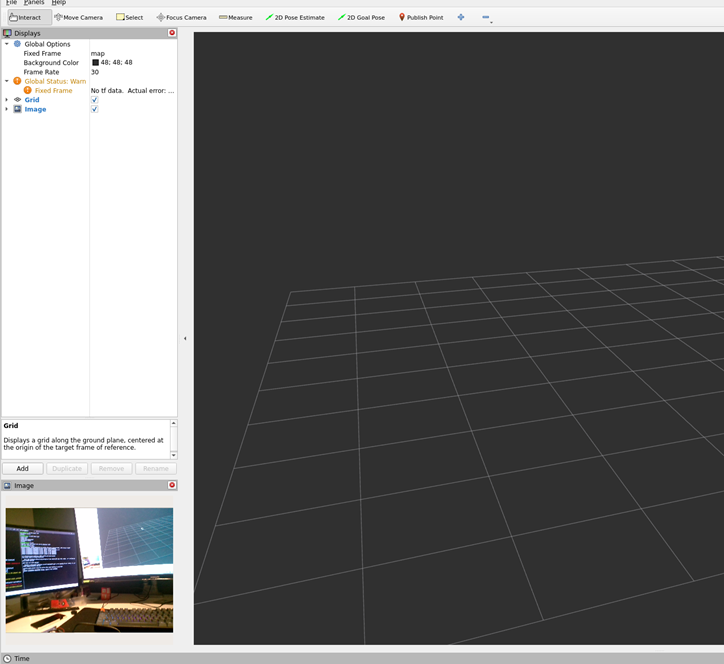

This week we finally got the lidar we ordered. I connected an LD06 lidar sensor to a Raspberry Pi running Ubuntu 22.04 and ROS2 Humble. The goal was to get real-time laser scan data published to ROS topics and later visualize it in RViz2.

Because of an upcoming statistics exam and the game design document review, I did not have as much time this week as I would have liked.

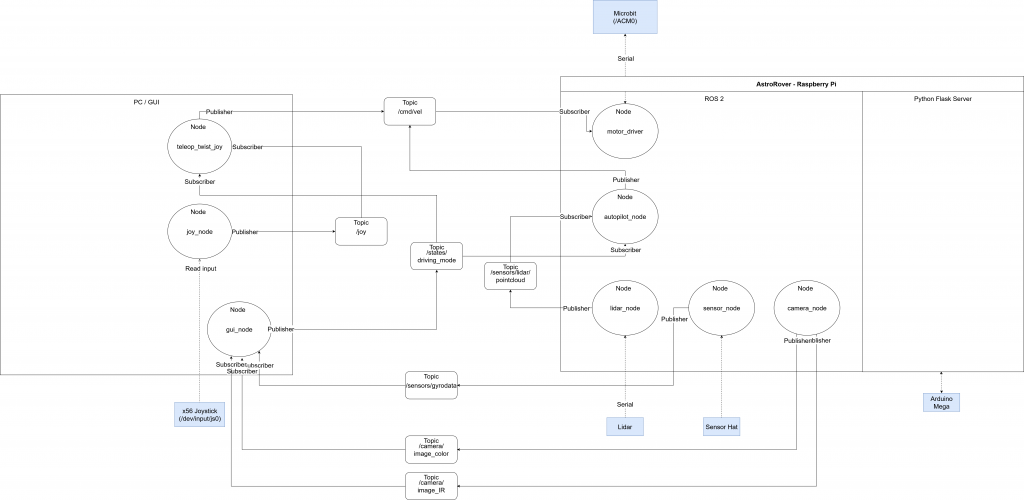

I also spent some time creating a ROS2 diagram in draw.io, where August and I visualized the entire system. The aim was to better understand the system layout and make sure the team is on the same page. The diagram makes it much easier to understand all the different topics and nodes and how they communicate, which can become complex; looking at a diagram is much easier than working out what is connected with commands like ros2 topic list. The plan for this project is to use not only ROS2 but also other ways of sending commands to the system. ROS2 is still important for sensor fusion, since the system is synchronized with the ROS2 clock and data is timestamped. This is critical for the autonomous steering and navigation of the car.
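To illustrate why the timestamps matter for sensor fusion, here is a plain-Python sketch that pairs each lidar scan with the closest IMU sample and drops pairs that are too far apart in time. The 50 ms tolerance is an assumption, and stamps are taken as floats in seconds (a ROS2 stamp would be sec + nanosec × 1e-9). In a real ROS2 node, the message_filters package's ApproximateTimeSynchronizer does this kind of matching.

```python
from bisect import bisect_left
from typing import List, Optional, Sequence, Tuple


def nearest_index(stamps: Sequence[float], t: float) -> Optional[int]:
    """Index of the stamp closest to t in an ascending list (None if empty)."""
    if not stamps:
        return None
    i = bisect_left(stamps, t)
    if i == 0:
        return 0
    if i == len(stamps):
        return len(stamps) - 1
    # t lies between stamps[i - 1] and stamps[i]; pick the closer one.
    return i if stamps[i] - t < t - stamps[i - 1] else i - 1


def pair_by_time(
    scan_stamps: Sequence[float],
    imu_stamps: Sequence[float],
    max_dt: float = 0.05,
) -> List[Tuple[float, float]]:
    """Pair each scan with the closest IMU sample at most max_dt seconds away."""
    pairs = []
    for t in scan_stamps:
        j = nearest_index(imu_stamps, t)
        if j is not None and abs(imu_stamps[j] - t) <= max_dt:
            pairs.append((t, imu_stamps[j]))
    return pairs
```

Scans with no IMU sample close enough are simply skipped here; fusing them with stale IMU data would give the wrong orientation for that scan.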

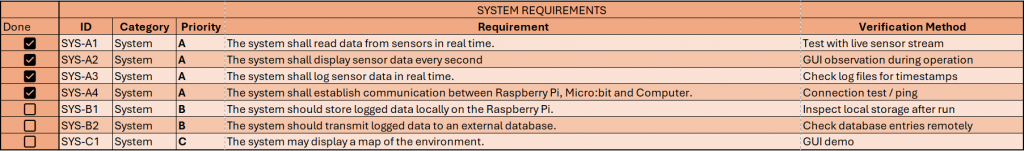

A lot of August's and my time is spent discussing and brainstorming the system to make it even better and to make sure we don't miss anything important. We also iterated on and updated the requirements.

The lidar was connected via UART (GPIO14/15) instead of USB, which required configuring the correct serial interface (/dev/ttyS0). Initially the ROS2 node failed to start because it was trying to open /dev/ttyUSB0, and later because of permission and port access issues. I had to disable the default serial console (getty), adjust device permissions, and make sure the Pi user was part of the dialout group. I also got a new computer this week and had some difficulties getting Ubuntu to work on it: Ubuntu refuses to use the dGPU and uses the CPU's iGPU instead. This still hasn't been fixed; I can use the computer, but it is not optimal.
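The permission problems above can be checked from a script before starting the lidar node. This is a minimal sketch assuming a Linux system with Python 3; the function name is hypothetical. It checks that /dev/ttyS0 exists, that the current process can read and write it, and whether the user is in the dialout group.

```python
import grp
import os
import pwd
from typing import List


def serial_port_problems(path: str = "/dev/ttyS0", group: str = "dialout") -> List[str]:
    """Return human-readable reasons why this process may not open the port."""
    if not os.path.exists(path):
        return [f"{path} does not exist (is the UART enabled?)"]

    problems = []
    if not os.access(path, os.R_OK | os.W_OK):
        problems.append(f"no read/write permission on {path}")

    try:
        entry = grp.getgrnam(group)
    except KeyError:
        problems.append(f"group {group!r} does not exist")
        return problems

    user = pwd.getpwuid(os.getuid()).pw_name
    # "active" = the running process actually has the group right now.
    active = entry.gr_gid == os.getgid() or entry.gr_gid in os.getgroups()
    listed = user in entry.gr_mem
    if listed and not active:
        # Group changes only apply to new login sessions.
        problems.append(f"{user} was added to {group}; log out and back in")
    elif not listed and not active:
        problems.append(f"{user} is not in the {group} group")
    return problems


for problem in serial_port_problems():
    print("problem:", problem)
```

Group membership is only picked up by new login sessions, which is why the sketch distinguishes between being listed in the group and having it active in the current process.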

After resolving these issues, the Lidar node successfully connected and started publishing data to the /scan topic. I also created a custom launch file for easier startup with all parameters preconfigured.
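For reference, this is roughly what the node has to decode from /dev/ttyS0 before it can publish /scan. It is a sketch based on our reading of the LD06 datasheet (47-byte packets starting with 0x54 0x2C, 12 points each, angles in 0.01°, distances in mm); the field layout should be checked against LDRobot's protocol document, and the CRC byte is deliberately not verified here. The ROS2 lidar node already does this decoding, so this is only for understanding the data.

```python
import struct
from typing import List, NamedTuple

PACKET_SIZE = 47        # bytes in one LD06 measurement packet
HEADER = 0x54
VER_LEN = 0x2C          # packet type/length byte: 12 points per packet
POINTS = 12


class Ld06Packet(NamedTuple):
    speed_dps: int            # rotation speed in degrees per second
    angles_deg: List[float]   # interpolated angle of each point
    distances_mm: List[int]
    intensities: List[int]
    timestamp_ms: int


def parse_ld06_packet(data: bytes) -> Ld06Packet:
    """Decode one 47-byte packet (the trailing CRC byte is NOT checked)."""
    if len(data) != PACKET_SIZE or data[0] != HEADER or data[1] != VER_LEN:
        raise ValueError("not an LD06 measurement packet")
    speed, start = struct.unpack_from("<HH", data, 2)
    # 12 points of 3 bytes each: distance (uint16, mm) + intensity (uint8).
    points = [struct.unpack_from("<HB", data, 6 + 3 * i) for i in range(POINTS)]
    end, stamp = struct.unpack_from("<HH", data, 42)
    # Angles are in 0.01 degree units; the end angle can wrap past 360 degrees.
    span = (end - start) % 36000
    step = span / (POINTS - 1)
    angles = [((start + step * i) % 36000) / 100 for i in range(POINTS)]
    return Ld06Packet(
        speed_dps=speed,
        angles_deg=angles,
        distances_mm=[distance for distance, _ in points],
        intensities=[intensity for _, intensity in points],
        timestamp_ms=stamp,
    )
```

Each packet only covers a small slice of the full rotation, so the node has to collect many packets into one full turn before it can fill a single LaserScan message.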

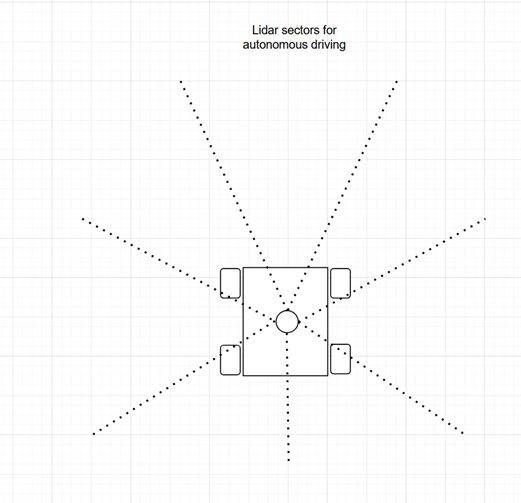

Visualization in RViz2 was tested over SSH remote access; the plan is to later display this information in the GUI. Out of curiosity I also did some research on SLAM (Simultaneous Localization and Mapping). Using the IMU on the Pi Sense HAT, we can use ROS2 SLAM tools to improve the localization of the rover. The plan is to use the lidar together with the IMU to map the rover's position relative to, for example, the walls in a room or other reference points.

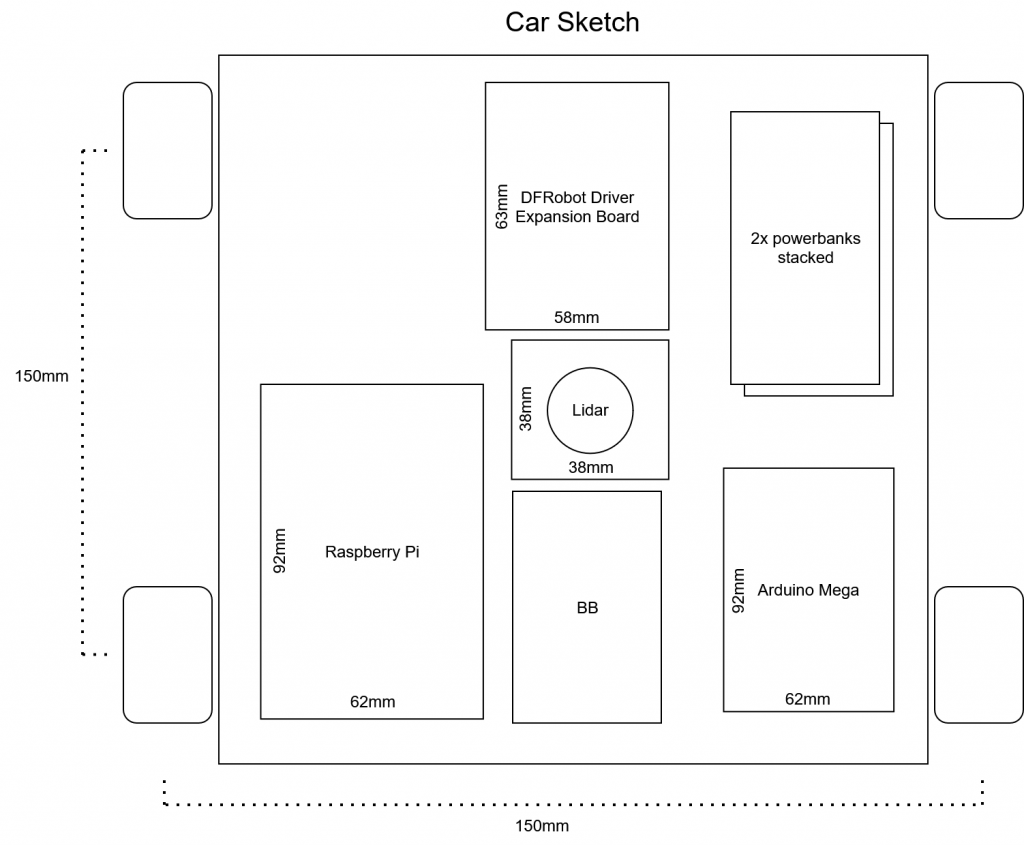

This is the first iteration of the car design; we will iterate more, as I think we need even more space.

ROS2 system overview

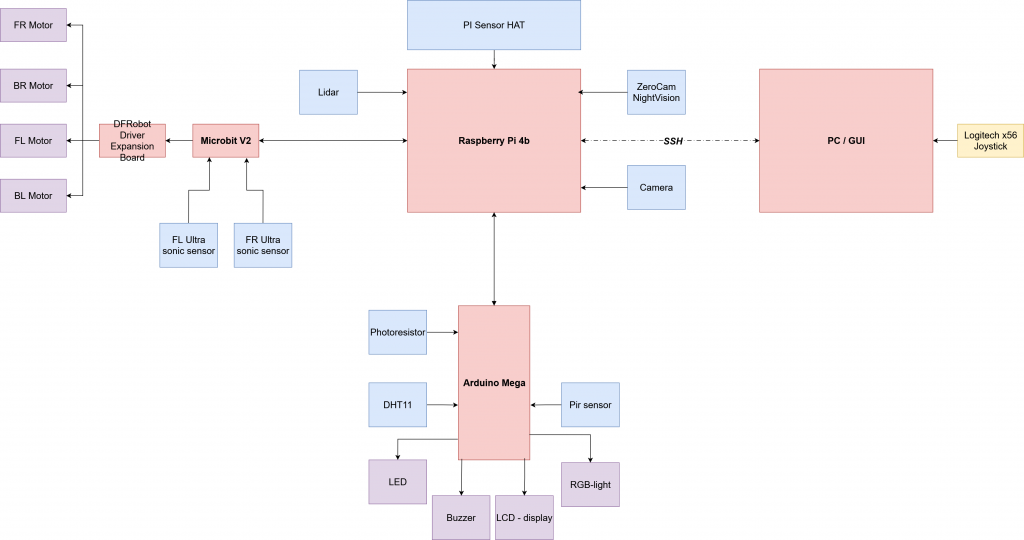

System main components overview

Sketch of the lidar sections, to be iterated on.

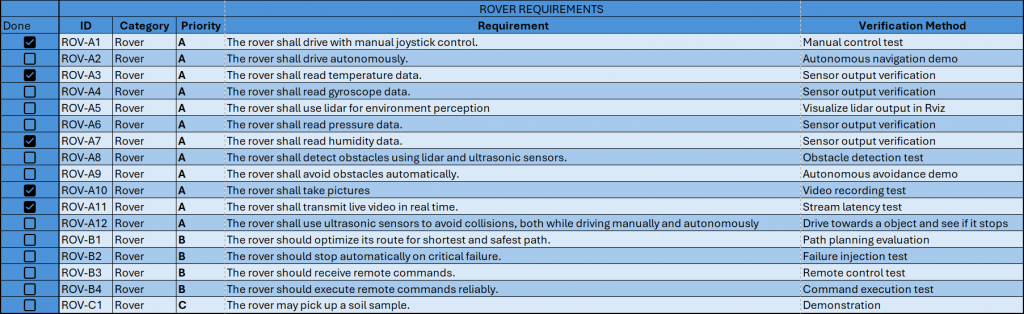

Requirements progress

Next week:

- Iterating through the requirements once more

- Updating sprint backlog

- More visualizations

- Integration

- Team meeting to see if we need to re-scope, because a team member is quitting.

- Plan upcoming sprint

Sondre

This week:

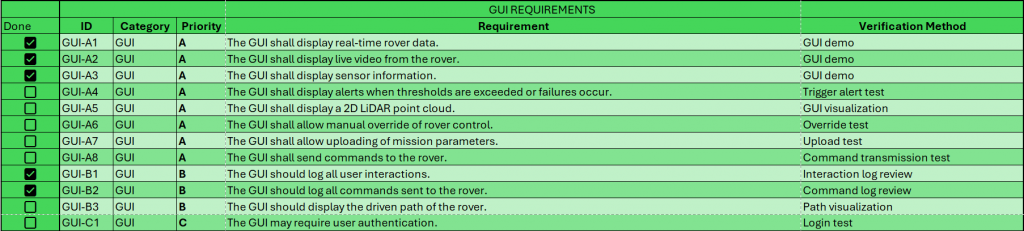

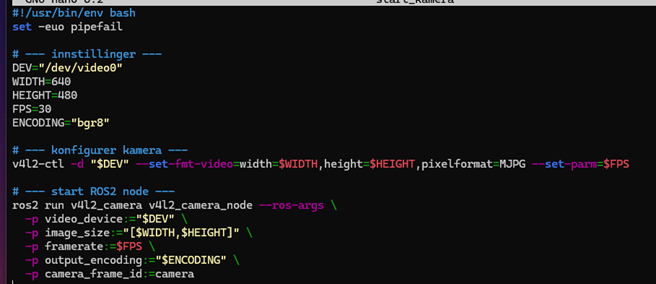

I created a script that sets the camera format and starts the camera node, so I can launch the video publisher with a single command and a chosen configuration. This also made it easier to test different camera settings, since the FPS and resolution can be adjusted as needed. After testing several combinations, I found that 30 FPS at 640×480 gave the most stable performance.
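A quick back-of-the-envelope check on why resolution and FPS matter: the bandwidth needed for uncompressed frames. This assumes the images are sent raw, and the bytes per pixel are assumptions for common formats; we have not confirmed which format or image transport the camera node actually uses.

```python
# Assumed bytes per pixel for some common uncompressed formats.
BYTES_PER_PIXEL = {"mono8": 1, "yuyv": 2, "rgb8": 3}


def raw_bandwidth_mbit_s(width: int, height: int, fps: int, pixel_format: str = "yuyv") -> float:
    """Megabits per second needed to send uncompressed frames."""
    bytes_per_second = width * height * BYTES_PER_PIXEL[pixel_format] * fps
    return bytes_per_second * 8 / 1_000_000


for w, h in [(640, 480), (1280, 720)]:
    print(f"{w}x{h} @ 30 FPS: {raw_bandwidth_mbit_s(w, h, 30):.1f} Mbit/s")
```

With YUYV this gives about 147.5 Mbit/s for 640×480 and about 442.4 Mbit/s for 1280×720 at 30 FPS, so higher resolutions can quickly become a lot for a Wi-Fi link unless the images are compressed.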

The camera node is started with the command `./start_camera`.

When I used my home network for both the Raspberry Pi and my computer, RViz2 was unable to display the video feed. However, when I connected them through the Pi’s hotspot, it worked immediately with the same setup. I’ll keep this in mind for future configurations.

Now I can view the camera feed from the Raspberry Pi directly in RViz2 on my computer. Finally I can move on to some fun stuff, like camera processing.

Oliver

This week I worked with the LiDAR. The one we received did not have a belt for the motor, so it had to be replaced. I tried several different materials, including thread, which worked for a short time, but after about 30–60 seconds the knot got caught in the motor wheel and stopped it. I ran some tests while searching for a better replacement. I managed to read some data, but with large errors, which I believe is because the belt is not rotating properly.

Plan for next week:

- Group meeting

- Start looking at camera processing

- Learn some new stuff:)