Bram van den Nieuwendijk (Mechanical Engineer)

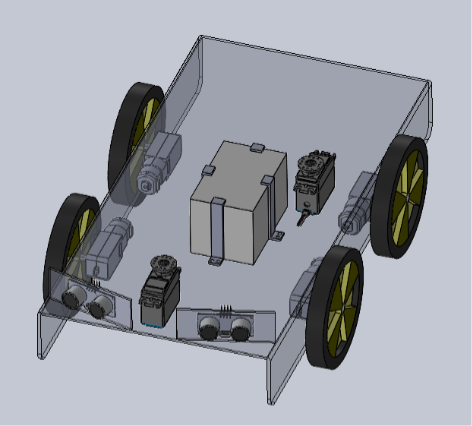

In week 5 I continued working on the 3D model and on deciding which type of connection to use for each component. I also worked on concepts for the pumping mechanism, which turned out to be more difficult than expected. Jonas, the other mechanical engineer, has let us know that he will drop this class, so I have also started taking over his work: realizing the x-axis rotation of the camera. Week 6 will focus on the 3D realization of the pumping mechanism and of the camera's x-axis rotation mechanism.

Åsmund Wigen Thygesen

This week we discussed how to do positional tracking indoors, since we couldn’t use the GPS system we had originally planned. We decided that for our small-scale demonstration here at school, using motors with encoders to keep track of the position is accurate enough. However, for an actual product intended for outdoor use, we would use GPS.
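Encoder-based tracking of this kind is usually called dead reckoning (odometry): each wheel's tick count is converted to a travelled distance, and the difference between the two wheels gives the change in heading. Below is a minimal sketch, assuming a differential-drive layout with one encoder per driven wheel. `TICKS_PER_REV`, `WHEEL_RADIUS_M` and `WHEEL_BASE_M` are placeholder values, not our robot's measured dimensions, and `update_pose` is a hypothetical helper.

```python
import math
from dataclasses import dataclass

# Placeholder robot parameters (assumptions, not measured values).
TICKS_PER_REV = 360     # encoder ticks per wheel revolution
WHEEL_RADIUS_M = 0.03   # wheel radius in metres
WHEEL_BASE_M = 0.20     # distance between left and right wheels in metres


@dataclass
class Pose:
    x: float = 0.0      # metres
    y: float = 0.0      # metres
    theta: float = 0.0  # heading in radians, 0 = along the x axis


def ticks_to_distance(ticks: int) -> float:
    """Convert encoder ticks into the distance the wheel rolled (metres)."""
    return 2 * math.pi * WHEEL_RADIUS_M * ticks / TICKS_PER_REV


def update_pose(pose: Pose, left_ticks: int, right_ticks: int) -> Pose:
    """Dead-reckoning update from the tick counts since the previous update."""
    d_left = ticks_to_distance(left_ticks)
    d_right = ticks_to_distance(right_ticks)
    d_center = (d_left + d_right) / 2            # distance moved by the robot centre
    d_theta = (d_right - d_left) / WHEEL_BASE_M  # change in heading
    # Integrate along the mid-step heading to reduce error on curved segments.
    mid_theta = pose.theta + d_theta / 2
    return Pose(
        x=pose.x + d_center * math.cos(mid_theta),
        y=pose.y + d_center * math.sin(mid_theta),
        theta=(pose.theta + d_theta) % (2 * math.pi),
    )
```

Because small errors accumulate with every update, the heading term `d_theta` is the weakest part of pure encoder odometry; the requirements list further down pairs the encoders with an IMU compass heading, which could replace it.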

My task for the rest of the week was to continue working on the motion control system, but because a lot was going on last week and I am generally not great at time management, I have not gotten much done. I will make up for this as best I can next week.

Rick Embregts

This week, the schematic of the entire electrical system was supposed to be completed. Unfortunately, because some components had to be swapped for others, the schematic isn’t finished yet. Apart from that, we have already received the first few components, so we can check that we can use them and prepare for designing our first board. Next week the first schematic will be done, and there may be some progress on the design of the motor controller and the BMS (battery management system).

Darkio Luft (Data Engineer)

I’ve started training with a free Kaggle dataset of around 240 weed images (https://www.kaggle.com/datasets/jaidalmotra/weed-detection). Since the original images were high-definition, my first step was downscaling them all to 512×512 px.
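The log doesn't say whether the images were stretched or padded to 512×512. If the originals are not square, a plain resize distorts the plants' proportions; one common alternative is letterboxing, where the longer side is scaled to 512 px and the shorter side is padded. A minimal standard-library sketch of that arithmetic, using a hypothetical helper `letterbox_plan` (the pixel resampling itself would be done by an image library):

```python
def letterbox_plan(width: int, height: int, target: int = 512):
    """Scale the longer side to `target` and centre the image with padding.

    Returns (new_width, new_height, pad_left, pad_top) in pixels.
    """
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    scale = target / max(width, height)  # same factor for both axes keeps the aspect ratio
    new_w = round(width * scale)
    new_h = round(height * scale)
    pad_left = (target - new_w) // 2     # horizontal border on each side
    pad_top = (target - new_h) // 2      # vertical border on each side
    return new_w, new_h, pad_left, pad_top
```

For a 1920×1080 photo this gives a 512×288 image with 112 px of padding above and below. With Pillow, the image would be resized with `resize` and pasted onto a blank 512×512 canvas (`Image.new`, `paste`) at `(pad_left, pad_top)`.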

After that, I began the manual annotation process using Roboflow. I’m drawing polygons around the plants to ensure precise labeling, but this step takes quite a bit of time, so I haven’t finished yet.
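Roboflow can export polygon annotations in several formats, and this log doesn't say which one we will train on. Assuming a YOLO-style segmentation export (one line per object: a class id followed by x y vertex pairs normalised to the image size), the sketch below shows what each polygon becomes, plus a shoelace-formula area check that could flag accidental tiny or degenerate polygons. Both function names are hypothetical.

```python
def polygon_to_yolo_seg(class_id: int, points, img_w: int = 512, img_h: int = 512) -> str:
    """Format one polygon given in pixel coordinates as a YOLO segmentation line."""
    if len(points) < 3:
        raise ValueError("a polygon needs at least 3 points")
    coords = []
    for x, y in points:
        # Normalise to [0, 1] and clamp, so clicks just outside the border stay valid.
        nx = min(max(x / img_w, 0.0), 1.0)
        ny = min(max(y / img_h, 0.0), 1.0)
        coords.append(f"{nx:.6f} {ny:.6f}")
    return f"{class_id} " + " ".join(coords)


def polygon_area(points) -> float:
    """Shoelace formula: area in square pixels of a simple (non-self-intersecting) polygon."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2
```

A right triangle covering a quarter of a 512×512 image, for example, has vertices `[(0, 0), (256, 0), (256, 512)]` and an area of 65536 px².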

Sulaf: Planning for Testing, Requirements & Risks

Overview

This week was all about preparing for the testing phase. Even though we still don’t have the physical components (we are waiting for the teacher to receive the IMU and the wheel encoders, among other things), I wanted to put some meat on the bones of our product by laying out its possible risks. This shows that we have considered both the weaknesses and the strengths of our product, as any company would. I have also included a requirements list that I drew up with the product as a whole in mind, so that we know not only the product goals we have to reach, but also the individual steps and functionalities we have to demonstrate at the end of the course.

As usual, I have also written a few guiding questions to pin down what exactly we want to test and what risks we might face once we do.

Guiding Questions:

- What are the core requirements we need to test?

- What risks could affect our testing or final product?

- How can we prepare for testing while we still have no hardware?

Requirements Breakdown:

I revisited our original A/B/C requirement list and refined it based on what we’ve learned so far. Here’s a more detailed version of what we need to have achieved as a group once the robot is physically assembled:

A – Must-Have Requirements (Testable):

- The robot shall autonomously navigate a 3 m × 3 m terrain using wheel encoders and IMU compass heading.

- It shall detect obstacles within a 10-15 cm range using HC-SR04 ultrasonic sensors and pause movement.

- It shall reroute around obstacles.

- It shall identify weeds using a camera and a trained AI model with at least 90% classification accuracy.

- It shall activate a spray mechanism only when a weed is detected and within 10 cm of the nozzle.

- It shall avoid revisiting the same terrain area by logging visited coordinates or encoder-based distance.
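Several of these must-have requirements reduce to simple pass/fail checks that can be written and tested before the hardware arrives. The sketch below covers two of them, assuming positions in metres come from the encoder odometry: a coarse grid that records visited cells of the 3 m × 3 m terrain (the "logging visited coordinates" option), and a spray gate for the 10 cm rule. `VisitedGrid`, `should_spray` and the 0.25 m cell size are hypothetical choices, not decided parts of our design.

```python
import math
from dataclasses import dataclass, field

TERRAIN_M = 3.0       # 3 m x 3 m test terrain (requirement A)
CELL_M = 0.25         # hypothetical grid resolution in metres
SPRAY_RANGE_M = 0.10  # spray only within 10 cm of the nozzle (requirement A)


@dataclass
class VisitedGrid:
    """Records which grid cells of the terrain the robot has already covered."""
    cell_m: float = CELL_M
    size_m: float = TERRAIN_M
    visited: set = field(default_factory=set)

    def cell_of(self, x: float, y: float) -> tuple:
        if not (0.0 <= x < self.size_m and 0.0 <= y < self.size_m):
            raise ValueError(f"position ({x}, {y}) is outside the terrain")
        return int(x // self.cell_m), int(y // self.cell_m)

    def mark(self, x: float, y: float) -> bool:
        """Record the cell containing (x, y); return True if it was not visited before."""
        cell = self.cell_of(x, y)
        is_new = cell not in self.visited
        self.visited.add(cell)
        return is_new

    def coverage(self) -> float:
        """Fraction of all terrain cells visited so far."""
        cells_per_side = math.ceil(self.size_m / self.cell_m)
        return len(self.visited) / cells_per_side ** 2


def should_spray(weed_detected: bool, distance_to_nozzle_m: float) -> bool:
    """Spray only for a detected weed that is within range of the nozzle."""
    return weed_detected and distance_to_nozzle_m <= SPRAY_RANGE_M
```

During a test run, `mark` returning `False` flags a revisit, and `coverage()` gives a single number to report at the end of the course demonstration.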

B – Should-Have Requirements (Testable if time allows):

- It should pause for 2 seconds upon obstacle detection before rerouting.

- It should log weed detection events with timestamp and location (if we decide to implement a logging system).

- It should signal via an LED when the spray tank is empty (based on a sensor reading).
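If we do implement the logging system, a weed event needs little more than a timestamp and a position. The sketch below assumes CSV as the format and writes through the standard `csv` module to any text stream (a file on the robot, or an in-memory buffer during testing). `WeedEvent`, `WeedLog` and the column names are hypothetical.

```python
import csv
import io
import time
from dataclasses import asdict, dataclass
from typing import Optional, TextIO


@dataclass
class WeedEvent:
    timestamp: float  # seconds since the Unix epoch
    x_m: float        # position from odometry, metres
    y_m: float
    sprayed: bool


class WeedLog:
    """Appends weed-detection events as CSV rows to a text stream."""

    FIELDS = ["timestamp", "x_m", "y_m", "sprayed"]

    def __init__(self, stream: TextIO):
        self._writer = csv.DictWriter(stream, fieldnames=self.FIELDS)
        self._writer.writeheader()
        self.count = 0  # running weed count

    def record(self, x_m: float, y_m: float, sprayed: bool,
               timestamp: Optional[float] = None) -> WeedEvent:
        """Log one event; the current time is used unless a timestamp is given."""
        event = WeedEvent(time.time() if timestamp is None else timestamp,
                          x_m, y_m, sprayed)
        self._writer.writerow(asdict(event))
        self.count += 1
        return event


if __name__ == "__main__":
    buffer = io.StringIO()  # in-memory stand-in for a log file
    log = WeedLog(buffer)
    log.record(1.25, 0.50, sprayed=True)
    print(buffer.getvalue())
```

The running `count` and the `sprayed` column would also cover the weed count and spray usage that the optional dashboard is meant to show.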

C – Could-Have Requirements (Optional):

- A dashboard interface (a simple web UI) showing weed count and spray usage.

- Autonomous charging station (which we are not prioritizing due to time constraints this semester).

Risk Analysis:

I made a risk analysis for our product covering the high- and medium-impact risks, each with a possible way to mitigate it.

| Risk | Impact | Mitigation |
| --- | --- | --- |
| Delay in components | High | Simulate the code online, as I have already done, and continue developing the code and models regardless |
| Sensor misalignment or inaccuracy due to noise | Medium | Calibrate the sensors, apply filtering algorithms if needed, and run repeated tests to confirm accuracy |
| AI model misclassification | High | Expand the training dataset and improve the model training |
| Power instability | Medium | Use battery packs (a normal USB power bank) to test each subsystem independently before full integration |
| Integration bugs | High | Test each module separately before integrating them |
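The table leaves "filtering algorithms" open. For the HC-SR04 readings, one simple option is a sliding-window median: a single spurious reading (for example a missed echo that reports a huge distance) never reaches the output, while a real change shows up after a few samples. A minimal sketch with a hypothetical `MedianFilter` class and an assumed default window of 5 samples:

```python
from collections import deque
from statistics import median


class MedianFilter:
    """Sliding-window median filter for noisy distance readings."""

    def __init__(self, window: int = 5):
        if window < 1:
            raise ValueError("window must be at least 1")
        # deque with maxlen drops the oldest sample automatically.
        self._samples = deque(maxlen=window)

    def update(self, value: float) -> float:
        """Add one raw reading and return the median of the current window."""
        self._samples.append(value)
        return median(self._samples)
```

Feeding it `20.0, 21.0, 400.0, 20.5, 21.5` (cm) yields `20.0, 20.5, 21.0, 20.75, 21.0`: the 400 cm spike is rejected. The trade-off is a lag of roughly half a window before a genuine obstacle is reported, which matters for the 10-15 cm stopping range.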

Simulation for Testing:

Even without hardware, I continued testing the obstacle-detection code in digital simulations. I refined the ultrasonic sensor code and began sketching out how the rerouting logic will work once motor control is integrated.
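One way to keep the rerouting logic testable in simulation is a small state machine fed with simulated time and distance, so the same code can later be driven by the real sensor and motors. The sketch below is an assumption about how that could look, not the code described above: it converts an HC-SR04 echo pulse to centimetres (the pulse covers the round trip, so it is halved, with sound at about 343 m/s), stops at 15 cm (the upper end of the requirement), and waits the 2 seconds from the should-have list before rerouting. `ObstacleController` and its state names are hypothetical.

```python
SPEED_OF_SOUND_CM_PER_US = 0.0343  # about 343 m/s in air at roughly 20 °C
STOP_DISTANCE_CM = 15.0            # assumed threshold: upper end of the 10-15 cm range
PAUSE_S = 2.0                      # should-have: pause 2 s before rerouting


def echo_to_distance_cm(echo_us: float) -> float:
    """Convert an HC-SR04 echo pulse width (microseconds) into distance (cm)."""
    return echo_us * SPEED_OF_SOUND_CM_PER_US / 2


class ObstacleController:
    """Minimal DRIVE -> PAUSE -> REROUTE -> DRIVE state machine."""

    def __init__(self):
        self.state = "DRIVE"
        self._pause_started = 0.0

    def step(self, now_s: float, distance_cm: float) -> str:
        """Advance one control tick; returns the state the motors should follow."""
        if self.state == "DRIVE" and distance_cm <= STOP_DISTANCE_CM:
            self.state = "PAUSE"
            self._pause_started = now_s
        elif self.state == "PAUSE" and now_s - self._pause_started >= PAUSE_S:
            self.state = "REROUTE"
        elif self.state == "REROUTE" and distance_cm > STOP_DISTANCE_CM:
            # Placeholder: real rerouting would steer around the obstacle via motor control.
            self.state = "DRIVE"
        return self.state
```

A simulated run with readings of 50, 12, 12, 12 and 40 cm at t = 0, 0.5, 1.5, 2.5 and 3.0 s steps through DRIVE, PAUSE, PAUSE, REROUTE and back to DRIVE. Keeping time as an argument rather than calling a clock inside the class is what makes this deterministic to test without hardware.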