18.11.2024 – 25.11.2024

“Alone we can do so little. Together we can do so much.”

-Helen Keller

Group Summary

Dearly beloved,

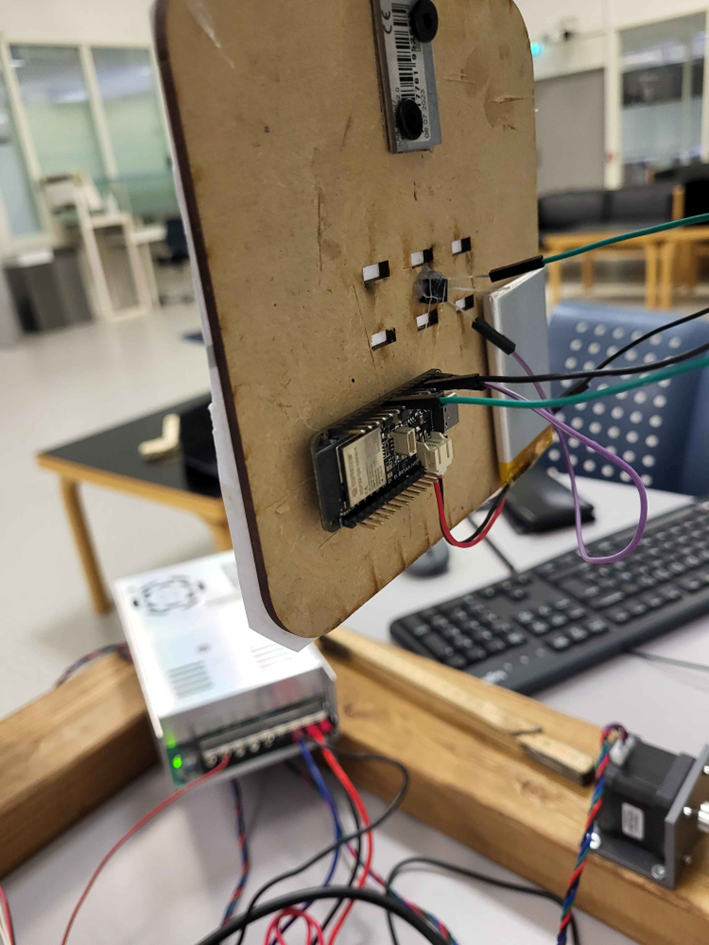

Friday afternoon, and at long last there is movement. Vetle got the components for the circuit board that controls the stepper motors. This means that once the board is wired up, we only need the IR circuit for the point-counting system and to fix the software. The main difficulty in this last phase has been combining all the smaller pieces of code into one large program that can run every function of the system. We also had to wire up the small IR circuit on the target itself so that it can recognize when the player scores a hit.

GitHub Repository:

Videos:

Unfortunately, we did not have time to combine all the code into a single program, so here are two videos showcasing the main functionality of the project:

Individual Summaries

Eirik (Data):

Monday:

- Robin had to stay home with a sick kid and had the Raspberry Pi with him, so I did not get to test the code with the servos.

- I still did some minor testing and modifications of the code.

Tuesday:

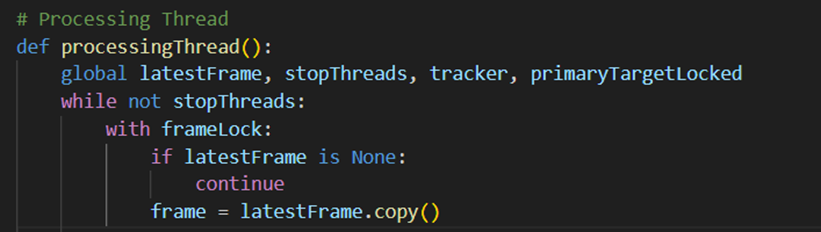

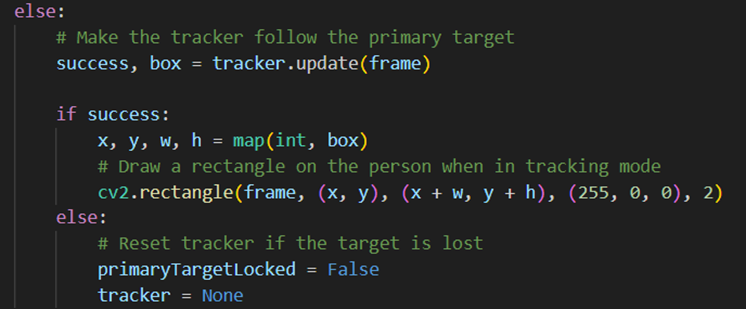

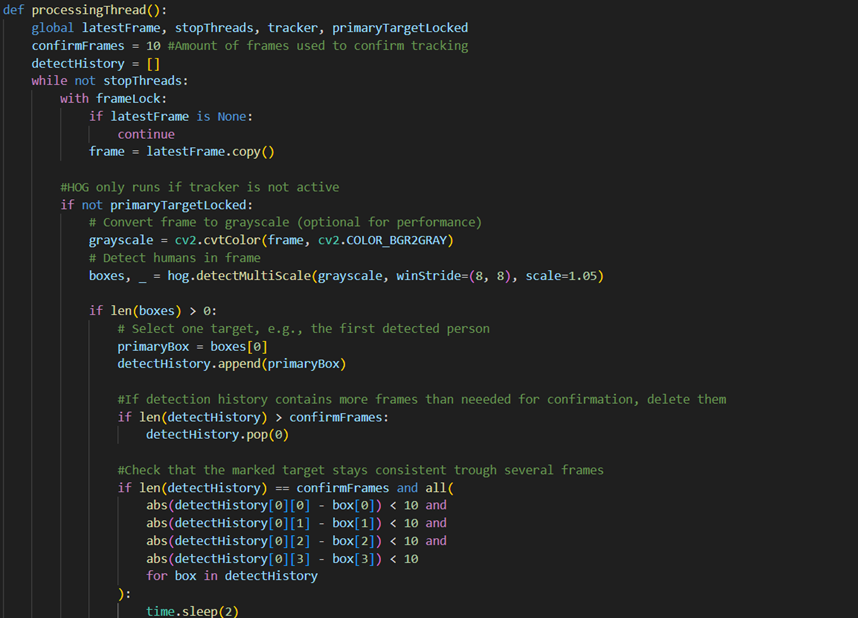

- Fixed the tracker variable problem in my code: the variable was not treated as global inside “processingThread”, where it is used.

- To resolve it, I simply declared it global so that it is available everywhere in the thread.

- The code now runs as intended, with the tracker locking onto the first person the HOG descriptor detects, so if anybody else walks into frame they will not be recognized.

- This implementation created some other issues that now need to be addressed.

- Because the tracker locks on almost immediately, if the HOG descriptor mistakes a random object for a human for a split second (which it often does), the tracker could lock onto a static object rather than the player.

- To fix this, I need to implement some kind of delay and/or confirmation process that ensures it locks onto the player.

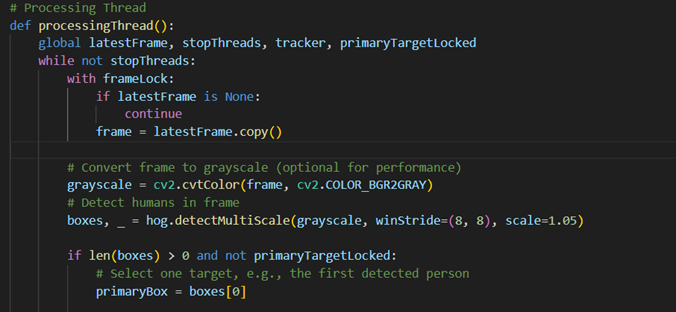

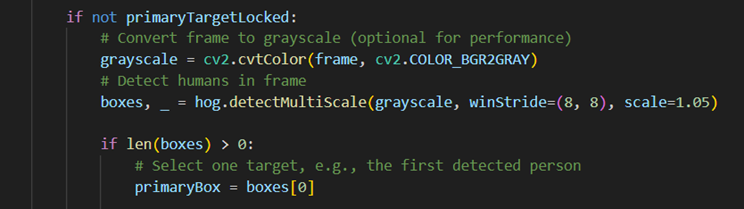

- The second problem is that the frame rate drops significantly with the tracker running. One solution is to turn off the HOG descriptor once the tracker is locked: since HOG is not needed to identify anything while the tracker is active, shutting it off frees up resources on the Raspberry Pi.

- The third problem we saw while testing was severe jittering in the servos.

- The jittering was so bad that we could barely see the movement driven by the computer vision code, although the servos did, to some degree, follow the person the camera focused on.

- The code itself is not the cause of the jittering (it is more of a connection issue), but we can improve it in code by changing pins and servo libraries.
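The two fixes described above (declaring the tracker global inside the thread, and skipping HOG once the tracker has locked) can be illustrated in plain Python. This is a minimal, hypothetical sketch rather than our actual code: `detect_person` stands in for OpenCV's `HOGDescriptor.detectMultiScale`, frames are plain dicts, and "updating" the tracker just reads the box from the frame.

```python
import threading

tracker = None   # shared tracker state (hypothetical stand-in for an OpenCV tracker object)
hog_calls = 0    # counts how often the stand-in HOG detector actually ran

def detect_person(frame):
    """Stand-in for HOGDescriptor.detectMultiScale: returns a box (x, y, w, h) or None."""
    global hog_calls
    hog_calls += 1
    return frame.get("person")

def processing_thread(frames):
    # Without this declaration, assigning to `tracker` below would make it a local
    # name, and the `tracker is None` check would raise UnboundLocalError.
    global tracker
    for frame in frames:
        if tracker is None:
            # HOG only runs until something is locked, which saves CPU afterwards.
            box = detect_person(frame)
            if box is not None:
                tracker = box  # lock onto the first detected person
        else:
            # Stand-in for tracker.update(frame); keep the last box if the person is missing.
            tracker = frame.get("person", tracker)

frames = [{}, {}, {"person": (10, 20, 50, 100)}, {"person": (12, 20, 50, 100)}]
worker = threading.Thread(target=processing_thread, args=(frames,))
worker.start()
worker.join()
print(tracker, hog_calls)  # (12, 20, 50, 100) 3
```

In this sketch the detector runs on the first three frames only; from the fourth frame on, only the (stand-in) tracker update runs.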

Letting the HOG descriptor shut off only required changing a few lines.

Before:

After:

Friday:

- While waiting for Vetle to rig up the electronics, Hans and I quickly made an actual target for the player to aim at. This was easily done with a flat piece of wood, some glue and a piece of paper.

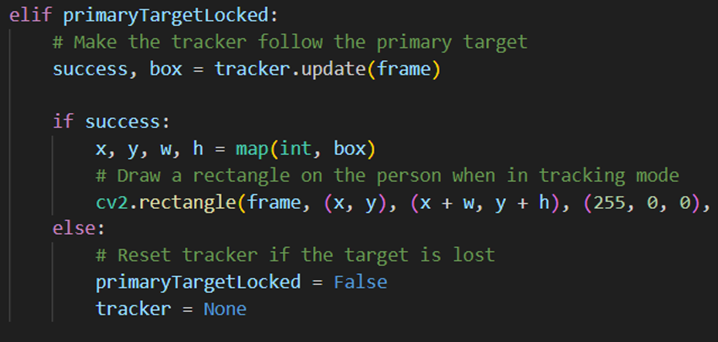

- After this, I started implementing the tracker-confirmation function in my code. The function uses a few of the previous frames to confirm that whatever it locks onto has consistently stayed in frame.

It worked, although the green rectangle should appear as soon as he enters the frame, since green marks a HOG descriptor detection while blue shows that the tracker has taken over. As this is a minor detail, I will leave it until everything else is properly up and running.
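One way to implement such a confirmation step is to require a detection in several consecutive frames, with each box overlapping the previous one, before handing it to the tracker. The sketch below is an illustration under assumptions, not the function in our repository: the class name `LockConfirmer`, the intersection-over-union (IoU) overlap test and both thresholds are hypothetical choices, and boxes use OpenCV's (x, y, w, h) layout.

```python
from collections import deque

def iou(a, b):
    """Intersection over union of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    ix = max(0, min(ax + aw, bx + bw) - max(ax, bx))
    iy = max(0, min(ay + ah, by + bh) - max(ay, by))
    inter = ix * iy
    union = aw * ah + bw * bh - inter
    return inter / union if union else 0.0

class LockConfirmer:
    """Hypothetical helper: only confirm a box seen in N consecutive, overlapping frames."""

    def __init__(self, frames_required=5, min_iou=0.3):
        self.history = deque(maxlen=frames_required)
        self.min_iou = min_iou

    def update(self, box):
        """Feed one frame's detection (or None). Returns the box to lock onto, or None."""
        if box is None:
            self.history.clear()  # a gap resets the streak, filtering one-frame false positives
            return None
        if self.history and iou(self.history[-1], box) < self.min_iou:
            self.history.clear()  # jumped to a different object: start counting again
        self.history.append(box)
        if len(self.history) == self.history.maxlen:
            return box  # seen consistently for N frames: safe to start the tracker
        return None
```

With `frames_required=3`, a detection that appears for one frame and then disappears never reaches the tracker, while a person who stays in frame is confirmed on the third consecutive detection.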

Saturday:

- Vetle and I began the day by trying to fix the servo control in the computer vision code, since it was not moving the servos based on the camera input.

- By switching to the Adafruit ServoKit library, we got rid of the jittering: the servos now stand completely still unless they are given input to move.

- At first, the servo angles stayed at a constant value even though we moved around and the camera tracker followed us as it should.

- After some testing, we realized that the servo angles computed by the code were decimals rather than whole numbers, and the servos would only accept integers.

- This was easily resolved with Python's floor() function, which rounds a decimal down to the nearest whole number.

- We also had to narrow the range between the minimum and maximum angles; otherwise the servos could “go out of range” and crash the program.

- Restructured our GitHub repository to make it more accessible and easier to navigate.

- Began (trying) to combine my computer vision code with the rest of the code so that everything runs as one single program.
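The angle fix described above comes down to converting the computed angle to an integer and clamping it to a safe range before writing it to the servo. A minimal sketch, where the limits of 10 and 170 degrees are placeholders rather than the values we actually used; with the Adafruit ServoKit the result would then be assigned to a servo's `angle` attribute (channel 0 in the comment is an assumption).

```python
import math

# Placeholder limits; the real, reduced range depends on the mechanics.
SERVO_MIN = 10
SERVO_MAX = 170

def to_servo_angle(raw_angle, lo=SERVO_MIN, hi=SERVO_MAX):
    """Round a computed angle down to an int and clamp it to [lo, hi]."""
    angle = math.floor(raw_angle)   # floor rounds down, e.g. 92.7 -> 92
    return max(lo, min(hi, angle))  # never command an angle outside the safe range

# On the Pi this would feed the PCA9685 through ServoKit, e.g.:
#   from adafruit_servokit import ServoKit
#   kit = ServoKit(channels=16)
#   kit.servo[0].angle = to_servo_angle(computed_angle)
print([to_servo_angle(a) for a in (92.7, -5.2, 200.0)])  # [92, 10, 170]
```

If rounding to the nearest whole number is preferred over always rounding down, `round()` could replace `math.floor()` here.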

Monday:

- This day was dedicated to getting the project as close to completion as we possibly could in these last few hours.

- This mainly meant working on the software side of the project, making smaller fixes in the code.

Robin (Data):

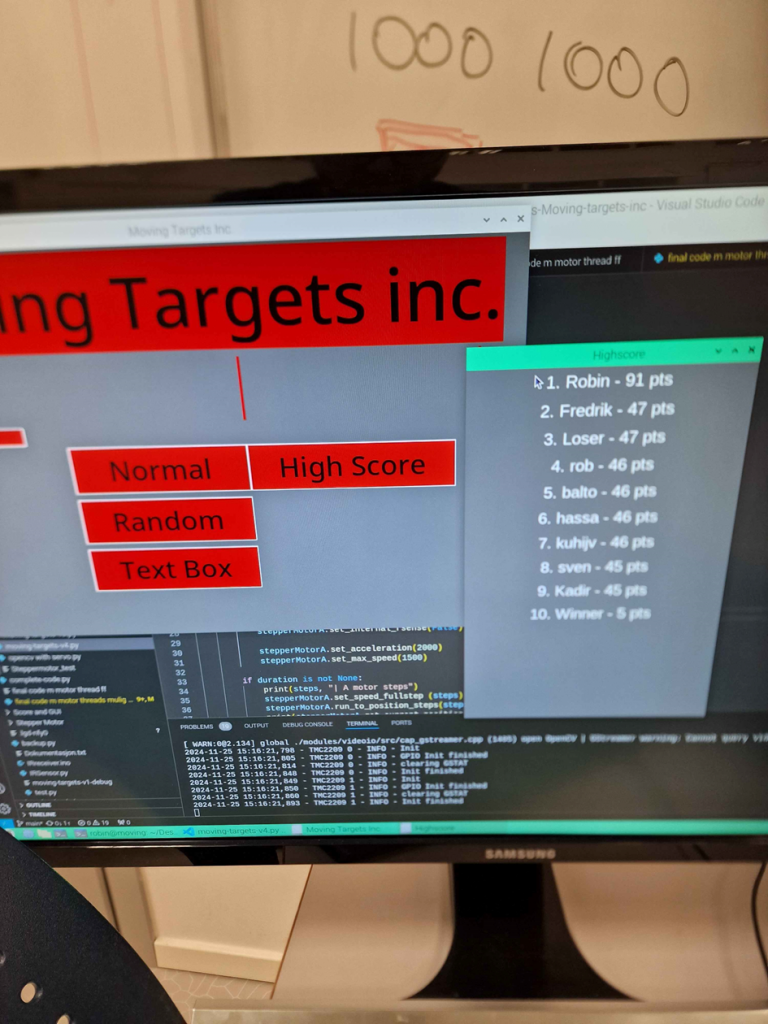

I started the week working with Fredrik to finish the final software. We had to do quite a lot of bug fixing, but eventually all the game modes should be functional. I also implemented a high-score list where the player can type in their name and is highlighted if their score is within the top 10.
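The high-score behaviour can be sketched as: add the new result, keep the ten best, and report the new entry's rank so the interface can highlight it (or not, if it fell outside the list). This is a hypothetical sketch; `add_high_score`, `format_table` and the (name, score) tuple format are illustrative, not our game's actual code.

```python
def add_high_score(scores, name, score, limit=10):
    """Return (top_scores, rank); rank is 1-based, or None if the new score missed the list."""
    entries = list(scores) + [(name, score)]
    new_index = len(entries) - 1
    # sorted() is stable (also with reverse=True), so on a tie the older entry stays ahead.
    order = sorted(range(len(entries)), key=lambda i: entries[i][1], reverse=True)[:limit]
    top = [entries[i] for i in order]
    rank = order.index(new_index) + 1 if new_index in order else None
    return top, rank

def format_table(top, rank):
    """Render the table, marking the player's new entry with an arrow."""
    lines = []
    for place, (who, points) in enumerate(top, start=1):
        marker = "->" if place == rank else "  "
        lines.append(f"{marker} {place:2}. {who:<10} {points}")
    return "\n".join(lines)

top, rank = add_high_score([("Ada", 50), ("Bob", 40)], "Cy", 45)
print(format_table(top, rank))
```

Tracking the new entry by its index rather than by its name or score avoids highlighting the wrong row when two players share a name or a score.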

Then we created a gameplay loop so that we didn’t have to close the application for every new attempt.

After that, I spent a lot of time trying to merge all the different parts of the code.

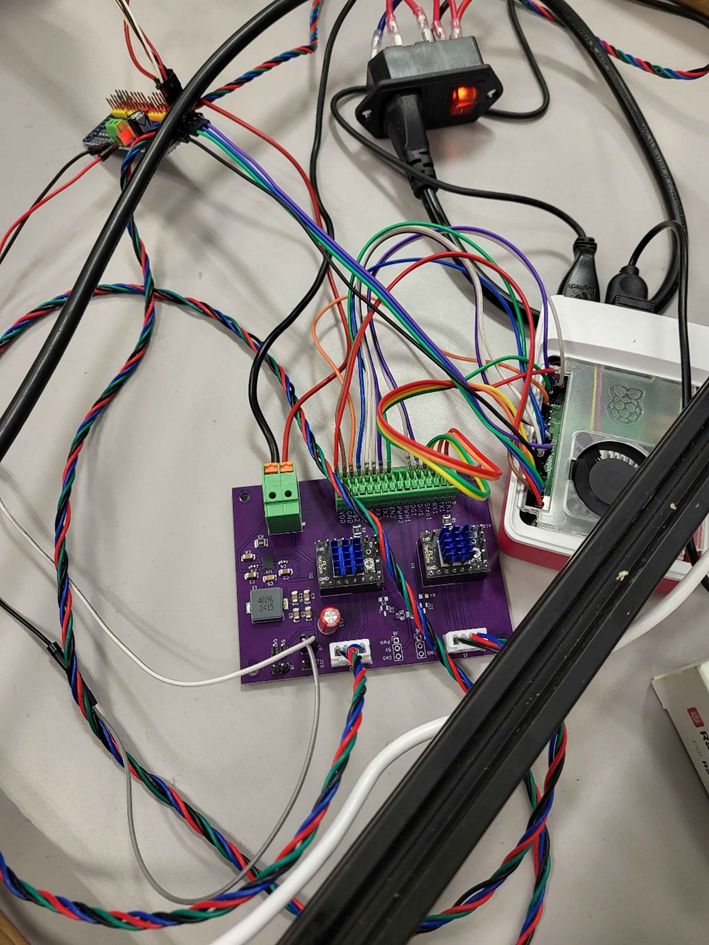

On Friday, we finally got our PCB and could at last start testing our code! Oh happy, glorious day! The initial code had to be revamped, as we had to change the stepperMotor library and learn how the new library works. We got the system up and running so that we could enter stepper motor positions to move the target around. However, we noticed that only one stepper motor ran at a time, and there is some sort of bug where a motor sometimes moves in the opposite direction of where it should go.

We tried for a long time to implement a stall guard, to no avail, so we started mapping the boundaries manually, which proved quite hard since the stepper motors are currently a bit unreliable.

We tried to debug the servo motors so that they would match the movement of the player. However, because of the mechanical ratios, our 180-degree servo could only rotate 50 degrees, so the aim would not be set correctly.

We were then able to fix the stepper motors so that they moved to the correct positions, which let us map out a game playthrough.

We also tried to integrate the IR receiver, which Vetle had gotten to work on an Arduino. On the Raspberry Pi, however, we could not get it fully working: it used a loop_forever() function that stopped all other programs, and even though we tried to run it in a separate thread in the hope of fixing this, it did not help.
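When only one stepper motor moves at a time, a common cause is that each move is a blocking call, so the second axis only starts after the first has finished. Below is a hedged sketch of running both axes concurrently with one thread each; `move_axis`, `move_both` and the `step_fn(axis, direction)` callback are hypothetical stand-ins for whatever the stepper library actually provides. The direction is derived in a single place from the sign of the step count, which is also a convenient spot to check when a motor turns the wrong way.

```python
import threading
import time

def move_axis(axis, steps, step_fn, delay=0.001):
    """Issue abs(steps) pulses on one axis; the sign of `steps` picks the direction."""
    direction = 1 if steps >= 0 else -1
    for _ in range(abs(steps)):
        step_fn(axis, direction)
        time.sleep(delay)  # pulse spacing; sets the speed of this axis

def move_both(x_steps, y_steps, step_fn):
    """Run both axes at the same time instead of one after the other."""
    workers = [
        threading.Thread(target=move_axis, args=("x", x_steps, step_fn)),
        threading.Thread(target=move_axis, args=("y", y_steps, step_fn)),
    ]
    for w in workers:
        w.start()
    for w in workers:
        w.join()  # return only when both axes have finished

# Example with a recording callback instead of real GPIO pulses.
log = []
log_lock = threading.Lock()

def record_step(axis, direction):
    with log_lock:
        log.append((axis, direction))

move_both(20, -15, record_step)
```

Python threads on a Raspberry Pi do not give precise pulse timing, so this only illustrates the structure; an alternative is a single loop that interleaves the steps of both axes.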
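A blocking receive loop can be kept out of the game loop by running it in a background thread and passing hits through a thread-safe queue. If the receiver used the paho-mqtt client (an assumption; loop_forever() is the blocking network loop in that library), paho also provides loop_start(), which runs the network loop in its own thread. A common pitfall when threading it by hand is writing `target=client.loop_forever()` with parentheses, which calls the blocking function immediately in the main thread instead of handing it to the new thread. The sketch below uses a hypothetical stand-in loop so that it runs without a broker; all names in it are illustrative.

```python
import queue
import threading
import time

hits = queue.Queue()  # thread-safe hand-off from the receiver thread to the game loop

def on_message(payload):
    """Called from the receiver thread for every received IR hit."""
    hits.put(payload)

def fake_loop_forever(messages):
    """Stand-in for a blocking network loop such as paho-mqtt's loop_forever()."""
    for payload in messages:
        time.sleep(0.01)  # pretend we waited for the broker
        on_message(payload)

def start_receiver(messages):
    # Pass the function itself as target (no parentheses), so it runs in the new thread.
    receiver = threading.Thread(target=fake_loop_forever, args=(messages,), daemon=True)
    receiver.start()
    return receiver

def poll_hits():
    """Drain all hits received so far without blocking; call once per game-loop tick."""
    received = []
    while True:
        try:
            received.append(hits.get_nowait())
        except queue.Empty:
            return received

receiver = start_receiver(["hit", "hit"])
receiver.join(timeout=2)  # only for the demo; a game loop would keep running instead
print(poll_hits())  # ['hit', 'hit']
```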

Fredrik (Data):

On Monday, I coded with Robin, fixing bugs and implementing some new features, as Robin mentioned above.

Then, on the following Monday, which was the final sprint, Eirik, Robin and I worked on the code to make the turret track the player correctly. We also tried to get the motor threading to work and to integrate the IR sensor that Vetle made.

Vetle (Electrical):

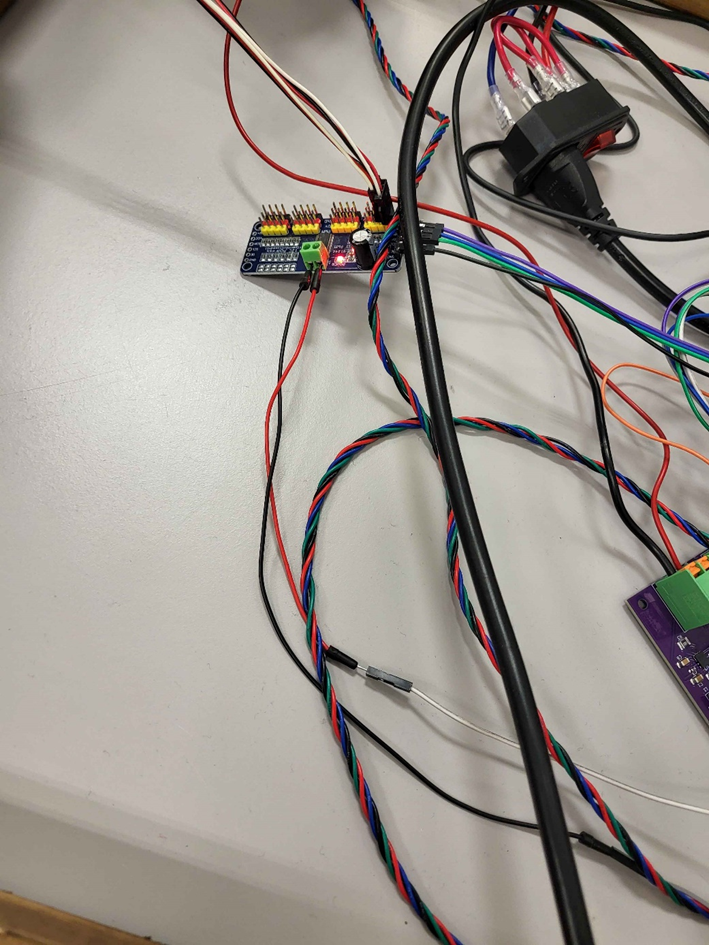

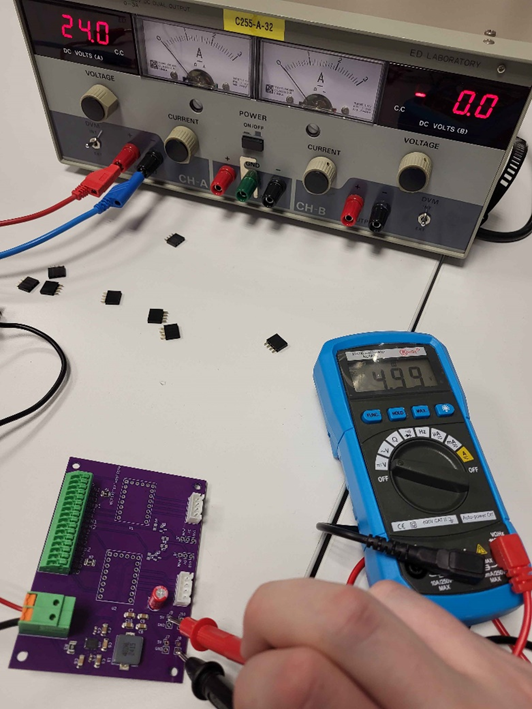

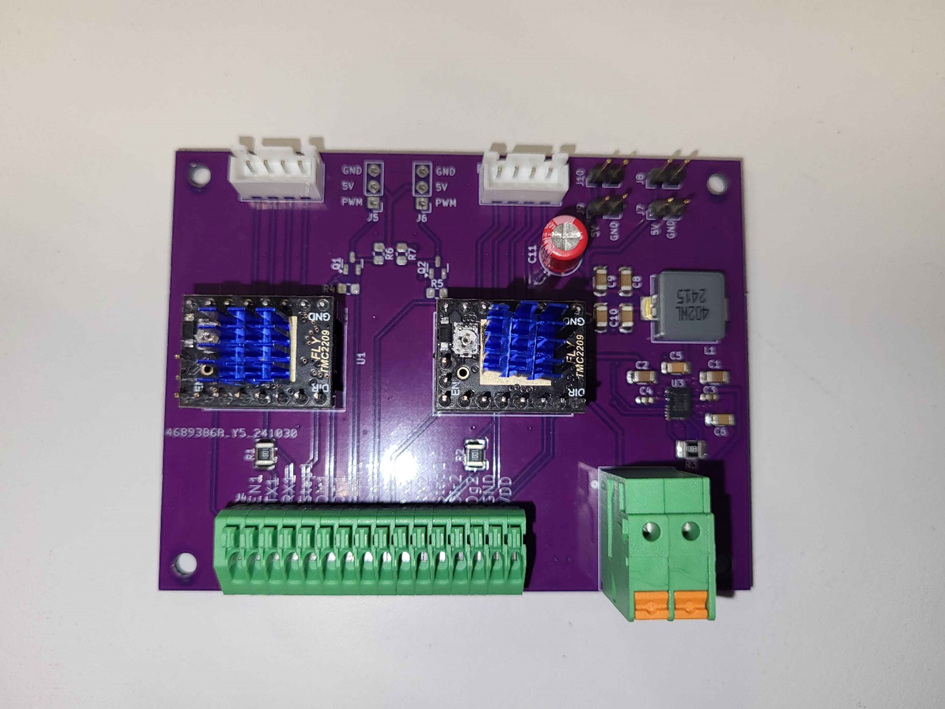

I got the components for the PCB this week and was able to solder them on and test the board. I tested the voltage regulator with only the SMD components fitted, and it gave an output of 5 V as expected, but the voltage dropped when a load was applied. This may be because the 220 µF electrolytic capacitor was not in place, which could cause a higher voltage ripple.

Since we moved away from controlling the servos directly from the RPi 5, the 3.3 V to 5 V logic level converters and their connectors are left unpopulated. We made this change because generating the PWM signal with the RPi 5 caused a lot of jitter, so we moved to a PCA9685 that we are borrowing from group 3.

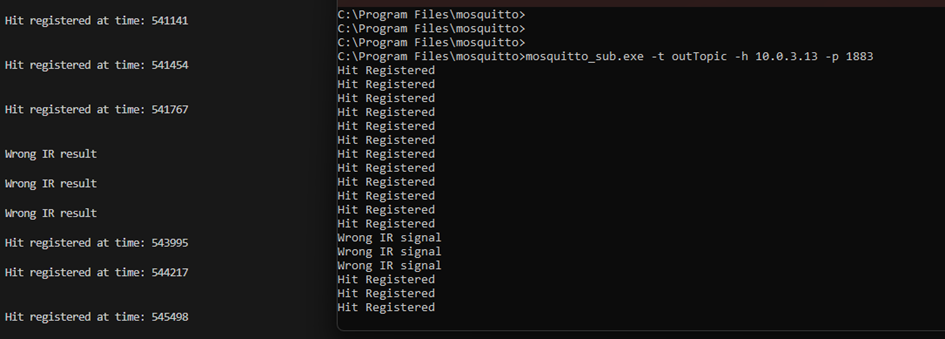

I have programmed the ESP32-S3 for the target using the PubSubClient and IRremoteESP8266 libraries, so it can decode the IR signal it receives from the TSOP4840 IR receiver and then publish it on an MQTT server. The IR signal I am sending is a Sony TV code, which was convenient since I have a Sony remote, and it uses 40 kHz IR signals. The remote I have been using emits too strong an IR signal, so reflections became an issue. I have also made an IR transmitter using the TSAL6200 and an Arduino Mega 2560; by limiting the current through the TSAL6200 to around 17 mA, the reflections became less of a problem. I tried it with a Raspberry Pi Pico as well, but I encountered some issues I could not troubleshoot before the deadline. I tried using a 2N2222 and a 2N3904 transistor to switch the IR diode from a signal pin on the RPi Pico. It switched on, but the IR signal was too weak to detect. If I had had another ESP32-S3, I could have used the same library to implement the IR transmitter and, in theory, driven it directly from the pins.

Below you can see the serial monitor output of the ESP32-S3 and what an MQTT subscriber sees when the ESP32-S3 publishes a message to the MQTT broker.
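For reference, an IR LED's current is usually limited with a series resistor sized by Ohm's law, R = (V_supply − V_f) / I. The numbers below are assumptions for illustration only: a 5 V output pin on the Arduino Mega 2560 and a forward voltage of about 1.3 V for the TSAL6200 at low current (the real value should be read from the datasheet curve). The report does not say which resistor was actually used.

```python
def series_resistor(v_supply, v_forward, current_a):
    """Ohm's law for an LED series resistor: R = (V_supply - V_f) / I, in ohms."""
    if current_a <= 0 or v_supply <= v_forward:
        raise ValueError("need positive current and V_supply > V_f")
    return (v_supply - v_forward) / current_a

# Assumed values: 5 V pin, ~1.3 V forward voltage, 17 mA target current.
r = series_resistor(5.0, 1.3, 0.017)
print(round(r))  # 218
```

Under the same assumptions, the nearest standard value of 220 Ω would give slightly less than 17 mA (about 16.8 mA).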

Kadir (Mechanical):

Hans (Mechanical):

This week I have been:

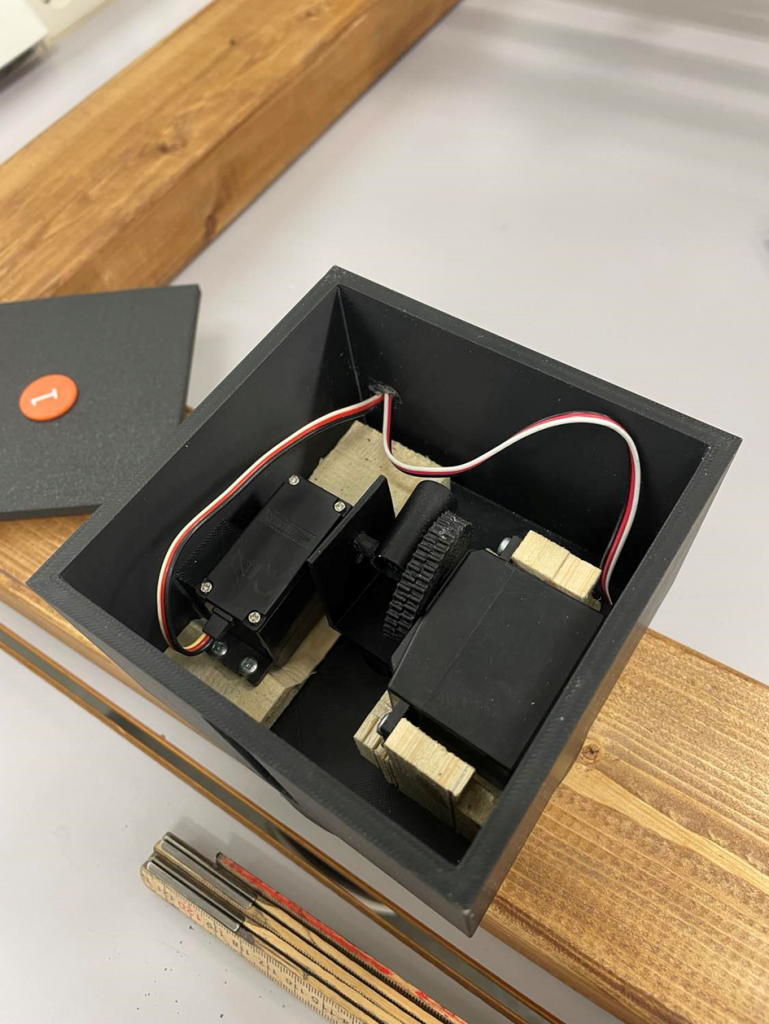

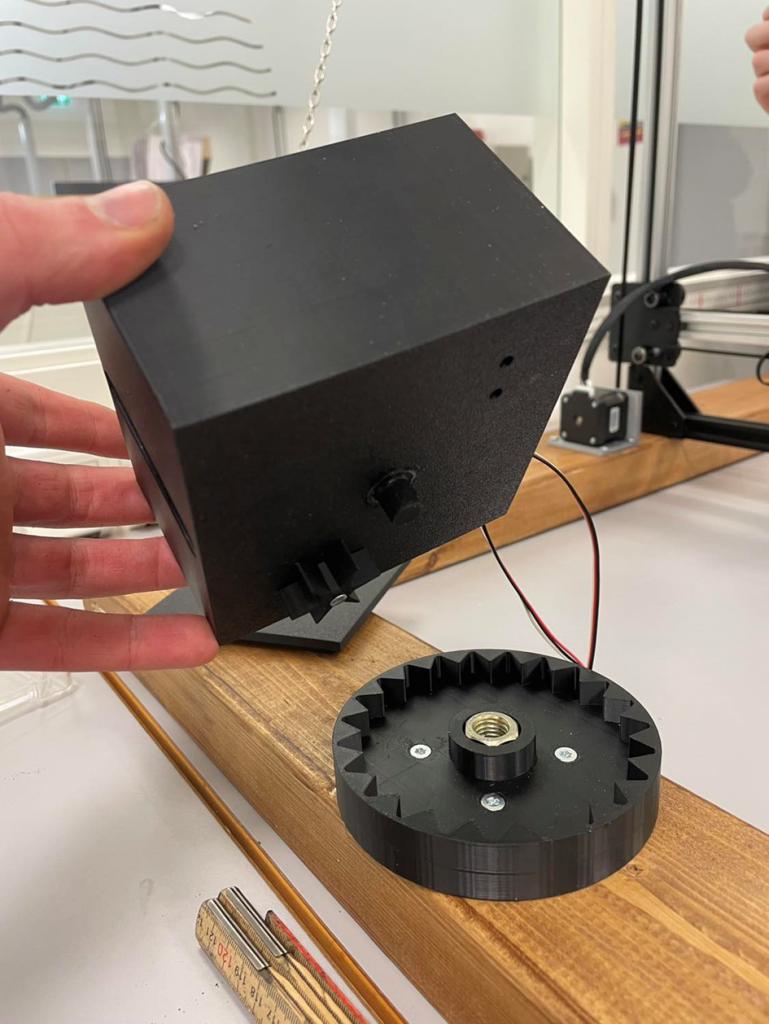

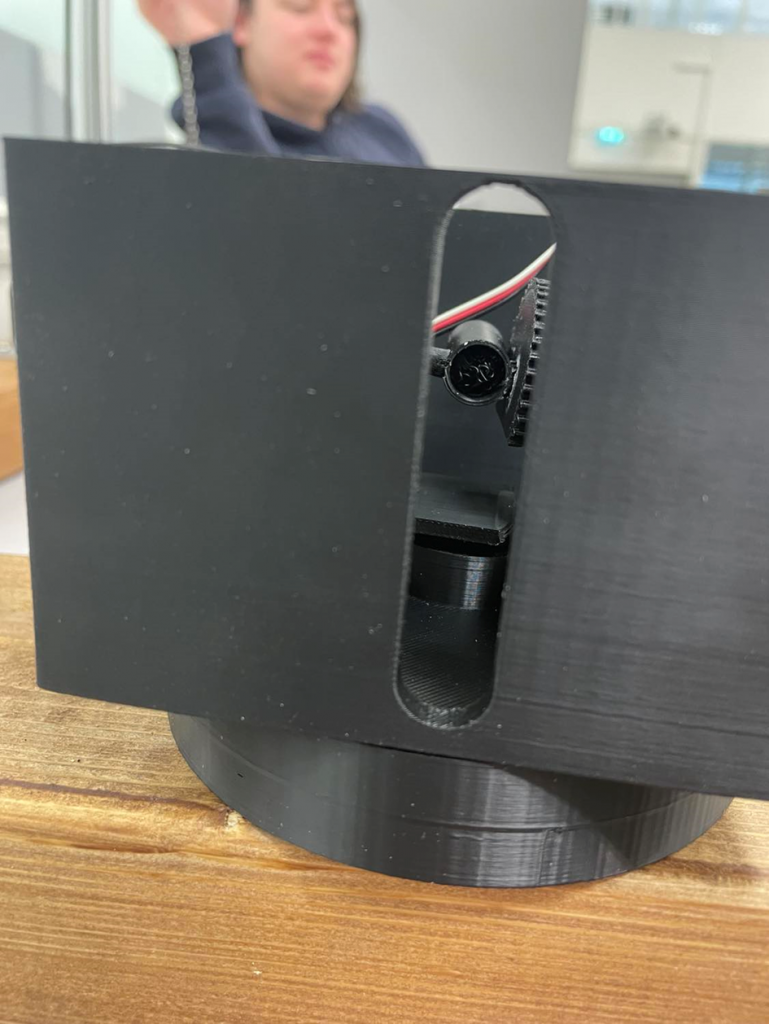

– Making new servo holders due to new servoes provided did not match our original design.

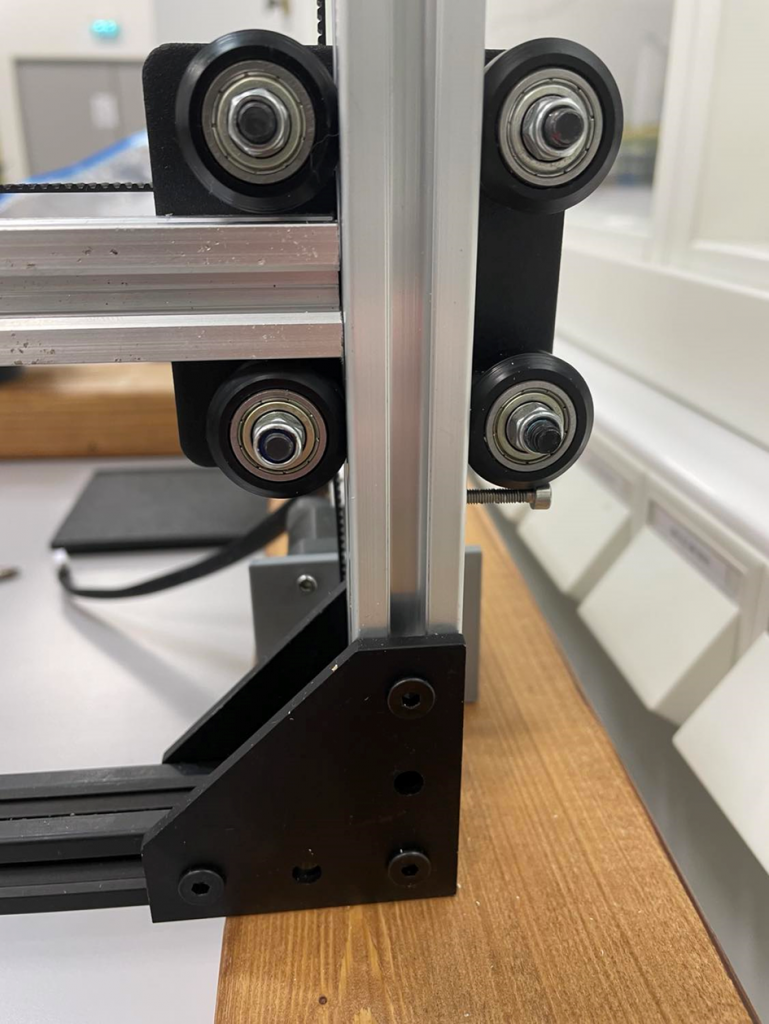

-Installed the Shootback system on the base, with servos.

-Mounted the target to our system, and in this prosess also mounted som downward endstops en dolly to prevent cashing the target into the ground.

-Made a gun to bee used for shooting at the target ready for computer and elekto.